IaC 2.0: Beyond Terraform – The Next Wave of Infrastructure Automation

Introduction: The Rise of Terraform and the Limits of IaC 1.0

Over the past decade, Infrastructure as Code (IaC) has transformed the way organizations manage and provision cloud resources. At the forefront of this movement has been Terraform, a tool developed by HashiCorp (open source until its 2023 move to the Business Source License), which quickly became the de facto standard for declaratively managing infrastructure across public cloud providers like AWS, Azure, and GCP.

With Terraform, engineers could define infrastructure in human-readable configuration files, track changes in version control, and provision infrastructure reliably and repeatably. It enabled a critical shift from click-based UIs and ad hoc scripts to predictable, automated workflows, ushering in what we now call IaC 1.0.

This first generation of IaC introduced enormous benefits: better collaboration via Git, infrastructure versioning, code review pipelines, reduced human error, and more scalable cloud provisioning. Terraform’s provider ecosystem made it uniquely extensible, allowing users to manage not only compute resources but also DNS, storage, monitoring, and SaaS tools.

However, as infrastructure complexity has evolved, the cracks in the IaC 1.0 model have started to show. Organizations now operate in multi-cloud and hybrid environments, orchestrate thousands of microservices, manage dynamic workloads with Kubernetes, and rely heavily on ephemeral resources: challenges that the original Terraform model wasn’t fully built to handle.

Terraform’s declarative approach, while simple, makes complex logic cumbersome. HCL does offer count, for_each, and conditional expressions, but anything beyond simple cases tends to require workarounds that are hard to read and brittle to change. Writing truly reusable infrastructure modules often means sacrificing flexibility or maintainability.

Additionally, state management becomes a central point of fragility. Shared state files can become a bottleneck in collaborative environments, while drift between the declared and actual state frequently leads to configuration inconsistencies and unexpected behavior.

These limitations grow more pronounced at scale, where change management, auditing, and compliance become top concerns.

Security is another weak point in the IaC 1.0 paradigm. Traditional IaC tools often lack first-class support for policy enforcement and secret management, leaving these concerns to be bolted on after the fact. There’s minimal built-in support for policy-as-code, meaning teams must rely on external tools to enforce compliance and governance, often leading to fragmented workflows.

In modern DevSecOps cultures, security must be proactive and continuous, not reactive and manual.

Furthermore, Terraform’s execution model is batch-based, meaning infrastructure changes are applied in a single, planned step. This creates long feedback loops and makes infrastructure difficult to test in real time.

Continuous Delivery and GitOps require more event-driven, reactive systems, something IaC 1.0 does not inherently support. In Kubernetes environments, this gap is especially evident, as Terraform lacks native integration with Kubernetes controllers or reconciliation loops.

Tooling fragmentation also plagues the IaC ecosystem. As developers layer on additional tools for testing, secret injection, policy validation, and drift detection, infrastructure pipelines become more brittle and complex.

Terraform alone cannot provide a seamless, end-to-end infrastructure automation experience. And while Terraform Enterprise and third-party platforms aim to fill in the gaps, they often come with significant overhead and cost.

All of this signals a clear need for evolution. As cloud-native architectures continue to grow in scope and complexity, the underlying infrastructure tooling must evolve as well. The principles that made Terraform successful (declarative configuration, cloud abstraction, and version control integration) are still valid.

But the next generation of infrastructure automation must build on these foundations while addressing their limitations. That brings us to the emerging paradigm of IaC 2.0: an era defined by intelligent, programmable, policy-driven, and event-aware infrastructure as code systems designed for the demands of the modern software delivery lifecycle.

What Is IaC 2.0?

Infrastructure as Code 2.0 (IaC 2.0) represents the evolution of infrastructure automation beyond the limitations of traditional declarative tools like Terraform.

While IaC 1.0 was focused on defining infrastructure in code and using static templates to provision resources, IaC 2.0 introduces a more dynamic, intelligent, and integrated approach.

It combines the strengths of programming languages, policy enforcement, automation pipelines, and cloud-native tooling to deliver infrastructure that is not only codified but also aware, adaptive, and secure by design. This new paradigm embraces programming-language-native IaC tools like Pulumi, AWS CDK, and CDK for Terraform (CDKTF), allowing developers to write infrastructure using familiar languages such as TypeScript, Python, or Go.

This unlocks richer logic, abstraction, and reuse, enabling teams to build infrastructure that behaves more like software.
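To make "infrastructure that behaves more like software" concrete, here is a minimal sketch in plain JavaScript. It does not use the Pulumi or CDK APIs: plain objects stand in for resources, and `buildBuckets`, the `storage-bucket` type, and the field names are all hypothetical. The point is only that an ordinary loop and conditional replace copy-pasted template blocks.

```javascript
// Hypothetical sketch: plain objects stand in for cloud resources.
// A real tool (Pulumi, AWS CDK, CDKTF) would supply resource classes instead.

/**
 * Build one bucket definition per environment,
 * with stricter settings applied only to production.
 */
function buildBuckets(environments, { project }) {
  return environments.map((env) => {
    const isProd = env === "prod";
    return {
      type: "storage-bucket",
      name: `${project}-${env}-assets`,
      versioning: isProd, // conditional logic instead of duplicated templates
      retentionDays: isProd ? 365 : 30,
      tags: { project, env },
    };
  });
}

const buckets = buildBuckets(["dev", "staging", "prod"], { project: "shop" });
console.log(buckets.map((b) => `${b.name} (retention ${b.retentionDays}d)`));
```

Adding a fourth environment is a one-word change to the input array, which is the kind of reuse the language-native tools aim for.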

IaC 2.0 is GitOps-native, treating Git repositories as the single source of truth and tightly integrating with CI/CD workflows to enable automated testing, deployment, and rollback of infrastructure changes.

It brings infrastructure closer to modern development practices and empowers teams to manage infra like application code.

At the same time, it incorporates policy-as-code frameworks like Open Policy Agent (OPA) and Sentinel to enforce security, compliance, and operational rules as part of the deployment process. This means infrastructure changes are validated not only against desired configurations but also against organizational and regulatory standards before they are ever applied.
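The idea of validating a change against rules before it is applied can be sketched without any policy engine. The example below is not Rego (OPA) or Sentinel; it is a simplified stand-in in JavaScript, and the policy IDs, resource shapes, and the `evaluatePlan` function are assumptions made for illustration.

```javascript
// Each policy is a named predicate over a planned resource (hypothetical shape).
const policies = [
  {
    id: "no-public-buckets",
    appliesTo: "storage-bucket",
    check: (r) => r.publicAccess !== true,
  },
  {
    id: "require-owner-tag",
    appliesTo: "*", // applies to every resource type
    check: (r) => Boolean(r.tags && r.tags.owner),
  },
];

/** Return one violation record per (resource, failed policy) pair. */
function evaluatePlan(plannedResources, rules) {
  const violations = [];
  for (const resource of plannedResources) {
    for (const rule of rules) {
      const inScope = rule.appliesTo === "*" || rule.appliesTo === resource.type;
      if (inScope && !rule.check(resource)) {
        violations.push({ resource: resource.name, policy: rule.id });
      }
    }
  }
  return violations;
}

const plan = [
  { type: "storage-bucket", name: "logs", publicAccess: true, tags: { owner: "platform" } },
  { type: "vm", name: "worker-1", tags: {} },
];
const violations = evaluatePlan(plan, policies);
console.log(violations);
```

In a pipeline, a non-empty violations list would fail the build before anything is provisioned.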

Another defining trait of IaC 2.0 is its event-driven and runtime-aware nature. Instead of just planning and applying infrastructure once, the new model allows for continuous reconciliation between desired and actual state.

Tools like Crossplane, Kubernetes controllers, and advanced orchestration platforms enable self-healing, autonomous infrastructure that can respond to real-time signals, scale dynamically, and maintain compliance without human intervention.

This fundamentally changes how teams think about uptime, resilience, and scalability.
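The reconciliation idea can be reduced to a small sketch: compare desired state with actual state, derive the actions needed, and apply them until nothing is left to do. This is not how Crossplane or any Kubernetes controller is implemented; `diffState`, `reconcile`, and the state shapes are hypothetical, and a real controller would run this repeatedly against live APIs.

```javascript
/** Compute the actions needed to move `actual` toward `desired`. */
function diffState(desired, actual) {
  const actions = [];
  for (const [name, spec] of Object.entries(desired)) {
    if (!(name in actual)) {
      actions.push({ action: "create", name, spec });
    } else if (JSON.stringify(actual[name]) !== JSON.stringify(spec)) {
      // Simplification: string comparison is sensitive to key order.
      actions.push({ action: "update", name, spec });
    }
  }
  for (const name of Object.keys(actual)) {
    if (!(name in desired)) actions.push({ action: "delete", name });
  }
  return actions;
}

/** Apply one reconciliation pass and return the resulting state. */
function reconcile(desired, actual) {
  const next = { ...actual };
  for (const step of diffState(desired, actual)) {
    if (step.action === "delete") delete next[step.name];
    else next[step.name] = step.spec;
  }
  return next;
}

const desired = { web: { replicas: 3 }, db: { size: "large" } };
const actual = { web: { replicas: 2 }, cache: { size: "small" } };
const actions = diffState(desired, actual);
const converged = reconcile(desired, actual);
console.log(actions.map((a) => `${a.action} ${a.name}`));
```

Running `diffState` again on the converged state yields no actions, which is the steady state a reconciliation loop is always trying to reach.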

IaC 2.0 doesn’t replace Terraform; it builds on its principles while addressing its blind spots. It enables more flexible, secure, and collaborative infrastructure management across modern, distributed systems.

Ultimately, IaC 2.0 is about creating infrastructure platforms that are programmable, composable, policy-enforced, and deeply integrated into the software delivery lifecycle, ushering in a new era of infrastructure as software.

Core Principles of IaC 2.0

- Imperative + Declarative Hybrid Move beyond static YAML/JSON/HCL with tools like:

- Pulumi (TypeScript, Python, Go)

- CDK (Cloud Development Kit) by AWS (TypeScript, Python, Java)

Benefits: - Loops, conditionals, and functions

- Reusability via modules and classes

- Shift-Left Security & Policy-as-Code Embed security into the provisioning workflow:

- OPA (Open Policy Agent) for guardrails

- Checkov, tfsec, Conftest for static scanning

- Ensure compliance before deployment, not after

- Dynamic & Context-Aware Provisioning Infrastructure that adapts to:

- Cost/usage metrics

- Cluster load

- Real-time events (e.g., scaling based on traffic spikes)

- GitOps & Continuous Deployment Integration IaC becomes part of CI/CD:

- Tools like Argo CD, Flux, and Spacelift

- Infrastructure changes are triggered and validated via Git commits and pull requests

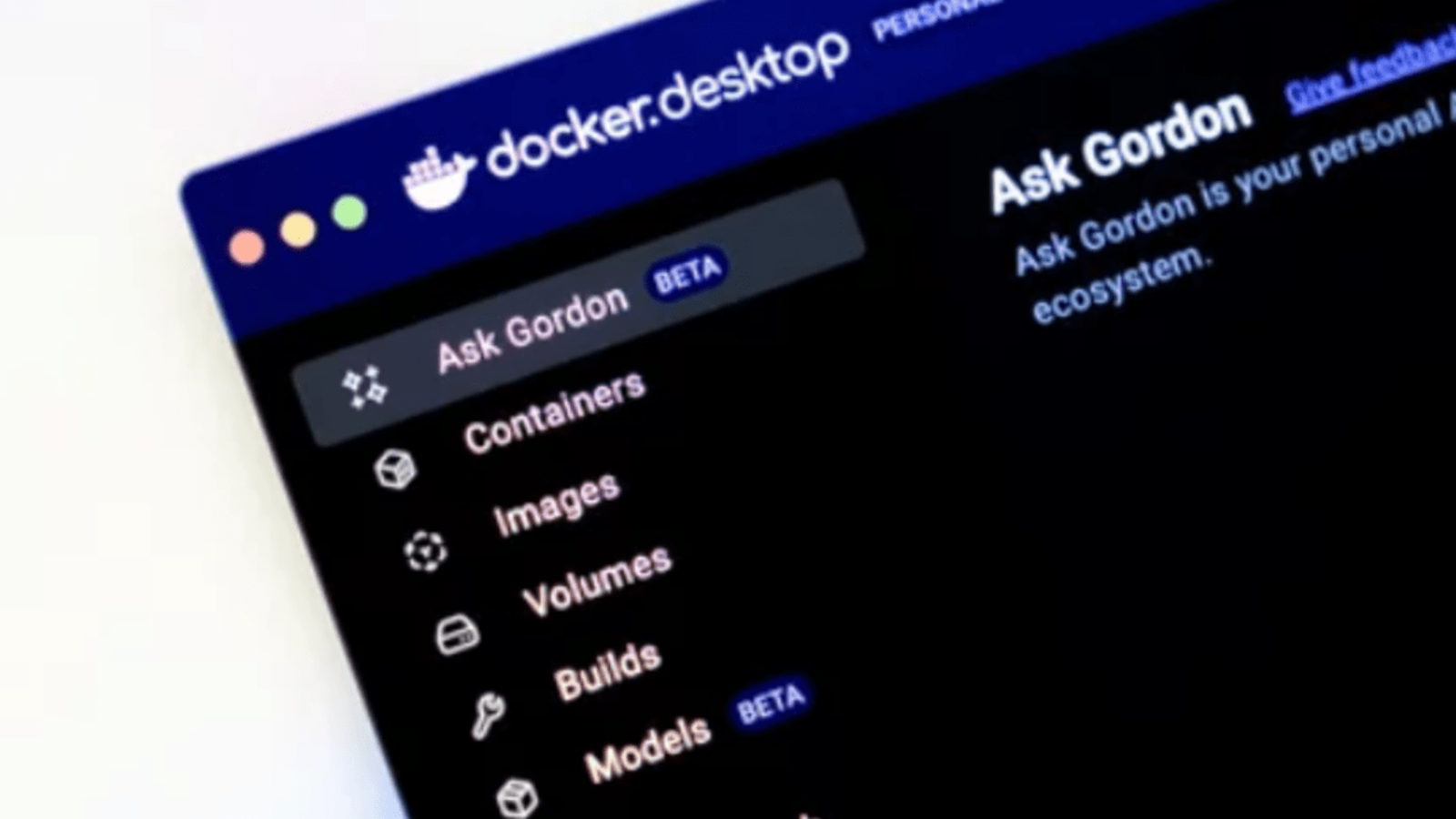

- AI-Augmented IaC

- Copilot-like suggestions for infrastructure

- Automated remediation of drift or misconfiguration

- AI-generated documentation and compliance reports

- Drift Detection & Self-Healing Infrastructure Tools that continuously reconcile desired state with actual state:

- Crossplane, Terraform Cloud, Kubernetes Operators

Key Tools Powering IaC 2.0

| Category | Tools/Platforms |
|---|---|
| Language-native IaC | Pulumi, AWS CDK, CDKTF (Terraform CDK) |
| GitOps Automation | Argo CD, Flux, Spacelift |
| Policy-as-Code | OPA, Sentinel (HashiCorp), Checkov |
| Self-healing Infra | Crossplane, Kubernetes Controllers/Operators |
| Secret Management | Vault, SOPS, Doppler |
| Drift Detection | Terraform Cloud, env0, Firefly |

Challenges of IaC 2.0

- Increased complexity: More moving parts and abstraction layers

- Skill gaps: Developers need to learn programming + DevOps principles

- Tool sprawl: Many overlapping tools in the ecosystem

- Security risks: More power in the hands of code, more room for mistakes

The Road Ahead: What’s Next?

- Standardization: Just as Kubernetes became the de facto standard for container orchestration, IaC 2.0 needs common, openly governed foundations (e.g., OpenTofu)

- Platform Engineering: IaC as part of internal developer platforms (IDPs)

- AI-Driven InfraOps: Predictive scaling, anomaly detection, self-optimizing infra

- Composable Infrastructure: APIs over static templates, driven by service catalogs

Conclusion

IaC 2.0 is not just about managing infrastructure; it’s about engineering platforms that are secure, observable, and developer-friendly. While Terraform remains a cornerstone, the future demands more flexibility, dynamism, and intelligence in how we define and manage our systems.

Welcome to the next wave of infrastructure automation.

What is AWS Fargate? A Serverless Container Service Explained

What Is AWS Fargate?

AWS Fargate is a serverless compute engine for containers offered by Amazon Web Services. It allows users to run containers directly without managing the underlying servers, infrastructure, or clusters. With traditional container environments, developers typically need to provision, scale, and maintain the EC2 instances on which containers run.

AWS Fargate removes that responsibility, letting developers focus purely on building and deploying applications. It works seamlessly with both Amazon ECS (Elastic Container Service) and Amazon EKS (Elastic Kubernetes Service).

When a task is launched in ECS or a pod is deployed in EKS, Fargate allocates the required compute resources and automatically handles provisioning, scaling, and infrastructure maintenance behind the scenes.

This makes AWS Fargate particularly attractive for teams that want to streamline operations and reduce the overhead of infrastructure management.

Instead of worrying about instance types, cluster sizing, and patching, teams can focus on application performance, deployment strategies, and user experience.

Fargate supports a precise configuration model where you can specify the exact amount of CPU and memory your containers need, ensuring cost efficiency and optimized performance.
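"Exact" here means choosing from a fixed menu of CPU and memory pairs rather than arbitrary values. The sketch below encodes the ECS-on-Fargate task size combinations as I understand them from the AWS documentation at the time of writing; treat the table as an assumption and verify it against the current docs. The names `FARGATE_TASK_SIZES` and `isValidFargateSize` are made up for this example.

```javascript
// Helper: list memory sizes in MiB from minGiB to maxGiB in stepGiB increments.
function gibRange(minGiB, maxGiB, stepGiB) {
  const sizes = [];
  for (let gib = minGiB; gib <= maxGiB; gib += stepGiB) sizes.push(gib * 1024);
  return sizes;
}

// CPU units (1024 units = 1 vCPU) mapped to allowed memory values in MiB.
// Assumption: mirrors the published task size table; check current AWS docs.
const FARGATE_TASK_SIZES = {
  256: [512, 1024, 2048],
  512: gibRange(1, 4, 1),
  1024: gibRange(2, 8, 1),
  2048: gibRange(4, 16, 1),
  4096: gibRange(8, 30, 1),
  8192: gibRange(16, 60, 4),
  16384: gibRange(32, 120, 8),
};

/** True when the CPU/memory pair is one Fargate accepts (per the table above). */
function isValidFargateSize(cpuUnits, memoryMiB) {
  const allowed = FARGATE_TASK_SIZES[cpuUnits];
  return Array.isArray(allowed) && allowed.includes(memoryMiB);
}

console.log(isValidFargateSize(256, 512));  // smallest size
console.log(isValidFargateSize(256, 4096)); // too much memory for 0.25 vCPU
```

A check like this is handy in CI, where an invalid pair would otherwise only surface when the task definition is registered.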

Paired with ECS Service Auto Scaling or Kubernetes autoscalers, capacity follows demand: new tasks or pods start as needed and are stopped when they’re no longer required, with no instances to add or remove. Because you pay only for the vCPU and memory your tasks reserve while they run, Fargate can be more economical than running idle EC2 instances.

Security is another core strength of Fargate. Each task or pod runs in its own isolated compute environment, which enhances workload isolation and security.

Tasks do not share CPU, memory, or network interfaces with one another (only containers within the same task do), which reduces the attack surface and risks associated with multi-tenant environments.

AWS also handles patching the underlying host infrastructure, ensuring it is up-to-date with the latest security enhancements. This managed security model aligns with modern DevSecOps practices and compliance requirements.

Fargate integrates with a wide range of AWS services like Amazon CloudWatch for logging and monitoring, IAM for identity and access control, VPC for networking, and AWS Secrets Manager for sensitive data management.

You can configure networking at the task level using elastic network interfaces (ENIs), which allows you to assign security groups and control access tightly. This deep integration into the AWS ecosystem makes it easy to build highly secure, scalable, and observable containerized applications.

When comparing Fargate to the EC2 launch type in ECS or EKS, the key difference lies in control versus convenience. With EC2, you manage the server instances, which provides more customization but also more complexity.

Fargate, on the other hand, abstracts the infrastructure layer, offering simplicity, faster deployment, and automation.

It is especially well-suited for microservices architectures, event-driven workloads, and applications that need rapid scaling without manual intervention. For developers adopting continuous integration and deployment pipelines (CI/CD), Fargate reduces the friction associated with infrastructure operations.

Another benefit of Fargate is its compatibility with container orchestrators. Whether you prefer ECS, AWS’s native orchestrator, or EKS, which supports Kubernetes workloads, Fargate can run your containers efficiently.

This makes it a flexible solution for organizations that use both platforms or are transitioning between orchestration tools. Fargate also supports container images from Amazon ECR (Elastic Container Registry) or third-party registries like Docker Hub.

Fargate is not without trade-offs. It may have limitations in terms of networking configurations or support for specialized workloads that require custom kernel modules or privileged access.

However, for the vast majority of container-based applications, these limitations are negligible when weighed against the operational simplicity and cost savings. AWS continues to evolve the service, adding support for features like ephemeral storage, larger task sizes, and more integration options.

AWS Fargate brings the serverless paradigm to container workloads, removing the need to manage infrastructure while still offering the scalability, flexibility, and control required by modern cloud-native applications. It empowers teams to innovate faster, deploy more frequently, and maintain high availability and performance without the burden of managing servers.

Key Features

| Feature | Description |
|---|---|
| Serverless | No need to manage servers or clusters. Just define the container, and Fargate handles the rest. |
| On-Demand Scaling | Capacity is provisioned per task, so scaling up or down needs no instance management. |
| Pay-per-use | You pay for the vCPU and memory your task is configured with, for the time it runs. |
| Integrated with ECS & EKS | Works seamlessly with ECS and EKS for container orchestration. |
| Secure by Design | Each Fargate task runs in its own isolated compute environment. |

How It Works

AWS Fargate works by abstracting the server and infrastructure layer from the container deployment process.

When using Amazon ECS or EKS, you define a task (in ECS) or a pod (in EKS), which includes the container image, CPU and memory requirements, networking settings, and IAM roles. Instead of placing this task or pod on an EC2 instance you provisioned, you direct it to Fargate: in ECS by selecting Fargate as the launch type, in EKS by defining a Fargate profile that matches the pod’s namespace and labels.

Once selected, Fargate takes care of all the behind-the-scenes infrastructure setup. It dynamically provisions the right amount of compute resources needed to run your containerized workload.
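For ECS, the pieces named above come together in a task definition. The sketch below builds one as a plain object using field names from the ECS task definition format (`requiresCompatibilities`, `networkMode`, `cpu`, `memory`, `containerDefinitions`). The helper `buildFargateTaskDefinition`, the account ID, role name, and image URL are placeholders invented for illustration, and the object is only constructed here, not registered with AWS.

```javascript
/** Build a minimal ECS task definition object targeting Fargate. */
function buildFargateTaskDefinition({ family, image, cpu, memory, executionRoleArn, containerPort }) {
  return {
    family,
    requiresCompatibilities: ["FARGATE"],
    networkMode: "awsvpc", // Fargate tasks use awsvpc networking (one ENI per task)
    cpu: String(cpu),       // task-level CPU units, expressed as a string
    memory: String(memory), // task-level memory in MiB, expressed as a string
    executionRoleArn,       // role used to pull the image and write logs
    containerDefinitions: [
      {
        name: family,
        image,
        essential: true,
        portMappings: [{ containerPort, protocol: "tcp" }],
      },
    ],
  };
}

// All values below are hypothetical placeholders.
const taskDefinition = buildFargateTaskDefinition({
  family: "web",
  image: "registry.example.com/web:1.0",
  cpu: 256,
  memory: 512,
  executionRoleArn: "arn:aws:iam::123456789012:role/exampleTaskExecutionRole",
  containerPort: 8080,
});
console.log(JSON.stringify(taskDefinition, null, 2));
```

The resulting JSON is roughly what you would pass to the ECS API or CLI when registering a task definition; an EKS pod on Fargate is described with an ordinary Kubernetes pod spec instead.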

When a Fargate task or pod is initiated, AWS automatically allocates an isolated compute environment. This environment includes exactly the amount of CPU and memory you requested, no more and no less. Unlike EC2, where multiple containers might share an instance, Fargate tasks are isolated from each other at the kernel level, improving security and stability.

It also creates a dedicated elastic network interface (ENI) for each task or pod, allowing fine-grained control over networking and access via security groups and VPC settings.

Fargate continuously manages the container lifecycle. It handles task placement, networking, image pulling, logging, and monitoring automatically.

When a task stops, whether its containers crashed or completed, billing for it ends; charges are metered per second with a one-minute minimum, which keeps the pricing model usage-based. Tasks can also be configured to scale automatically based on resource metrics or event triggers, enabling your applications to respond to changing demand without manual intervention.
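A rough cost estimate follows directly from the billing model. The sketch below assumes per-second metering of configured vCPU and memory with a one-minute minimum, which is my understanding of Fargate pricing; the rates in `exampleRates` are illustrative placeholders, not AWS list prices, and `estimateFargateCost` is a name made up for this example.

```javascript
/**
 * Estimate the cost of one task run.
 * Assumption: billed per second on configured vCPU and GiB, minimum 60 seconds.
 */
function estimateFargateCost({ vcpu, memoryGiB, seconds }, rates) {
  const billedSeconds = Math.max(Math.ceil(seconds), 60);
  const hours = billedSeconds / 3600;
  return hours * (vcpu * rates.perVcpuHour + memoryGiB * rates.perGiBHour);
}

// Illustrative rates only; look up the real figures for your region.
const exampleRates = { perVcpuHour: 0.04, perGiBHour: 0.004 };

const oneHour = estimateFargateCost({ vcpu: 0.25, memoryGiB: 0.5, seconds: 3600 }, exampleRates);
const tenSeconds = estimateFargateCost({ vcpu: 0.25, memoryGiB: 0.5, seconds: 10 }, exampleRates);
console.log(oneHour.toFixed(4), tenSeconds.toFixed(6));
```

Note that a ten-second task is charged as if it ran for a full minute, which matters for very short, very frequent jobs.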

Behind the scenes, Fargate uses a highly optimized AWS-managed fleet of servers across multiple availability zones. These servers are kept up-to-date, patched, and monitored by AWS.

This invisible compute layer ensures that customers never need to worry about updates, OS versions, or hardware health. In addition, logs and metrics from Fargate tasks can be sent to services like Amazon CloudWatch, making it easier to monitor and troubleshoot your applications.

Overall, AWS Fargate simplifies the container deployment process by removing the need to manage infrastructure, while still offering fine control over configuration, networking, and scaling. Developers define what their containerized application needs, and Fargate ensures it runs securely, reliably, and efficiently in a fully managed environment.

Benefits

- No infrastructure management: Great for teams that want to focus on application development.

- Efficient resource utilization: Choose precise CPU and memory settings.

- Faster deployment: No need to set up and configure EC2 instances.

- Improved security: Isolated environments for each task/pod.

Fargate vs EC2 Launch Type

| Feature | Fargate | EC2 |
|---|---|---|
| Infrastructure | Fully managed by AWS | You manage the EC2 instances |
| Scalability | Per-task capacity, no instances to scale | Instance capacity scaled manually or via Auto Scaling |
| Billing | Per task, metered per second | Per EC2 instance for as long as it runs, whether or not containers use it |
| Use case | Simpler, dynamic workloads | Complex setups with more control |

Use Cases

- Microservices applications

- Batch processing jobs

- Event-driven architecture (e.g., as a container-based alternative to AWS Lambda)

- APIs and web applications

Summary

AWS Fargate is ideal if you want to run containers without managing servers. It brings the benefits of serverless computing to containerized applications, making it a powerful option for modern cloud-native development.


What is AWS X-Ray? A Beginner’s Guide to Distributed Tracing

What is AWS X-Ray?

AWS X-Ray is a distributed tracing service offered by Amazon Web Services that helps developers analyze, monitor, and debug complex applications, especially those using a microservices or serverless architecture.

As modern applications often rely on many interconnected services (such as API Gateway, Lambda functions, containers, and databases), it becomes difficult to track performance issues or pinpoint the root cause of errors.

AWS X-Ray addresses this challenge by providing end-to-end visibility into requests as they travel through an application.

When a user sends a request, AWS X-Ray can trace it from start to finish, capturing detailed data about each component it passes through.

This data includes response times, exceptions, latency, and downstream calls. X-Ray organizes this information into a “trace,” which is made up of “segments” representing each service the request touches.

Each segment can have “subsegments,” offering finer granularity into specific tasks like a database query or an external API call. With these insights, developers can identify bottlenecks, detect slow operations, and understand dependencies.
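The trace, segment, and subsegment hierarchy is easy to picture as nested data. The sketch below is a simplified model, not X-Ray's actual segment document format: the field names, service names, and timings are invented, and `slowestOperations` is a hypothetical helper that flattens the hierarchy to find where the time went.

```javascript
// Simplified, hypothetical model of one trace.
const trace = {
  id: "trace-1",
  segments: [
    { name: "api-gateway", durationMs: 12, subsegments: [] },
    {
      name: "orders-lambda",
      durationMs: 180,
      subsegments: [
        { name: "dynamodb:GetItem", durationMs: 35 },
        { name: "https://payments.example.com", durationMs: 120 },
      ],
    },
  ],
};

/** Flatten segments and subsegments, then return the slowest operations. */
function slowestOperations(t, limit = 3) {
  const ops = [];
  for (const segment of t.segments) {
    ops.push({ name: segment.name, durationMs: segment.durationMs, level: "segment" });
    for (const sub of segment.subsegments) {
      ops.push({ name: `${segment.name} > ${sub.name}`, durationMs: sub.durationMs, level: "subsegment" });
    }
  }
  return ops.sort((a, b) => b.durationMs - a.durationMs).slice(0, limit);
}

const slowest = slowestOperations(trace);
console.log(slowest.map((op) => `${op.name}: ${op.durationMs} ms`));
```

Here the Lambda segment dominates, and its subsegments show that most of that time is the outbound payment call rather than the database query.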

One of the most powerful features of X-Ray is the Service Map, a visual representation of how services are connected within your app.

This map lets you drill down into specific traces and examine the flow of data, making it easier to troubleshoot issues in real time or after they occur. Whether you’re using EC2, ECS, Lambda, or other AWS services, X-Ray integrates seamlessly, allowing you to start tracing with minimal setup.

Developers can add AWS X-Ray SDKs to their code, available in languages like Node.js, Java, Python, .NET, and Go.

These SDKs intercept HTTP calls, AWS SDK calls, and custom logic, and forward the data to the X-Ray service. The trace data can be filtered, queried, and viewed directly in the AWS Management Console.

To control overhead, X-Ray uses sampling, which lets you trace a percentage of incoming requests rather than all of them. This helps in reducing cost and performance impact, while still providing a representative view of your application’s behavior.

Additionally, annotations and metadata can be added to traces for custom filtering and more precise analysis.

X-Ray is particularly valuable in serverless applications where traditional monitoring tools struggle to correlate requests across multiple AWS services.

With X-Ray, you can trace a single user request as it triggers an API Gateway endpoint, invokes a Lambda function, queries DynamoDB, and returns a response, all within one visual trace. This makes troubleshooting and optimizing serverless workflows significantly easier.

Whether your application is experiencing intermittent slowdowns, unexplained errors, or simply needs deeper insight into how its components interact, AWS X-Ray offers the tools to gain clarity.

It’s designed not just for troubleshooting, but also for performance tuning and architectural understanding. With minimal changes to your code and support for many AWS services out of the box, X-Ray makes it easier to build reliable, scalable, and observable applications in the cloud.

AWS X-Ray is your window into the inner workings of distributed systems on AWS. It’s a vital tool for DevOps engineers, backend developers, and system architects who need to understand how their applications behave in real-world conditions.

With features like traces, service maps, sampling, annotations, and native AWS integrations, X-Ray simplifies monitoring and debugging in even the most complex cloud-native environments.

Why Use AWS X-Ray?

AWS X-Ray is a powerful tool for developers and DevOps teams who need to gain deep visibility into the inner workings of distributed applications, especially in microservices and serverless environments.

As modern cloud-native applications often rely on numerous services and infrastructure components, diagnosing issues or tracking the journey of a single request becomes a complex task.

X-Ray solves this challenge by providing end-to-end tracing, allowing you to monitor how data flows through your system, where it slows down, and where it fails.

One of the primary reasons to use AWS X-Ray is its ability to pinpoint performance bottlenecks. It highlights latency issues across different components like databases, external APIs, or internal services.

You can see exactly which part of your application is taking longer than expected and take action accordingly. This saves hours of manual investigation and helps maintain a smooth user experience.

Another major benefit is error detection and troubleshooting. X-Ray captures faults and exceptions automatically, displaying stack traces and error codes. This allows developers to identify bugs, misconfigurations, or downstream failures without needing to reproduce the issue manually.

X-Ray’s Service Map is another compelling reason to use it. It provides a visual layout of how your services interact, making it easier to understand your application’s architecture at a glance. This is especially useful when dealing with new or evolving systems, as it reveals all upstream and downstream dependencies clearly.

In addition, X-Ray supports custom annotations and metadata, giving you the flexibility to tag requests with business-relevant data like user IDs, order numbers, or customer segments. This allows you to filter traces by meaningful context and debug issues more effectively.

X-Ray also integrates natively with many AWS services including Lambda, EC2, ECS, API Gateway, DynamoDB, and more, so you don’t need to install or configure third-party tools for basic monitoring. You simply enable tracing in the service console or SDK, and X-Ray begins collecting data.

With sampling features, X-Ray avoids overwhelming your system by tracing only a percentage of requests while still providing a representative view of system behavior. This keeps performance impact and cost low, making it scalable for high-throughput applications.

Using AWS X-Ray also aligns well with DevOps and observability best practices, giving teams a unified, real-time view into system behavior. It helps catch early signs of trouble, supports root cause analysis, and promotes faster incident resolution.

Ultimately, AWS X-Ray enhances the reliability, performance, and maintainability of applications by offering a clear, detailed view into how every part of your system behaves under real workloads. It is a must-have tool for anyone operating in dynamic AWS environments.

Key Features of AWS X-Ray

| Feature | Description |
|---|---|
| Traces | A record of a single request as it travels through your app |
| Segments | Information about each individual service or component involved in the trace |
| Subsegments | Finer-grained details within a segment (e.g., a specific database call) |
| Annotations & Metadata | Custom data you can add to traces for filtering and analysis |
| Service Map | A visual graph showing all the services your app interacts with |
| Sampling | Controls how many requests are traced to manage overhead |

How AWS X-Ray Works

AWS X-Ray works by collecting data about requests as they flow through your application, and then piecing that data together to create a complete picture of performance, behavior, and dependencies.

It begins with instrumenting your application code using the AWS X-Ray SDK or enabling tracing in AWS services like Lambda, API Gateway, ECS, or EC2. Once enabled, X-Ray begins capturing trace data for incoming requests.

Each request generates a trace, which is composed of one or more segments, each representing a specific component or service handling part of the request.

For example, when a user makes a request to your application, X-Ray can trace the journey from an API Gateway to a Lambda function, then to a database like DynamoDB.

Each of these steps creates a segment, and each segment can have subsegments that dive deeper into operations such as function calls, SQL queries, or outbound HTTP requests. These segments and subsegments are timestamped and annotated with metadata, performance metrics, and error information.

The trace data is first sent to an X-Ray daemon or agent, which acts as a local collector. The daemon buffers and batches the trace data before sending it to the AWS X-Ray service. This helps reduce network overhead and ensures efficient data transfer. In some environments, such as Lambda, the agent is managed automatically.
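What the SDK hands to the daemon can be sketched as follows. The trace ID layout (version, 8 hex digits of epoch seconds, 24 random hex digits) and the one-line JSON header in front of the segment reflect the documented daemon protocol as I understand it; the helper names are hypothetical, `Math.random` stands in for the stronger randomness a real SDK would use, and nothing is actually sent over the network here.

```javascript
/** Produce `digits` random hexadecimal characters (sketch-quality randomness). */
function randomHex(digits) {
  let out = "";
  for (let i = 0; i < digits; i++) out += Math.floor(Math.random() * 16).toString(16);
  return out;
}

/** Trace ID: "1-" + 8 hex digits of epoch seconds + "-" + 24 random hex digits. */
function newTraceId(nowMs = Date.now()) {
  const epochHex = Math.floor(nowMs / 1000).toString(16).padStart(8, "0");
  return `1-${epochHex}-${randomHex(24)}`;
}

/** Build a minimal segment document; times are epoch seconds with fractions. */
function buildSegment(name, startMs, endMs) {
  return {
    name,
    id: randomHex(16),
    trace_id: newTraceId(startMs),
    start_time: startMs / 1000,
    end_time: endMs / 1000,
  };
}

/** Payload for the daemon: a header line, a newline, then the segment JSON. */
function toDaemonDatagram(segment) {
  return `{"format": "json", "version": 1}\n${JSON.stringify(segment)}`;
}

const segment = buildSegment("MyApp", Date.now() - 42, Date.now());
const datagram = toDaemonDatagram(segment);
console.log(datagram);
```

In a real setup this payload would go over UDP to the daemon (by default listening on port 2000 on the local host), which then batches segments and forwards them to the X-Ray service.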

Once the trace data reaches the X-Ray service, it is aggregated, processed, and visualized in the AWS Management Console. There, you can see detailed trace timelines, which show how much time each component spent processing a request.

You can also view the Service Map, a real-time visual representation of how your services interact and where latency or errors are occurring.

X-Ray supports sampling, which helps control the amount of trace data collected by only recording a subset of requests. This keeps costs down and avoids slowing your system while still giving meaningful insights. Developers can also add annotations and metadata to traces to provide business context, like tagging requests with customer IDs or transaction types, which makes filtering and debugging easier.
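The sampling behavior can be approximated in a few lines. As I understand the SDK default, the first request each second is always recorded and five percent of additional requests are recorded after that. The sketch below implements that reservoir-plus-fixed-rate idea locally; `createSampler` and its options are hypothetical and do not reflect how the SDK coordinates sampling rules with the X-Ray service.

```javascript
/**
 * Create a sampling decision function.
 * - reservoirPerSecond: requests always sampled in each one-second window
 * - fixedRate: probability of sampling once the reservoir is used up
 * - random: injectable random source, useful for deterministic tests
 */
function createSampler({ reservoirPerSecond = 1, fixedRate = 0.05, random = Math.random } = {}) {
  let currentSecond = -1;
  let usedThisSecond = 0;
  return function shouldSample(nowMs) {
    const second = Math.floor(nowMs / 1000);
    if (second !== currentSecond) {
      currentSecond = second; // new window: refill the reservoir
      usedThisSecond = 0;
    }
    if (usedThisSecond < reservoirPerSecond) {
      usedThisSecond += 1;
      return true;
    }
    return random() < fixedRate;
  };
}

// Deterministic demo: a random source that never falls under the 5% threshold.
const shouldSample = createSampler({ random: () => 0.5 });
const decisions = [shouldSample(1000), shouldSample(1500), shouldSample(2000)];
console.log(decisions);
```

The reservoir guarantees that quiet services still produce at least some traces, while the fixed rate caps the volume from busy ones.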

In short, AWS X-Ray works by tracing requests across your application’s components, collecting detailed timing and error information, and then stitching it all together into actionable insights.

It helps developers detect problems, optimize performance, and gain a deep understanding of how services communicate and respond under load.

Whether you’re working with a few services or a complex microservices architecture, X-Ray gives you the tools to monitor, debug, and improve your system with minimal setup.

What Can You Trace?

- AWS Lambda functions

- Amazon EC2 instances

- Amazon ECS containers

- Amazon API Gateway requests

- DynamoDB, RDS, S3, SNS, SQS, etc.

Getting Started with AWS X-Ray

1. Enable X-Ray in Your AWS Resources

Most AWS services like Lambda and API Gateway have built-in support.

2. Install the X-Ray SDK

For example, in Node.js:

    npm install aws-xray-sdk

3. Instrument Your Code

    const AWSXRay = require('aws-xray-sdk');
    const express = require('express');

    const app = express();

    // Trace outgoing HTTP/HTTPS calls made through the core http module
    AWSXRay.captureHTTPsGlobal(require('http'));

    // Open a segment named 'MyApp' for every incoming request
    app.use(AWSXRay.express.openSegment('MyApp'));

    app.get('/', function (req, res) {
      res.send('Hello, X-Ray!');
    });

    // Close the segment after the routes have run
    app.use(AWSXRay.express.closeSegment());

    app.listen(3000);

Example: X-Ray Service Map

Imagine your app has:

- An API Gateway

- A Lambda function

- A DynamoDB table

X-Ray can show a service map like:

Client → API Gateway → Lambda → DynamoDB

Each arrow is clickable and shows detailed trace data.

Benefits of Using AWS X-Ray

- Quickly find performance issues

- See how services interact

- Diagnose errors and faults

- Improve observability in microservices environments

- Supports real-time debugging

Final Thoughts

AWS X-Ray is an essential tool for any AWS-based application, especially when working with serverless, microservices, or complex distributed systems. It helps answer the crucial question:

“What happened to my request?”


Conclusion

AWS X-Ray is a powerful and essential tool for monitoring, debugging, and optimizing modern cloud applications. As systems grow more complex, often involving multiple microservices, serverless functions, and third-party integrations, traditional debugging methods fall short.

X-Ray fills this gap by providing end-to-end request tracing, detailed performance insights, and a clear visual map of how services interact.

By automatically collecting trace data and organizing it into meaningful segments, X-Ray helps developers quickly identify bottlenecks, trace errors, and understand system behavior in real time.

Its native integration with AWS services, support for multiple programming languages, and customizable features like annotations and sampling make it flexible and scalable for applications of any size.

Ultimately, AWS X-Ray enhances observability, reduces troubleshooting time, and improves the reliability and performance of your applications.

Whether you’re building new cloud-native systems or managing existing ones, X-Ray empowers you to make smarter, faster decisions with greater visibility into every request.

The Hidden Costs of DockerHub: When Free Isn’t Really Free

Introduction

In the world of software development, the word “free” often feels like a gift: a no-brainer choice that saves time and money.

DockerHub, as the most popular container image registry, has long been that gift for millions of developers and organizations.

Its free tier offers quick access to a vast repository of container images and promises seamless integration into any workflow. But as more teams rely on DockerHub, the cracks beneath the surface start to show.

Rate limits quietly throttle your CI/CD pipelines, causing unexpected build failures and deployment delays. Public images, while convenient, carry security risks that often go unnoticed until it’s too late.

The lack of uptime guarantees and support means you’re on your own when things go wrong. What looks like a free service begins to demand hidden payments in developer time, troubleshooting headaches, and lost productivity.

Over time, these costs add up, turning what seemed like a free tool into a source of technical debt and operational risk. For teams scaling their container strategy, understanding these hidden costs is critical. Because when “free” breaks your build or jeopardizes your security, it’s no longer free at all.

1. Rate Limits Can Break Your CI/CD

DockerHub enforces strict rate limits on image pulls for anonymous and free-tier users: up to 100 pulls per 6 hours per IP address for unauthenticated users, and 200 pulls per 6 hours for authenticated free accounts. This sounds sufficient until it’s not.

If your CI/CD pipeline depends on multiple containers or microservices, or is shared across a team, you’ll quickly find those limits aren’t generous; they’re disruptive. Builds fail. Deployments stall. Engineers scramble to debug what turns out to be… a pull limit.
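A rough way to see how quickly a shared pipeline reaches these limits is to multiply it out. The sketch below is illustrative only: the build counts, the function names, and the assumption that every build pulls every image without a cache are hypothetical, while the two limits are the figures quoted above.

```python
# Pull limits quoted above (pulls per 6-hour window).
ANONYMOUS_LIMIT = 100
AUTHENTICATED_FREE_LIMIT = 200


def pulls_in_window(builds_per_hour: float, images_per_build: int, window_hours: int = 6) -> float:
    """Estimate image pulls in one rate-limit window, assuming no layer cache or mirror."""
    return builds_per_hour * images_per_build * window_hours


def exceeds_limit(pulls: float, limit: int) -> bool:
    """True when the estimated pulls are above the limit for the window."""
    return pulls > limit


# Hypothetical team: 5 builds per hour, each pulling 8 images, all behind one shared IP.
estimated = pulls_in_window(builds_per_hour=5, images_per_build=8)
print(estimated, exceeds_limit(estimated, ANONYMOUS_LIMIT), exceeds_limit(estimated, AUTHENTICATED_FREE_LIMIT))
```

Even this modest hypothetical workload lands above both limits, which is why shared runners tend to hit the wall first.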

2. Security Risks of Public Images

Many developers rely on public images without auditing them. The convenience of docker pull somecool/image:latest masks the risk of using unverified, potentially outdated or even malicious containers.

Free tiers encourage minimal oversight. But when vulnerabilities arise, who takes responsibility for securing these images? Spoiler: you do.
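One lightweight guard is to flag image references that are not pinned to a content digest before they reach a build. The following is a minimal sketch using plain string handling; the function names are hypothetical and it does not contact any registry or verify that the digest exists.

```python
def is_pinned_by_digest(image_ref: str) -> bool:
    """True when the reference includes a content digest (name@sha256:...)."""
    return "@sha256:" in image_ref


def uses_floating_tag(image_ref: str) -> bool:
    """True when the reference has no digest and either no tag or the 'latest' tag."""
    if is_pinned_by_digest(image_ref):
        return False
    # The tag, if any, follows a ':' in the last path segment (an earlier ':' is a registry port).
    last_segment = image_ref.rsplit("/", 1)[-1]
    if ":" not in last_segment:
        return True  # no tag means Docker defaults to 'latest'
    return last_segment.rsplit(":", 1)[1] == "latest"


print(uses_floating_tag("somecool/image:latest"))
```

A version tag such as `1.4.2` passes this check but can still be re-pushed by its publisher, so a digest is the stricter option when reproducibility matters.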

3. No SLA for Uptime or Performance

Free-tier users get zero guarantees when it comes to uptime, performance, or support. If DockerHub goes down or slows to a crawl during your release window, there’s no one to call. You’re left in the dark.

4. Hidden Developer Time Costs

Ever spent half a day debugging a mysterious CI failure only to trace it back to a DockerHub rate limit? The “free” service just cost your team 4+ hours of developer time. Multiply that over weeks or months, and you’re looking at real, expensive inefficiencies.

5. Vendor Lock-In and Migration Pains

Because DockerHub is so deeply integrated into many workflows, moving away can be painful. Switching to another registry (like AWS ECR, GitHub Container Registry, or self-hosted Harbor) requires planning, credential management, and image migration, often during your already-busy release cycles.

So What’s the Alternative?

If you’re using DockerHub just because it’s familiar and “free,” consider this your wake-up call. Evaluate these alternatives based on your real needs:

- GitHub Container Registry (GHCR): Tightly integrated with GitHub Actions and supports private repos even on free tiers.

- AWS/GCP/Azure registries: Great for teams already living in those ecosystems. Offer better authentication and security controls.

- Self-hosted registries: Harbor, JFrog Artifactory, or even simple S3-backed registries can offer more control at lower cost over time.

- Image proxies & caching: Tools like Dragonfly or registry mirror setups can help cache DockerHub images locally and reduce rate-limit risk.
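For the mirror option in the list above, the Docker daemon reads a `registry-mirrors` setting from its `daemon.json` file. The sketch below only generates that fragment; the mirror URL is a hypothetical placeholder, and how the mirror itself is run is outside its scope.

```python
import json


def daemon_config_with_mirror(mirror_url: str) -> str:
    """Return daemon.json content that points the Docker daemon at a pull-through mirror."""
    config = {"registry-mirrors": [mirror_url]}
    return json.dumps(config, indent=2)


# Hypothetical internal mirror address.
print(daemon_config_with_mirror("https://mirror.example.internal"))
```

On Linux this file usually lives at `/etc/docker/daemon.json`, and the daemon needs a restart to pick up the change.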

Conclusion: Pay Now or Pay Later

DockerHub’s free tier is a great starting point. But at scale, “free” becomes a technical debt you’re constantly paying off in outages, developer frustration, or security risks.

So ask yourself: Is “free” still worth it when it costs your team time, stability, and control?

The best infrastructure decisions are rarely about cost alone; they’re about value. And in 2025’s world of secure, reproducible, and scalable DevOps, relying on the “default free” may no longer be the wise choice.

What is MLOps? A Beginner’s Guide to Machine Learning Operations.

What is MLOps?

MLOps, short for Machine Learning Operations, is a discipline that combines machine learning (ML) with DevOps practices to streamline and automate the lifecycle of machine learning models.

As machine learning continues to be integrated into modern software systems, managing models at scale becomes increasingly complex. MLOps addresses this challenge by applying automation, monitoring, and collaboration practices to ML workflows.

The core goal of MLOps is to bridge the gap between data science and production environments, ensuring that machine learning models are developed, deployed, and maintained reliably and efficiently.

Traditional ML projects often struggle to move from research environments into production due to lack of reproducibility, poor collaboration, or manual workflows.

MLOps introduces best practices to standardize and automate key processes like model training, evaluation, deployment, and monitoring. It encourages a collaborative culture between data scientists, machine learning engineers, DevOps professionals, and business stakeholders.

By incorporating version control, CI/CD pipelines, and infrastructure as code, MLOps supports the reproducible development and deployment of ML models.

A typical MLOps workflow starts with data management, where data is collected, cleaned, labeled, and versioned. From there, models are trained and validated in experimental environments, using tools like MLflow or Weights & Biases to track performance and parameters.

These trained models then move through testing and validation stages to ensure they meet business and technical requirements. Once validated, models are deployed to production using containerization tools like Docker or model servers like TensorFlow Serving or BentoML.

Once in production, monitoring becomes critical. MLOps involves tracking model performance in real time and detecting problems like data drift, concept drift, or model degradation. If performance drops, MLOps systems can trigger automated retraining pipelines using fresh data.
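To make data drift concrete, one common measure is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample and in live traffic. This is a minimal standard-library sketch, not how any particular monitoring tool computes it; the bin count and the small floor for empty buckets are assumptions.

```python
import math
from typing import Sequence


def population_stability_index(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """PSI between a reference sample and a live sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all reference values are equal

    def bucket_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values into edge bins
        # A small floor keeps empty buckets from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = bucket_shares(expected), bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


reference = [i / 100 for i in range(100)]
shifted = [x + 0.5 for x in reference]
print(population_stability_index(reference, reference), population_stability_index(reference, shifted))
```

A frequently cited rule of thumb treats a PSI above roughly 0.2 as a shift worth investigating, though the right threshold depends on the feature and the model.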

This ongoing lifecycle, often referred to as continuous training, ensures that models remain accurate and relevant over time. It also supports model rollback, A/B testing, and canary deployments, which help reduce risk in production environments.
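A canary deployment can be sketched as a deterministic traffic split: a small, stable share of requests goes to the new model while the rest stay on the current one. The function below is a hypothetical illustration that hashes a request or user ID; real serving platforms usually provide their own traffic-splitting controls.

```python
import hashlib


def route_request(request_id: str, canary_percent: int) -> str:
    """Deterministically send roughly canary_percent% of IDs to the canary model."""
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in [0, 100)
    return "canary" if bucket < canary_percent else "stable"


print(route_request("user-42", canary_percent=10))
```

Hashing the ID rather than drawing a random number means the same user always sees the same model version, which keeps comparisons between the two groups clean.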

MLOps emphasizes automation, aiming to reduce the manual effort needed to keep models functioning effectively. CI/CD pipelines, inspired by software engineering, are used to test, validate, and deploy models in a consistent and automated way.

These pipelines ensure that changes to the model, data, or code are immediately tested and integrated, reducing the risk of unexpected failures. Teams can also use infrastructure-as-code tools like Terraform, alongside orchestration platforms like Kubernetes, to define and manage the environments where ML workloads run.
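One step such a pipeline typically automates is a promotion gate: a candidate model is deployed only if it clears minimum metric values and does not regress against the model already in production. The sketch below assumes metrics arrive as plain dictionaries; the metric names, thresholds, and function name are hypothetical.

```python
from typing import Mapping


def failed_checks(candidate: Mapping[str, float], production: Mapping[str, float],
                  minimums: Mapping[str, float], max_regression: float = 0.01) -> list[str]:
    """Return the reasons a candidate model should not be promoted (empty list means pass)."""
    reasons = []
    for metric, floor in minimums.items():
        value = candidate.get(metric)
        if value is None:
            reasons.append(f"{metric}: missing")
            continue
        if value < floor:
            reasons.append(f"{metric}: {value:.3f} below minimum {floor:.3f}")
        baseline = production.get(metric)
        if baseline is not None and value < baseline - max_regression:
            reasons.append(f"{metric}: regressed from {baseline:.3f}")
    return reasons


print(failed_checks({"accuracy": 0.85}, {"accuracy": 0.92}, {"accuracy": 0.90}))
```

A CI job could fail the build whenever the returned list is non-empty, so the reasons show up directly in the pipeline log.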

Security and compliance are also essential elements of MLOps. Organizations must track which data was used, how models were trained, and who made changes, especially in regulated industries like finance or healthcare.

MLOps tools provide audit logs, model registries, and data versioning systems that help ensure transparency and traceability.

One of the biggest benefits of MLOps is scalability. It allows organizations to manage many models across teams, projects, or regions without losing visibility or control.

Whether it’s a recommendation engine, a fraud detection model, or a customer support chatbot, MLOps ensures each model is properly maintained, updated, and monitored.

As machine learning becomes more deeply embedded into products and services, MLOps is no longer optional; it’s a core requirement. It brings together data science creativity with engineering discipline, making sure that machine learning delivers real business value consistently.

In short, MLOps transforms ML from experimental notebooks into production-ready, enterprise-scale, and long-lived systems.

Why is MLOps Important?

MLOps is important because it solves one of the most pressing challenges in machine learning today: operationalizing ML models at scale. While building a machine learning model in a notebook might take days or weeks, deploying that model into a reliable, secure, and scalable production environment, and keeping it functioning over time, is far more complex.

MLOps addresses this gap by providing the tools, practices, and workflows necessary to take models from development to real-world use. Without MLOps, organizations often face failed ML projects, long delays between model development and deployment, and models that perform poorly once in production.

MLOps helps ensure that machine learning initiatives deliver actual business value and not just promising experiments.

The traditional data science workflow is highly manual and often disconnected from the larger software engineering and infrastructure ecosystem.

Data scientists focus on building models, but not necessarily on the engineering, testing, deployment, and monitoring required for a model to succeed in the real world.

This leads to problems like inconsistent environments, lack of reproducibility, poor version control, and inability to monitor model performance over time.

MLOps brings structure, repeatability, and automation to this process, ensuring that models are not just trained well but deployed and maintained correctly, too.

A critical reason why MLOps is important is that models degrade over time. Real-world data changes. Customer behavior shifts. External conditions evolve.

As a result, a model that performs well today may fail tomorrow, a problem known as model drift. MLOps provides the monitoring tools and alerting systems needed to detect this drift and trigger automated retraining workflows, ensuring models remain accurate and trustworthy over time.
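A retraining trigger can be as simple as watching accuracy over the most recent labelled predictions. The class below is a hypothetical sketch: the window size and threshold are assumptions, and it presumes ground-truth labels eventually arrive for production predictions.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent labelled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9):
        self.outcomes = deque(maxlen=window)  # True for a correct prediction
        self.threshold = threshold

    def record(self, predicted, actual) -> None:
        self.outcomes.append(predicted == actual)

    def needs_retraining(self) -> bool:
        """True once the window is full and rolling accuracy is below the threshold."""
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return sum(self.outcomes) / len(self.outcomes) < self.threshold


monitor = AccuracyMonitor(window=4, threshold=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.needs_retraining())
```

Waiting for a full window avoids firing on the first few predictions, at the cost of reacting a little later to a sudden drop.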

This ongoing lifecycle management is key to maintaining model reliability in dynamic environments.

Another major advantage of MLOps is its support for collaboration. It allows data scientists, ML engineers, software developers, and operations teams to work together using shared tools and processes. For example, code and models can be versioned in Git, model performance can be tracked in MLflow, and deployment pipelines can be automated with CI/CD tools.

This cross-functional collaboration results in faster development cycles, fewer deployment errors, and more robust models. It also reduces friction between teams, turning machine learning into a shared responsibility rather than a siloed task.

Scalability is another reason MLOps matters. As companies grow, so does the number of machine learning models in use. Without MLOps, maintaining a few models is doable, but managing dozens or hundreds of models across different departments and environments becomes overwhelming.

MLOps provides frameworks and tools to scale ML systems, track model versions, manage dependencies, and orchestrate training and deployment workflows automatically. It makes ML manageable at enterprise scale.

In regulated industries such as healthcare, finance, and insurance, compliance and auditability are essential. Organizations must prove how decisions were made, which data was used, and whether models were biased.

MLOps offers the traceability and transparency needed for regulatory compliance. It enables version tracking for datasets, training code, models, and model artifacts, making it possible to reproduce results months or even years later, which is critical in legal or regulated settings.
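The core of this kind of traceability is recording exactly which inputs produced a model. The sketch below builds a lineage record from content hashes using only the standard library; the field names are hypothetical, and dedicated tools such as DVC or a model registry store richer metadata than this.

```python
import hashlib
import json


def fingerprint(data: bytes) -> str:
    """SHA-256 content hash, so identical bytes always map to the same identifier."""
    return hashlib.sha256(data).hexdigest()


def lineage_record(dataset: bytes, training_code: bytes, params: dict) -> dict:
    """Bundle the hashes and hyperparameters needed to trace a model back to its inputs."""
    return {
        "dataset_sha256": fingerprint(dataset),
        "code_sha256": fingerprint(training_code),
        "params": params,
        # Hash of the canonical JSON form, so key order does not change the result.
        "params_sha256": fingerprint(json.dumps(params, sort_keys=True).encode("utf-8")),
    }


record = lineage_record(b"id,label\n1,0\n", b"print('train')", {"lr": 0.01, "epochs": 5})
print(json.dumps(record, indent=2))
```

If a later audit produces a different dataset hash, the model in question was not trained on the data being presented.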

MLOps also reduces time to market for ML solutions. By automating repetitive tasks like testing, deployment, monitoring, and retraining, teams can iterate faster, deploy quicker, and respond to business needs in real time.

Instead of taking weeks or months to deploy a new model, MLOps can reduce that process to hours or days. This agility is crucial in competitive industries where data-driven decisions must be made quickly.

Moreover, MLOps promotes resilience and fault tolerance. Production systems often experience failures, from hardware outages to data pipeline errors.

With proper MLOps practices, such as automated rollbacks, containerization, and continuous monitoring, systems can detect problems early and recover gracefully. This leads to more stable production environments and better end-user experiences.

Finally, MLOps ensures that machine learning doesn’t become a one-off project, but rather a continuous capability. It transforms ML from a research activity into a reliable part of software development and business operations.

Just like DevOps changed how software is built and deployed, MLOps is reshaping how machine learning is scaled, governed, and embedded into real-world applications.

MLOps is important because it turns machine learning theory into operational reality. It makes ML systems scalable, reliable, auditable, and effective, allowing organizations to gain real and sustained value from their machine learning investments.

Without it, ML projects are likely to remain stuck in development, deliver poor results, or even fail entirely.

Key Components of MLOps

Here are the core components and steps involved in MLOps:

- Data Management
  - Data collection, labeling, validation
  - Versioning datasets (e.g., with DVC)

- Model Development
  - Training models using frameworks like TensorFlow, PyTorch, or Scikit-learn
  - Experiment tracking (e.g., MLflow, Weights & Biases)

- Model Validation
  - Evaluating model performance using metrics (accuracy, precision, recall, etc.)
  - Unit testing and integration testing for ML code

- Model Deployment
  - Serving models using APIs (e.g., FastAPI, Flask)
  - Using platforms like AWS SageMaker, Azure ML, or Google Vertex AI

- Continuous Integration / Continuous Deployment (CI/CD)
  - Automating ML pipelines using tools like GitHub Actions, Jenkins, or GitLab CI
  - Ensuring every model update goes through automated testing and deployment

- Monitoring and Logging
  - Monitoring model performance in production
  - Detecting concept drift, data drift, or performance degradation

- Model Retraining and Lifecycle Management
  - Automating model retraining with new data
  - Managing different versions of models in production
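As a small illustration of the Model Validation component above, the three metrics it names can be computed directly from predictions. This sketch assumes binary labels encoded as 0 and 1; in practice a library such as scikit-learn would normally be used instead.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision and recall for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }


print(classification_metrics([1, 0, 1, 1, 0, 0, 1, 0], [1, 0, 0, 1, 1, 0, 1, 0]))
```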

MLOps Tools

- Version Control: Git, DVC

- Experiment Tracking: MLflow, Weights & Biases

- Pipeline Automation: Kubeflow, Airflow, Metaflow

- Model Serving: TensorFlow Serving, TorchServe, BentoML

- Monitoring: Prometheus, Grafana, Evidently AI

Benefits of MLOps

- Faster time to production for ML models

- Better collaboration between data science and engineering teams

- Improved model performance and reliability

- Reproducibility and auditability for compliance and debugging

MLOps in Practice: Example Workflow

- Data scientist trains a model and tracks experiments with MLflow

- Model is saved and versioned using DVC and Git

- CI/CD pipeline tests and deploys the model to a cloud API

- Monitoring tools track real-time model accuracy and flag anomalies

- A scheduled pipeline retrains the model weekly with new data

Final Thoughts.

MLOps is essential for turning machine learning prototypes into scalable, reliable production systems. As organizations increasingly rely on ML for business decisions, MLOps ensures models are not only built correctly but also maintained efficiently over time.

Conclusion.

MLOps is a crucial practice that bridges the gap between data science experimentation and real-world machine learning deployment. It ensures that ML models are not only built efficiently but also deployed, monitored, and maintained with consistency and scalability.

For beginners, understanding MLOps means recognizing that machine learning is not just about algorithms; it’s about the entire lifecycle of getting models into production and keeping them running effectively.

By combining best practices from machine learning, DevOps, and software engineering, MLOps brings structure, automation, and reliability to ML workflows. It helps teams collaborate better, reduce operational risks, and respond faster to changing data and business needs.

As machine learning becomes a foundational technology in every industry, mastering MLOps is essential for building models that truly deliver long-term value in production environments.

In short, MLOps transforms machine learning from isolated experiments into production-ready, scalable systems, making it a key enabler of successful, real-world AI applications.

What is AWS Config? A Beginner’s Guide to Compliance & Security.

What is AWS Config?

AWS Config is a fully managed service provided by Amazon Web Services (AWS) that enables you to assess, audit, and evaluate the configurations of your AWS resources. It plays a critical role in ensuring compliance and security by offering continuous monitoring and recording of resource configurations, and it helps identify changes, trends, and potential misconfigurations over time.

AWS Config provides a clear picture of your AWS environment by maintaining a detailed inventory of your resources and their current and historical configurations.

The service automatically records the configurations of supported AWS resources such as EC2 instances, S3 buckets, IAM roles, security groups, Lambda functions, and more.

Each time a resource is created, modified, or deleted, AWS Config captures the details and stores them as configuration items. These records help you understand what your environment looked like at any given point in time and allow for easy troubleshooting and auditing.
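Conceptually, comparing two recorded states of the same resource is a dictionary diff. The sketch below uses made-up, simplified snapshots rather than the actual configuration item schema, and only compares top-level settings.

```python
def diff_configuration(before: dict, after: dict) -> dict:
    """Return {key: (old, new)} for every top-level setting that was added, removed or changed."""
    changes = {}
    for key in sorted(before.keys() | after.keys()):
        old, new = before.get(key), after.get(key)
        if old != new:
            changes[key] = (old, new)
    return changes


# Hypothetical snapshots of one EC2 instance at two points in time.
before = {"instanceType": "t3.micro", "monitoring": "disabled"}
after = {"instanceType": "t3.large", "monitoring": "disabled", "ebsOptimized": True}
print(diff_configuration(before, after))
```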

One of AWS Config’s most powerful features is its ability to evaluate your resources against compliance rules. These rules can be AWS-managed or custom-defined to meet your specific security and operational requirements.

For example, you can use rules to check whether S3 buckets have server-side encryption enabled, whether IAM passwords meet complexity requirements, or whether EC2 volumes are attached properly. Based on these evaluations, AWS Config marks resources as compliant or noncompliant, which helps identify areas that need attention.

In addition to resource recording and compliance checking, AWS Config allows you to visualize resource relationships and dependency mappings.

This means you can see how one resource is connected to another, making it easier to assess the impact of changes and understand the architecture of your cloud environment. This kind of visibility is crucial for managing risk, resolving incidents, and preparing for audits.

AWS Config also integrates with AWS Organizations, allowing you to aggregate compliance data across multiple accounts and regions.

This centralized view simplifies governance and makes it easier to enforce consistent policies throughout your organization. Whether you’re operating in a single-account or multi-account setup, AWS Config gives you control and insight into every layer of your infrastructure.

Change notifications are another key capability. AWS Config can alert you in real time when a resource changes or drifts from its intended state.

This enables you to act quickly on unauthorized changes, potential security issues, or configuration drift that could lead to performance or compliance problems. These notifications can be delivered via Amazon SNS, integrated into CloudWatch, or processed by automation workflows using Lambda functions.
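A Lambda function or other subscriber typically turns each notification into something a human can act on. The sketch below parses a simplified message; the field names follow the general shape of AWS Config notifications but should be treated as assumptions and checked against real payloads before use.

```python
import json


def summarize_notification(raw_message: str) -> str:
    """Turn one notification (JSON string) into a short line for a chat channel or ticket."""
    message = json.loads(raw_message)
    kind = message.get("messageType", "Unknown")
    if kind == "ComplianceChangeNotification":
        result = message.get("newEvaluationResult", {}).get("complianceType", "UNKNOWN")
        return f"{message.get('resourceId', '?')} is now {result} for rule {message.get('configRuleName', '?')}"
    if kind == "ConfigurationItemChangeNotification":
        item = message.get("configurationItem", {})
        return f"{item.get('resourceType', '?')} {item.get('resourceId', '?')} changed"
    return f"Unhandled message type: {kind}"


# Hypothetical message body.
sample = json.dumps({
    "messageType": "ComplianceChangeNotification",
    "resourceId": "my-bucket",
    "configRuleName": "s3-encryption",
    "newEvaluationResult": {"complianceType": "NON_COMPLIANT"},
})
print(summarize_notification(sample))
```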

From a security perspective, AWS Config helps enforce standards and regulatory requirements. It supports frameworks such as HIPAA, PCI DSS, SOC, and ISO by enabling organizations to demonstrate compliance through automated checks and detailed audit trails.

These records are easily queryable and are delivered to an S3 bucket you control, which makes them well suited to compliance teams and auditors as long as access to that bucket is tightly restricted.

Getting started with AWS Config is straightforward. You can enable it via the AWS Management Console, CLI, or SDKs. You choose which resources to monitor and which rules to apply.

AWS offers a library of predefined managed rules for common best practices, so you can begin enforcing policies immediately. As your environment grows, you can create custom rules using AWS Lambda to meet more specific compliance and security needs.
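The decision logic inside a custom rule is usually a small function that maps one configuration item to a compliance result. This is a minimal sketch of that logic only: the compliance type strings are the ones AWS Config uses, but the exact location of the encryption settings inside the item is an assumption here.

```python
def evaluate_bucket_encryption(configuration_item: dict) -> str:
    """Return a compliance type for one S3 bucket configuration item."""
    if configuration_item.get("resourceType") != "AWS::S3::Bucket":
        return "NOT_APPLICABLE"
    supplementary = configuration_item.get("supplementaryConfiguration", {})
    # Assumed field: default encryption settings recorded under this key.
    encryption = supplementary.get("ServerSideEncryptionConfiguration")
    return "COMPLIANT" if encryption else "NON_COMPLIANT"


# Hypothetical, heavily simplified configuration item.
item = {"resourceType": "AWS::S3::Bucket", "resourceId": "my-bucket", "supplementaryConfiguration": {}}
print(evaluate_bucket_encryption(item))
```

In a deployed rule, the Lambda handler would extract the configuration item from the invoking event and report the result back to AWS Config through the PutEvaluations API.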

Costs are based on the number of configuration items recorded and the number of rule evaluations performed. While it’s not free, AWS Config is cost-effective compared to the potential impact of undetected misconfigurations or noncompliance.

You can manage costs by selectively recording only necessary resources and optimizing the frequency of rule evaluations.
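A back-of-the-envelope estimate follows directly from those two usage drivers. The unit prices in this sketch are placeholders for illustration only, not quoted AWS rates, and the function name is hypothetical.

```python
def monthly_config_cost(config_items: int, rule_evaluations: int,
                        price_per_item: float, price_per_evaluation: float) -> float:
    """Estimate a monthly bill from recorded configuration items and rule evaluations."""
    return config_items * price_per_item + rule_evaluations * price_per_evaluation


# Placeholder unit prices; check the current AWS Config pricing page before relying on them.
estimate = monthly_config_cost(50_000, 200_000, price_per_item=0.003, price_per_evaluation=0.001)
print(f"${estimate:.2f}")
```

Running the same function with a narrower set of recorded resource types shows how much selective recording would save.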

AWS Config is an essential service for any organization serious about cloud governance. It provides unmatched visibility into AWS resource configurations, automates compliance checks, simplifies audits, and enhances security posture.

Whether you are a developer, operations engineer, security professional, or compliance officer, AWS Config equips you with the tools to monitor, track, and secure your infrastructure proactively. It transforms the complexity of cloud resource management into a manageable, automated, and transparent process.

Key Features of AWS Config

- Resource Inventory
  - Automatically discovers and records configuration details for AWS resources (EC2, S3, IAM, etc.).
  - Builds a complete inventory for visibility and audit.

- Configuration History
  - Tracks configuration changes over time.
  - Lets you see what changed, when it changed, and (together with AWS CloudTrail) who made the change.

- Compliance Auditing
  - Evaluates resources against custom rules or managed rules (e.g., “S3 buckets must be encrypted”).
  - Marks resources as compliant or noncompliant.

- Change Notifications
  - Sends alerts (via Amazon SNS or CloudWatch) when configurations change or resources go out of compliance.

- Integration with AWS Organizations
  - Centralized governance and compliance for multi-account environments.

Common Use Cases

- Security & Compliance Auditing
  - Detect open security groups, unencrypted S3 buckets, or non-compliant IAM roles.

- Operational Troubleshooting
  - Pinpoint when and how a resource’s settings were modified.

- Governance in Multi-Account Setups
  - Monitor and enforce policies across multiple AWS accounts.

- Change Management
  - Track unauthorized changes to resources or environments.

How It Works.

- AWS Config Recorder logs configuration details and resource relationships.

- AWS Config Rules evaluate those configurations against best practices or policies.

- AWS Config Aggregator (optional) consolidates data across accounts and regions.
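The aggregation step above amounts to rolling many per-resource results up into a per-account view. The sketch below works on a hypothetical flat list of evaluation records; the record fields and account IDs are invented for illustration and do not mirror the aggregator API.

```python
from collections import Counter, defaultdict


def aggregate_compliance(evaluations: list[dict]) -> dict[str, Counter]:
    """Count compliance results per account from a flat list of evaluation records."""
    summary = defaultdict(Counter)
    for ev in evaluations:
        summary[ev["account"]][ev["compliance"]] += 1
    return dict(summary)


# Hypothetical evaluation records from two accounts.
records = [
    {"account": "111111111111", "region": "eu-west-1", "compliance": "COMPLIANT"},
    {"account": "111111111111", "region": "us-east-1", "compliance": "NON_COMPLIANT"},
    {"account": "222222222222", "region": "eu-west-1", "compliance": "COMPLIANT"},
]
for account, counts in aggregate_compliance(records).items():
    print(account, dict(counts))
```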

Cost Considerations.

You’re charged based on:

- Number of configuration items recorded

- Number of rule evaluations performed

Tip: Record only the resource types you need and tune how often rules are evaluated to manage costs.

Getting Started

- Enable AWS Config in the AWS Console.

- Choose the resources to record (or select all).

- Set up AWS Config Rules (start with managed rules).

- Monitor the compliance dashboard.

- Set up alerts for changes or violations.

Final Thoughts.

AWS Config is essential for security-conscious teams and regulated industries. Even in less strictly regulated environments, it offers powerful insights into resource changes, drift detection, and automated governance.

Start small, apply relevant rules, and expand as you grow. AWS Config brings visibility, accountability, and peace of mind to your cloud operations.

Conclusion.

AWS Config is a powerful tool for maintaining visibility, security, and compliance in your AWS environment. By automatically tracking configuration changes and evaluating resources against defined rules, it helps organizations detect misconfigurations early, enforce governance policies, and simplify audits.

Whether you’re a small startup or a large enterprise, adopting AWS Config can give you the confidence that your infrastructure is secure, compliant, and well-documented, without needing to manually track every change.

Start with a few key rules, monitor your compliance, and scale your usage as your infrastructure grows. With AWS Config, you’re always one step ahead in managing cloud risk and compliance.

How to Simplify Multi-Account Management with AWS Organizations.

What is AWS Organizations?

AWS Organizations is a cloud management service provided by Amazon Web Services that allows you to centrally manage and govern multiple AWS accounts within a single structure. It is especially useful for enterprises, startups, or any organization that uses more than one AWS account to separate environments, teams, or projects.

With AWS Organizations, you can group accounts into Organizational Units (OUs), which can reflect your company’s hierarchy or functional divisions. This setup enables centralized governance using Service Control Policies (SCPs), which let you enforce permission boundaries and prevent unsafe or unauthorized actions across accounts.

One of the core benefits of AWS Organizations is centralized billing, allowing all member accounts to consolidate their costs under the management account and take advantage of volume pricing discounts.

It also improves operational efficiency by allowing administrators to automate account creation, apply policies at scale, and configure environments consistently using tools like AWS Control Tower.

In terms of security and compliance, AWS Organizations allows you to maintain global controls with organization-wide logging (via CloudTrail), compliance monitoring (via AWS Config), and shared access controls (via IAM Identity Center).

Furthermore, it promotes strong isolation between workloads while still enabling collaboration, as accounts can communicate through defined roles and resource sharing mechanisms.

Overall, AWS Organizations is a foundational service for enterprises looking to scale securely, manage costs effectively, and apply governance across a growing cloud footprint.

It supports a well-architected multi-account strategy that is essential for managing complex cloud environments at scale.

Steps to Simplify Multi-Account Management.

To simplify multi-account management in AWS, start by creating an AWS Organization using a management account, which acts as the central hub for governance. Next, group your AWS accounts into Organizational Units (OUs) based on function (e.g., Dev, Prod, Finance) or business structure.

Apply Service Control Policies (SCPs) to these OUs to enforce permissions and restrict unauthorized actions across accounts. Enable consolidated billing to manage all account expenses in one place and benefit from volume discounts.

Leverage AWS Control Tower to automate the provisioning of new accounts with pre-configured guardrails and baseline security settings. Integrate IAM Identity Center (AWS SSO) to provide centralized, role-based access control across all accounts for users and groups.

Use tagging standards consistently across resources and accounts to support cost tracking, organization, and reporting.

Enable CloudTrail and AWS Config at the organizational level for centralized logging, auditing, and compliance monitoring. Establish a logging account to collect and archive security, access, and billing logs. Implement account vending using automation to simplify new account creation and ensure consistency.

Finally, regularly review account usage, security posture, and cost data to refine policies and optimize governance.

Use Organizational Units (OUs).

Organizational Units (OUs) in AWS Organizations allow you to group AWS accounts based on function, department, or environment, such as Development, Production, or Finance.

This hierarchical structure helps you manage and apply governance policies more effectively. By organizing accounts into OUs, you can assign Service Control Policies (SCPs) to entire groups, ensuring consistent security and compliance standards.

OUs simplify policy management by reducing the need to configure each account individually. They also make it easier to scale operations as your organization grows. For example, a “Dev OU” can have looser restrictions for testing, while a “Prod OU” enforces tighter controls.

This separation improves both security and operational clarity. Additionally, OUs support auditing and monitoring by grouping related activities together.

Using OUs is a best practice for structuring and managing multi-account AWS environments efficiently.
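The inheritance behaviour can be modelled as a small tree in which an account is subject to every restriction attached anywhere above it. This is a simplified sketch with hypothetical names: it tracks only explicit denies, whereas real SCP evaluation also requires an allow at every level of the hierarchy.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class OrgUnit:
    name: str
    parent: Optional["OrgUnit"] = None
    denied_actions: set[str] = field(default_factory=set)  # actions denied by SCPs attached here

    def effective_denies(self) -> set[str]:
        """Union of denies attached to this OU and every ancestor up to the root."""
        inherited = self.parent.effective_denies() if self.parent else set()
        return inherited | self.denied_actions


root = OrgUnit("Root", denied_actions={"organizations:LeaveOrganization"})
prod = OrgUnit("Prod", parent=root, denied_actions={"ec2:TerminateInstances"})
dev = OrgUnit("Dev", parent=root)
print(sorted(prod.effective_denies()), sorted(dev.effective_denies()))
```

The Dev OU ends up with only the root-level restriction, while Prod accumulates its own tighter control on top.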

Apply Service Control Policies (SCPs).

Service Control Policies (SCPs) in AWS Organizations are used to manage permissions across multiple AWS accounts. They act as guardrails, setting the maximum available permissions for accounts or Organizational Units (OUs).

SCPs don’t grant access directly but restrict what actions can be allowed, even if IAM policies permit them. This ensures centralized control over security and compliance. For example, you can deny access to certain services, block resource deletion, or limit actions to specific AWS regions.

Applying SCPs at the OU level allows consistent governance across all member accounts. This reduces risk, prevents misconfigurations, and enforces organizational policies.

SCPs are essential in environments like production, where strict access control is critical. Regular updates to SCPs help adapt to changing business and security needs.
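As an example of the region restriction mentioned above, an SCP is a JSON policy document with a deny statement and a condition on the requested region. The sketch below generates one; the region list and the exempted global services are illustrative choices that would need review before being attached to a real OU.

```python
import json


def region_restriction_scp(allowed_regions: list[str], exempt_actions: list[str]) -> str:
    """Build an SCP document that denies requests outside the allowed regions."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRegions",
                "Effect": "Deny",
                # Global services are exempted so they keep working from any region.
                "NotAction": exempt_actions,
                "Resource": "*",
                "Condition": {"StringNotEquals": {"aws:RequestedRegion": allowed_regions}},
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(region_restriction_scp(["eu-west-1", "eu-central-1"], ["iam:*", "organizations:*", "sts:*"]))
```

Because the statement is a deny, it overrides any IAM policy in the affected accounts that would otherwise allow the action.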

Centralize Billing.

Centralizing billing with AWS Organizations allows you to consolidate payments for multiple AWS accounts under a single management account.

This simplifies financial tracking and enables you to view all charges in one place. By using consolidated billing, you can also take advantage of volume pricing discounts and shared Reserved Instances across accounts, which reduces overall costs.
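The volume discount effect is easiest to see with numbers. The sketch below uses hypothetical pricing tiers, not actual AWS rates, to compare three accounts billed separately against the same usage aggregated under one payer.

```python
def tiered_cost(usage_gb: float, tiers: list[tuple[float, float]]) -> float:
    """Cost under tiered pricing; tiers are (tier_size_gb, price_per_gb), last size may be inf."""
    remaining, total = usage_gb, 0.0
    for size, price in tiers:
        billed = min(remaining, size)
        total += billed * price
        remaining -= billed
        if remaining <= 0:
            break
    return total


# Hypothetical tiers: first 50,000 GB at $0.023/GB, everything beyond at $0.021/GB.
TIERS = [(50_000, 0.023), (float("inf"), 0.021)]
separate = 3 * tiered_cost(30_000, TIERS)  # three accounts billed on their own
combined = tiered_cost(90_000, TIERS)      # the same usage aggregated under one payer
print(f"separate=${separate:.2f} combined=${combined:.2f}")
```

Billed separately, none of the accounts reaches the cheaper tier; combined, part of the usage does, which is where the saving comes from.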

Each member account retains its own resources and permissions but does not receive separate invoices. The management account pays the total bill, and detailed usage reports help with internal cost allocation. Centralized billing also improves budgeting and forecasting accuracy.

You can use AWS Cost Explorer and Cost & Usage Reports to analyze spending by account or service. Applying consistent tagging across accounts further enhances cost tracking.

Overall, centralized billing streamlines financial management and supports better decision-making across your organization.

Automate with AWS Control Tower.

AWS Control Tower is a service that automates the setup and governance of a secure, multi-account AWS environment based on best practices. It simplifies account provisioning by using Account Factory, allowing you to create new AWS accounts with pre-configured security, logging, and compliance settings.

Control Tower establishes a landing zone, which includes baseline guardrails, centralized logging, and monitoring across all accounts. These guardrails are implemented using Service Control Policies (SCPs) and AWS Config rules to enforce governance.

It also integrates with AWS Organizations to apply consistent policies to Organizational Units (OUs). Control Tower sets up centralized logging for CloudTrail and AWS Config in dedicated accounts. It reduces manual effort and ensures each account is compliant from day one.

As your organization scales, Control Tower helps maintain control and visibility. It’s ideal for enterprises seeking a standardized and secure cloud foundation. Regular updates ensure alignment with evolving AWS best practices.

Use AWS IAM Identity Center (formerly AWS SSO).

AWS IAM Identity Center (formerly AWS Single Sign-On) provides centralized access management across multiple AWS accounts and applications.

It allows you to define user identities and assign them fine-grained permissions to specific accounts and roles within your AWS Organization. By integrating with external identity providers like Microsoft Active Directory or Okta, you can manage access using your existing corporate credentials.

IAM Identity Center simplifies user provisioning and eliminates the need to create IAM users in each account. You can group users by department or role and assign permission sets that align with their responsibilities.

This improves security by enforcing least-privilege access and simplifying credential management. Users benefit from a single sign-on portal to access all assigned AWS accounts and services. Centralized access control enhances auditability and compliance tracking.

IAM Identity Center also supports multi-factor authentication (MFA) for added security. It’s a key component in building a scalable, secure multi-account AWS environment.

Tagging and Cost Allocation.

Tagging in AWS Organizations is the practice of assigning metadata labels, or tags, to AWS resources across multiple accounts to improve organization and cost tracking. By applying consistent tagging strategies, you can easily categorize resources by project, department, environment, or owner.

This standardization enables accurate cost allocation and budgeting, helping organizations understand where and how AWS resources are consumed. AWS Cost Allocation Tags allow you to break down bills and usage reports by tag values, providing detailed visibility into spending.

Tagging also supports automation, security policies, and compliance efforts by identifying resources across accounts. Implementing tagging policies centrally ensures consistency and reduces errors.
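To make the idea of a consistent tagging strategy concrete, here is a minimal sketch of a tag audit in Python. The required keys (`project`, `environment`, `owner`) and the resource IDs are assumptions chosen for illustration, not an AWS default; in practice the tag data would come from your own inventory tooling.

```python
from __future__ import annotations

# Hypothetical policy: every resource must carry these tag keys.
# AWS tag keys are case-sensitive, so the comparison below is exact.
REQUIRED_TAGS = {"project", "environment", "owner"}


def missing_tags(resource_tags: dict[str, str],
                 required: set[str] = REQUIRED_TAGS) -> list[str]:
    """Return the required tag keys that are absent or blank, sorted."""
    present = {key for key, value in resource_tags.items() if value.strip()}
    return sorted(required - present)


def audit(resources: dict[str, dict[str, str]]) -> dict[str, list[str]]:
    """Map resource ID to its missing tag keys, for non-compliant resources only."""
    report = {}
    for resource_id, tags in resources.items():
        gaps = missing_tags(tags)
        if gaps:
            report[resource_id] = gaps
    return report


if __name__ == "__main__":
    inventory = {
        "i-0example1": {"project": "shop", "environment": "prod", "owner": "team-a"},
        "i-0example2": {"project": "shop", "owner": " "},
    }
    print(audit(inventory))
```

A check like this could run on a schedule and feed a report, which complements centrally enforced tag policies rather than replacing them.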

Combining tagging with AWS Cost Explorer and Cost & Usage Reports offers powerful tools for financial management.

Proper tagging helps teams optimize costs, detect unused resources, and plan future budgets. It’s essential for organizations managing complex, multi-account AWS environments.

Security & Governance Tips

- Enable AWS CloudTrail Organization Trail to get full visibility across accounts.

- Use AWS Config Aggregator to monitor compliance across the org.

- Define and enforce guardrails with SCPs and IAM best practices.
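As an illustration of the guardrails mentioned above, the following sketch builds a Service Control Policy document in Python and renders it as JSON. The two statements (blocking accounts from leaving the organization and from stopping or deleting CloudTrail trails) are example guardrails chosen for this sketch, and the `Sid` values are arbitrary labels; adapt both to your own governance needs.

```python
import json


def build_guardrail_scp() -> dict:
    """Return an example SCP that denies two classes of risky actions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Prevent member accounts from detaching themselves.
                "Sid": "DenyLeaveOrganization",
                "Effect": "Deny",
                "Action": ["organizations:LeaveOrganization"],
                "Resource": "*",
            },
            {
                # Protect the audit trail from being switched off or removed.
                "Sid": "DenyCloudTrailTampering",
                "Effect": "Deny",
                "Action": ["cloudtrail:StopLogging", "cloudtrail:DeleteTrail"],
                "Resource": "*",
            },
        ],
    }


def render(policy: dict) -> str:
    """Serialize the policy as indented JSON, ready to paste or upload."""
    return json.dumps(policy, indent=2)


if __name__ == "__main__":
    print(render(build_guardrail_scp()))
```

The rendered JSON can then be attached to an OU through the console, the CLI, or your IaC tool of choice. Test any SCP on a non-production OU first, since a deny statement applies to every principal in the affected accounts.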

Best Practices Summary

| Practice | Benefit |
|---|---|
| Use OUs and SCPs | Structured governance |
| Centralize billing | Cost efficiency |
| Use Control Tower | Automated setup |
| IAM Identity Center | Simplified user access |
| Enable Org-wide CloudTrail | Unified auditing |
| Tag resources consistently | Easier cost allocation and tracking |

Conclusion.

AWS Organizations is a powerful tool that simplifies multi-account management by offering centralized control, improved security, and streamlined billing. By leveraging features like Organizational Units, Service Control Policies, AWS Control Tower, and IAM Identity Center, you can build a scalable, secure, and well-governed multi-account AWS environment.

Implementing these best practices helps you:

- Enforce consistent policies

- Reduce administrative overhead

- Improve visibility and compliance

- Optimize costs across accounts

In short, AWS Organizations enables you to manage cloud growth with control and confidence, making it essential for modern, multi-account AWS environments.

Top Slack Integrations for DevOps Teams.

Introduction.

DevOps teams thrive on speed, collaboration, and automation, and Slack has become the go-to hub for enabling all three. By integrating key DevOps tools directly into Slack, teams can manage incidents, monitor systems, deploy code, and streamline workflows without switching contexts. These integrations help break down silos between development and operations, offering real-time updates and actionable insights within the team’s main communication platform.

Whether it’s alerting on outages, tracking pull requests, or automating CI/CD pipelines, Slack bridges the gap between people and systems. It enhances visibility, reduces response times, and fosters a culture of accountability. With the right integrations, teams can make data-driven decisions faster and resolve issues proactively. From cloud monitoring to infrastructure automation, Slack becomes a command center for modern DevOps. As teams grow more distributed and systems more complex, these integrations are no longer optional; they are essential.

CI/CD & Deployment Integrations.

Jenkins

Jenkins is one of the most widely used open-source automation tools for continuous integration and continuous delivery (CI/CD), and its integration with Slack brings real-time visibility into the software delivery pipeline.

With this integration, teams can receive instant notifications on build status, test results, and deployment outcomes directly within Slack channels. Whether a build passes, fails, or gets stuck, team members are alerted immediately, reducing downtime and speeding up debugging.
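The notification flow described above can be sketched with a Slack incoming webhook, which accepts a JSON body containing a `text` field. This is a minimal sketch, not the Jenkins Slack plugin's implementation: the function names, the emoji mapping, and the choice to notify only on `FAILURE` and `UNSTABLE` are assumptions made for illustration, and the webhook URL would come from your own Slack app configuration.

```python
import json
import urllib.request

# Assumed filter: only these Jenkins build results produce a Slack message.
NOTIFY_ON = {"FAILURE", "UNSTABLE"}

# Assumed mapping from Jenkins build result to a Slack emoji code.
ICONS = {"SUCCESS": ":white_check_mark:", "FAILURE": ":x:", "UNSTABLE": ":warning:"}


def should_notify(status: str, notify_on=NOTIFY_ON) -> bool:
    """Decide whether a build result is worth posting to the channel."""
    return status in notify_on


def build_message(job: str, number: int, status: str, url: str) -> dict:
    """Build the JSON payload for a Slack incoming webhook."""
    icon = ICONS.get(status, ":grey_question:")
    # <url|label> is Slack's link syntax in message text.
    return {"text": f"{icon} {job} #{number} finished with status {status}: <{url}|open build>"}


def post_to_slack(webhook_url: str, payload: dict) -> int:
    """POST the payload to the webhook and return the HTTP status code."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


if __name__ == "__main__":
    if should_notify("FAILURE"):
        print(build_message("api", 42, "FAILURE", "https://ci.example.com/job/api/42/"))
```

Keeping the filter (`should_notify`) separate from the formatting makes it easy to tune which results reach a channel without touching the message layout.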

Customizable messages allow teams to filter the most critical updates. Jenkins can also be configured to trigger builds using Slack slash commands, making deployments even more interactive. This integration helps maintain a seamless feedback loop between developers, testers, and ops.

It minimizes context switching and ensures everyone stays informed throughout the release cycle. By combining Jenkins with Slack, teams gain tighter control over their delivery process and can respond to issues before they impact users.

GitHub/GitLab

Integrating GitHub or GitLab with Slack keeps development teams closely connected to their code activity. Teams receive real-time notifications for events like pull requests, commits, issues, merges, and code reviews directly in Slack.

This visibility helps prevent delays by encouraging quick feedback and collaboration. Developers can track repository changes and participate in discussions without leaving Slack.

The integration is highly customizable, allowing teams to choose which events to track and where to post them. Some setups even support slash commands to interact with repos. Overall, it streamlines code collaboration and keeps everyone in sync.

CircleCI

CircleCI’s integration with Slack brings powerful CI/CD automation directly into team communication, helping developers stay on top of build and deployment pipelines.

With this integration, teams receive instant updates when a build starts, passes, or fails, ensuring no change goes unnoticed. Slack channels can be configured to receive only relevant job updates, keeping noise low and focus high.

When a failure occurs, developers are quickly alerted with direct links to logs, making it easier to diagnose and fix issues. This tight feedback loop accelerates delivery and improves software quality.

CircleCI’s Slack integration also supports insights into test performance and build duration. It encourages a DevOps culture of shared responsibility and transparency. Teams can celebrate passing builds together or swarm on failed ones. Overall, it enhances visibility into the CI/CD process and helps reduce bottlenecks.

Incident & Alert Management.

PagerDuty

PagerDuty’s Slack integration empowers DevOps teams to respond to incidents faster by bringing critical alerts and on-call workflows directly into their chat environment. When an incident occurs, PagerDuty sends real-time notifications to designated Slack channels or individuals, reducing the need to check multiple tools.

Team members can acknowledge, escalate, or resolve incidents directly within Slack, saving valuable time during outages. The integration provides rich incident context, such as source, severity, and affected service, allowing for informed decision-making.

Automated alerts can be filtered to avoid noise and ensure the right people are notified. PagerDuty also supports creating incidents manually from Slack when issues are spotted in conversation.

Integration with status updates and runbooks further accelerates incident response. It helps teams collaborate more effectively during high-pressure moments. By centralizing alert management in Slack, PagerDuty strengthens operational awareness and minimizes downtime.

Datadog.

Datadog’s integration with Slack provides DevOps teams with real-time monitoring alerts, performance insights, and system health updates directly in their communication channels.

It allows teams to receive notifications about anomalies, threshold breaches, and deployment impacts without leaving Slack. Visual snapshots of dashboards or graphs can be shared instantly, making data-driven discussions faster and more collaborative.

Teams can customize which alerts go to which Slack channels, ensuring relevance and reducing noise. When incidents arise, Datadog alerts include detailed metadata and direct links to related dashboards for quick investigation. This integration supports better visibility across infrastructure, applications, and services.

It helps streamline incident detection, triage, and response. Datadog also integrates seamlessly with other tools like AWS, Kubernetes, and Jenkins, making it a central hub for observability. By connecting Datadog with Slack, teams improve uptime, reduce mean time to resolution (MTTR), and foster proactive operations.

Prometheus + Alertmanager.

Prometheus combined with Alertmanager offers powerful monitoring and alerting capabilities, and integrating it with Slack ensures timely incident awareness for DevOps teams.

When Prometheus detects an issue, such as high CPU usage, memory leaks, or service downtime, Alertmanager routes the alert to the appropriate Slack channel. Alerts can be customized with severity levels, labels, and routing rules to ensure that the right people are notified without overwhelming the team.

Each alert message includes key metrics, timestamps, and direct links to dashboards or logs for faster debugging. This integration helps teams identify and address problems before they escalate. Slack notifications foster quick collaboration, allowing teams to discuss and act on alerts in real time.

Alert silencing, grouping, and inhibition features reduce alert fatigue and help maintain focus. With Prometheus and Slack working together, observability becomes part of daily operations. This setup supports proactive monitoring, faster incident response, and a stronger DevOps culture.
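The routing and grouping ideas can be illustrated with a short Python sketch. It mimics the concepts only (label matchers, first match wins, grouping by shared labels) and is not how Alertmanager is configured in practice, which is done declaratively in its YAML configuration. The channel names, label values, and function names below are assumptions.

```python
# Assumed routing table: (label matchers, Slack channel), checked in order.
ROUTES = [
    ({"severity": "critical"}, "#oncall"),
    ({"team": "database"}, "#db-alerts"),
]
DEFAULT_CHANNEL = "#alerts"


def route(labels: dict) -> str:
    """Return the channel of the first route whose matchers all equal the alert's labels."""
    for matchers, channel in ROUTES:
        if all(labels.get(key) == value for key, value in matchers.items()):
            return channel
    return DEFAULT_CHANNEL


def group_alerts(alerts: list, by=("alertname",)) -> dict:
    """Bucket alerts that share the same values for the `by` labels."""
    groups = {}
    for alert in alerts:
        key = tuple(alert["labels"].get(name, "") for name in by)
        groups.setdefault(key, []).append(alert)
    return groups


if __name__ == "__main__":
    firing = [
        {"labels": {"alertname": "HighCPU", "instance": "a", "severity": "critical"}},
        {"labels": {"alertname": "HighCPU", "instance": "b", "severity": "critical"}},
        {"labels": {"alertname": "DiskFull", "instance": "a", "team": "database"}},
    ]
    for key, members in group_alerts(firing).items():
        print(key, len(members), route(members[0]["labels"]))
```

Grouping means two `HighCPU` alerts arrive as one Slack message instead of two, which is the main lever against alert fatigue.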

Infrastructure & Cloud Tools.

AWS CloudWatch / Lambda

AWS CloudWatch and Lambda integrations with Slack enable DevOps teams to monitor, automate, and react to infrastructure events in real time.

CloudWatch sends alerts to Slack channels when predefined thresholds are breached, such as high CPU usage, failed health checks, or unusual traffic patterns. These alerts include detailed metrics and links to the AWS console, speeding up diagnosis and response.

Lambda functions can be triggered automatically by these alerts to execute remediation tasks like restarting instances or scaling services. With Slack in the loop, teams receive confirmation messages when Lambda functions run, helping maintain transparency and accountability.
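One common way to wire this up is to have the CloudWatch alarm publish to an SNS topic that invokes a Lambda function. The sketch below assumes that setup: it parses the alarm JSON carried in the SNS record and builds a Slack message payload. The function names are hypothetical, and the actual HTTP POST to Slack and any remediation logic are left out on purpose.

```python
import json


def alarm_to_slack(event: dict) -> dict:
    """Turn an SNS-delivered CloudWatch alarm event into a Slack payload."""
    # SNS delivers the alarm as a JSON string inside the first record.
    message = json.loads(event["Records"][0]["Sns"]["Message"])
    name = message.get("AlarmName", "unknown alarm")
    state = message.get("NewStateValue", "UNKNOWN")
    reason = message.get("NewStateReason", "")
    return {"text": f"*{name}* is now {state}\n{reason}"}


def lambda_handler(event, context):
    """Lambda entry point: build the payload; sending it is omitted in this sketch."""
    payload = alarm_to_slack(event)
    # A real function would POST `payload` to a Slack webhook here.
    return payload


if __name__ == "__main__":
    sample = {
        "Records": [
            {"Sns": {"Message": json.dumps({
                "AlarmName": "cpu-high",
                "NewStateValue": "ALARM",
                "NewStateReason": "Threshold crossed",
            })}}
        ]
    }
    print(lambda_handler(sample, None))
```

Separating the parsing step from the handler keeps the logic testable locally with a sample event, without deploying anything.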

This setup reduces the need for manual intervention during incidents. Slack also supports bi-directional interaction through AWS Chatbot (since renamed Amazon Q Developer in chat applications), allowing users to query metrics or trigger actions from within Slack.

Combining CloudWatch and Lambda in Slack helps teams build a more proactive and responsive cloud operations workflow. It improves visibility, reduces downtime, and enhances operational efficiency.

Terraform Cloud.

Terraform Cloud’s Slack integration helps DevOps teams stay informed and in control of infrastructure changes through real-time notifications.

When a run is initiated, whether it’s a plan, apply, or destroy operation, updates are sent directly to designated Slack channels. This allows teams to monitor infrastructure workflows without leaving their communication platform.

Notifications include run status, affected resources, policy check results, and links to the Terraform UI for deeper inspection. By surfacing these updates in Slack, teams gain better visibility into who is making changes and why.

It promotes transparency and fosters collaboration around infrastructure decisions. Alerts for failed plans or policy violations ensure that potential issues are caught early. Approvals for runs can also be coordinated quickly within the team.

Overall, the integration strengthens infrastructure governance while accelerating delivery.

ChatOps & Automation.

Slack Workflow Builder

Slack Workflow Builder empowers DevOps teams to automate routine tasks and streamline processes directly within Slack, without needing extensive coding skills.

It allows users to create custom workflows that guide team members through complex procedures, like incident reporting, onboarding, or deployment checklists. Workflows can include forms, approvals, and notifications, helping standardize operations and reduce errors.

By embedding automation in Slack, teams save time and avoid context switching between tools. The visual interface makes it easy to design and modify workflows collaboratively.

Workflow Builder also integrates with external apps and APIs, expanding its capabilities. It supports faster response times and consistent execution of critical processes.

With Slack Workflow Builder, teams adopt a more efficient, transparent, and scalable approach to everyday tasks. It’s a key enabler for ChatOps, driving greater operational agility and collaboration.

Hubot / Slackbot Scripts

Hubot and Slackbot scripts bring powerful ChatOps automation to Slack, enabling DevOps teams to interact with their tools and infrastructure through simple chat commands.

These bots can perform a wide range of tasks, from deploying code, checking system status, and running tests to fetching logs or restarting services, all within Slack conversations. By automating repetitive tasks, they reduce manual work and human error.

Custom scripts can be tailored to fit team-specific workflows, making operations faster and more consistent.
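The core of a ChatOps bot is a dispatcher that maps chat text to actions. The following is a minimal sketch of that pattern in Python; it is not Hubot's API (Hubot scripts are written in JavaScript or CoffeeScript), and the command names, replies, and the `command` decorator are all hypothetical. Real handlers would call your deployment or monitoring tooling instead of returning canned strings.

```python
from __future__ import annotations

from typing import Callable

# Registry of chat commands: name -> handler taking the remaining words.
COMMANDS: dict[str, Callable[[list[str]], str]] = {}


def command(name: str):
    """Decorator that registers a function as the handler for a chat command."""
    def register(handler):
        COMMANDS[name] = handler
        return handler
    return register


@command("status")
def status(args: list[str]) -> str:
    service = args[0] if args else "all"
    return f"status requested for {service}"


@command("deploy")
def deploy(args: list[str]) -> str:
    if len(args) != 2:
        return "usage: deploy <service> <environment>"
    service, environment = args
    return f"deploy of {service} to {environment} queued"


def handle(message: str) -> str:
    """Parse a chat message and run the matching command, if any."""
    parts = message.strip().split()
    if not parts:
        return "no command given"
    name, args = parts[0].lower(), parts[1:]
    handler = COMMANDS.get(name)
    if handler is None:
        return f"unknown command: {name} (try: {', '.join(sorted(COMMANDS))})"
    return handler(args)


if __name__ == "__main__":
    print(handle("deploy api staging"))
    print(handle("restart api"))
```

Because every command and its reply appear in the channel, the whole team can see who ran what, which is the visibility benefit ChatOps is meant to provide.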

Hubot, originally developed by GitHub, supports a rich ecosystem of plugins, while Slackbot offers easy-to-use built-in automation. Both bots foster collaboration by making technical actions visible and accessible to the whole team.