How to Optimize EBS Performance for High I/O Workloads.

Introduction.

In the era of cloud-native applications and data-driven systems, performance optimization plays a pivotal role in maintaining the efficiency, scalability, and reliability of compute and storage infrastructure. One critical component in this performance landscape is Amazon Elastic Block Store (EBS), a highly available and persistent block storage solution provided by Amazon Web Services (AWS).

EBS is commonly used in conjunction with Amazon EC2 instances to support a wide range of workloads from general-purpose computing and databases to high-performance analytics, streaming, and transactional systems. However, not all workloads are created equal, and high I/O workloads, in particular, demand a meticulously planned and well-optimized EBS configuration to avoid bottlenecks, downtime, or performance degradation.

High I/O workloads typically involve frequent and intensive read/write operations that require low latency, high throughput, and consistent IOPS (input/output operations per second) delivery. Examples include OLTP databases like MySQL or PostgreSQL, NoSQL systems such as Cassandra or MongoDB, data warehousing platforms, large-scale log analytics, distributed file systems, and real-time video processing applications.

To meet such demanding requirements, it is essential to understand how EBS works, what performance tiers and volume types are available, and how to configure both the storage and the EC2 instances effectively.

Optimizing EBS for high I/O workloads begins with selecting the right volume type. AWS offers multiple EBS volume types, including General Purpose SSDs (gp2 and gp3), Provisioned IOPS SSDs (io1 and io2), and HDD-backed volumes (Throughput Optimized st1 and Cold sc1). For workloads that require predictable, high-performance storage, io2 and its advanced variant, io2 Block Express, provide higher IOPS, low latency, and high durability. gp3 also lets you provision IOPS and throughput independently of capacity, making it an excellent choice for many performance-sensitive applications at a lower cost than io2.

In addition to selecting the right volume, using EBS-optimized EC2 instances is critical. These instances provide dedicated bandwidth to EBS, minimizing latency and improving overall performance. Most modern Nitro-based EC2 instances, such as the C6i, M6i, and R6i families, come EBS-optimized by default and support significantly higher bandwidth and IOPS compared to older generations.

To further enhance performance, administrators can configure RAID 0 arrays using multiple EBS volumes to scale IOPS and throughput roughly linearly, up to the EBS limits of the instance. While RAID 0 provides no redundancy, it is often suitable for ephemeral data or where application-level replication handles fault tolerance.

OS-level and file system tuning also play a crucial role in performance. Choosing a lightweight I/O scheduler such as noop or deadline (exposed as none and mq-deadline on newer multi-queue kernels) and using efficient file systems like ext4 or XFS with optimized mount options can reduce latency and improve throughput. It’s equally important to monitor EBS performance using tools like Amazon CloudWatch, which offers metrics such as volume IOPS, throughput, queue length, and burst balance.

These insights help identify bottlenecks, detect under-provisioned resources, and plan scaling actions proactively. Additionally, for workloads launched from EBS snapshots, enabling Fast Snapshot Restore (FSR) can drastically reduce initialization time and improve startup performance.

Another vital consideration is avoiding the performance variability introduced by burst-based volumes such as gp2 and st1, which rely on credit systems. While these are suitable for low to moderate workloads, they may not sustain high-performance levels under prolonged stress. For mission-critical or high-throughput systems, opting for gp3 or io2 ensures consistent performance. Multi-Attach capability on io1 and io2 volumes also enables concurrent access from multiple EC2 instances, supporting clustered or highly available applications.

Ultimately, optimizing EBS for high I/O workloads is not a one-size-fits-all solution. It requires a thorough understanding of workload characteristics, AWS service capabilities, and performance tuning best practices. By leveraging the appropriate EBS volume types, selecting powerful EC2 instance types, implementing RAID or file system optimizations, and continuously monitoring and adapting the configuration, organizations can achieve reliable, scalable, and high-performance storage solutions on AWS that meet even the most demanding I/O requirements.

1. Choose the Right EBS Volume Type

AWS provides different EBS volume types designed for various workloads.

General Use:

- gp3 (General Purpose SSD): Cost-effective, customizable IOPS and throughput (up to 16,000 IOPS and 1,000 MB/s).

- gp2: Older version; performance scales with volume size (up to 16,000 IOPS).

High-Performance:

- io2 / io2 Block Express (Provisioned IOPS SSD):
  - Designed for I/O-intensive workloads (databases, analytics).
  - Up to 256,000 IOPS and 4,000 MB/s throughput (Block Express).
  - High durability and consistent latency.

Throughput-Optimized:

- st1 (Throughput-optimized HDD): Good for streaming workloads.

- sc1 (Cold HDD): Low-cost for infrequent access.

Best for high I/O: Use io2/io2 Block Express or gp3 with provisioned IOPS and throughput.
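To make the gp2 size-to-performance relationship concrete, the sketch below computes a gp2 volume's baseline IOPS. It assumes the commonly documented gp2 rule of 3 IOPS per GiB with a floor of 100 IOPS and the 16,000 IOPS ceiling listed above. The function name `gp2_baseline_iops` is made up for illustration, and current limits should be checked against the AWS documentation.

```shell
# Baseline IOPS for a gp2 volume of a given size in GiB.
# Assumption: 3 IOPS per GiB, a 100 IOPS floor, and a 16,000 IOPS ceiling.
gp2_baseline_iops() {
  size_gib="$1"
  iops=$(( size_gib * 3 ))
  if [ "$iops" -lt 100 ]; then iops=100; fi
  if [ "$iops" -gt 16000 ]; then iops=16000; fi
  echo "$iops"
}

gp2_baseline_iops 20     # small volume: held at the floor
gp2_baseline_iops 500    # mid-size volume: 3 IOPS per GiB
gp2_baseline_iops 8000   # large volume: capped at the ceiling
```

Under these assumptions, a gp2 volume has to be grown to gain IOPS, whereas gp3 lets IOPS and throughput be provisioned separately from capacity.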

2. Use EBS-Optimized EC2 Instances

- Choose EBS-optimized instances (most modern instances are by default).

- Ensure instance bandwidth is sufficient for your volume. Use instances with:
  - High network throughput (e.g., m6i, r6i, c6i, i4i, z1d, x2idn, etc.).
  - High EBS bandwidth (check AWS documentation for instance type limits).

Tip: Monitor EC2 instance EBS bandwidth caps using CloudWatch metrics.

3. Configure RAID for Higher Throughput

Combine multiple EBS volumes into a RAID array (software RAID 0) to scale IOPS/throughput:

- RAID 0 (striping): Increases performance (no redundancy).

- Useful for workloads needing more than one volume can provide.
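A rough way to size a stripe set is sketched below. It assumes ideal striping, where aggregate IOPS is the per-volume IOPS multiplied by the number of volumes, capped by the instance's own EBS limit; real arrays usually fall somewhat short of this. The function name and the example figures are illustrative and are not AWS-published values for any instance type.

```shell
# Estimated IOPS for a RAID 0 array: volumes * per-volume IOPS,
# capped by the instance-level EBS IOPS limit (all three values are inputs).
raid0_effective_iops() {
  volumes="$1"; per_volume_iops="$2"; instance_limit="$3"
  total=$(( volumes * per_volume_iops ))
  if [ "$total" -gt "$instance_limit" ]; then total="$instance_limit"; fi
  echo "$total"
}

raid0_effective_iops 4 16000 80000   # four volumes stay under the hypothetical cap
raid0_effective_iops 8 16000 80000   # eight volumes are limited by the instance
```

The second call shows why adding volumes stops helping once the instance limit is reached: the extra volumes add cost without adding usable IOPS.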

Example using mdadm on Linux:

sudo mdadm --create --verbose /dev/md0 --level=0 --name=MY_RAID --raid-devices=4 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1 /dev/nvme4n1

4. Use Nitro-based EC2 Instances

Nitro instances (e.g., c6i, m6i, r6i) offer:

- Better performance

- Higher EBS throughput

- Enhanced networking

5. Tune OS and Filesystem Settings

- Linux I/O scheduler: Use

noopordeadlinefor EBS (especially for SSDs).

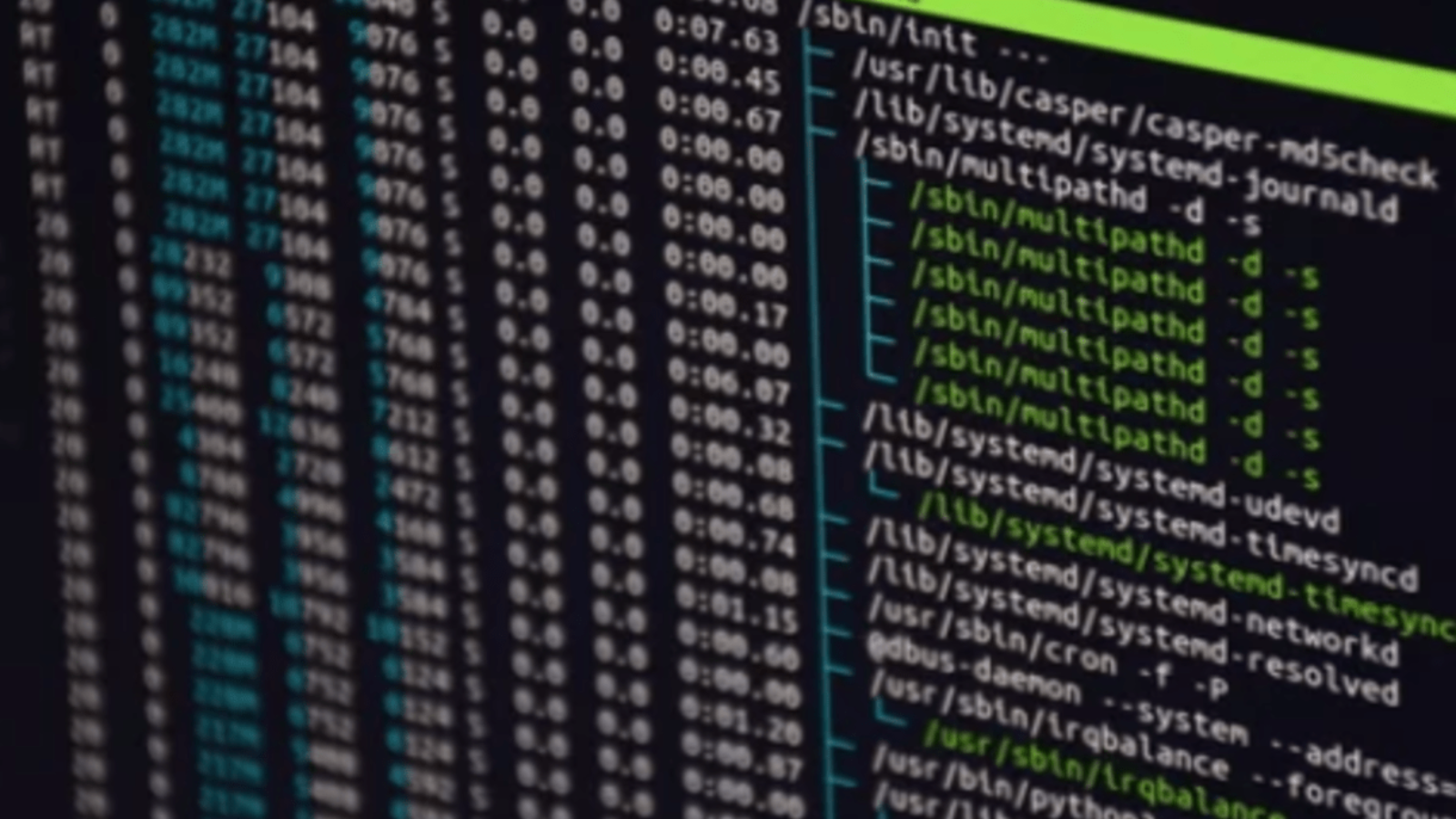

cat /sys/block/xvdf/queue/scheduler # check current scheduler

echo deadline | sudo tee /sys/block/xvdf/queue/scheduler6. Monitor Performance Metrics

Use Amazon CloudWatch to monitor:

- VolumeReadOps, VolumeWriteOps
- VolumeReadBytes, VolumeWriteBytes
- VolumeQueueLength
- BurstBalance (for gp2/st1)
- EC2-level metrics (e.g., EBSReadOps and EBSWriteOps on Nitro-based instances; DiskReadOps and DiskWriteOps cover instance store volumes only)

Use these to identify:

- Bottlenecks

- Throttling

- Overprovisioned resources
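CloudWatch reports VolumeReadOps and VolumeWriteOps as totals for each period rather than as per-second rates, so they need to be divided by the period length before being compared with provisioned IOPS. The sketch below shows that arithmetic. It assumes the inputs are the Sum statistic for one period; the function names and the sample numbers are invented for illustration.

```shell
# Average IOPS over one CloudWatch period.
# Arguments: Sum of VolumeReadOps, Sum of VolumeWriteOps, period length in seconds.
# Shell arithmetic is integer-only, so the result is rounded down.
avg_iops() {
  read_ops="$1"; write_ops="$2"; period_seconds="$3"
  echo $(( (read_ops + write_ops) / period_seconds ))
}

# Average throughput in bytes per second over the same period.
# Arguments: Sum of VolumeReadBytes, Sum of VolumeWriteBytes, period length in seconds.
avg_throughput_bps() {
  read_bytes="$1"; write_bytes="$2"; period_seconds="$3"
  echo $(( (read_bytes + write_bytes) / period_seconds ))
}

avg_iops 180000 120000 300                  # sample totals for a 5-minute period
avg_throughput_bps 300000000 150000000 300  # sample byte totals for the same period
```

If the resulting average sits close to the provisioned IOPS or throughput for long stretches, the volume is a likely bottleneck; if it stays far below, the volume may be overprovisioned.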

7. Avoid EBS Burst Limits

For gp2/st1:

- Performance is based on credits; if burst balance depletes, IOPS drops.

- Use gp3 or io2 to avoid burst limits.

- Monitor and plan for sustained workloads.
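How long a burst lasts can be estimated with simple arithmetic. The sketch below assumes the gp2 credit model as commonly documented by AWS: a bucket of 5.4 million I/O credits, a burst rate of 3,000 IOPS, and refill at the volume's baseline rate (3 IOPS per GiB, minimum 100). The function name is hypothetical, and st1 uses a different, throughput-based credit model that is not covered here.

```shell
# Seconds a gp2 volume can sustain the 3,000 IOPS burst from a full credit bucket.
# Assumption: 5,400,000 credits, drained at (3000 - baseline) credits per second.
# Prints "unlimited" when the baseline already meets the burst rate,
# meaning the volume does not depend on burst credits.
gp2_burst_seconds() {
  size_gib="$1"
  baseline=$(( size_gib * 3 ))
  if [ "$baseline" -lt 100 ]; then baseline=100; fi
  if [ "$baseline" -ge 3000 ]; then
    echo "unlimited"
  else
    echo $(( 5400000 / (3000 - baseline) ))
  fi
}

gp2_burst_seconds 100    # baseline of 300 IOPS
gp2_burst_seconds 1000   # baseline already matches the burst rate
```

Under these assumptions a 100 GiB gp2 volume can hold its burst for 2,000 seconds, a little over half an hour, before dropping to 300 IOPS. That is the kind of cliff a sustained workload should be planned around.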

8. Use EBS Multi-Attach (if applicable)

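For shared-disk architecture (e.g., clustered databases), use io1/io2 Multi-Attach (attach a single volume to multiple instances). The instances must be in the same Availability Zone as the volume, and the application or a cluster-aware file system must coordinate concurrent writes.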

9. Leverage EBS Fast Snapshot Restore (FSR)

If you’re launching volumes from snapshots:

- Enable Fast Snapshot Restore for low-latency, high-speed volume initialization.

| Setting | Recommended for High I/O |
|---|---|
| Volume Type | io2, io2 Block Express, gp3 with provisioned IOPS |
| RAID | Use RAID 0 for performance (no redundancy) |
| Instance Type | Nitro-based (e.g., m6i, c6i, i4i) |
| OS Scheduler | noop or deadline (none or mq-deadline on newer kernels) |
| Monitoring | CloudWatch: IOPS, throughput, queue length |
| Filesystem | ext4, XFS |

Conclusion.

In conclusion, optimizing Amazon EBS performance for high I/O workloads is essential for ensuring that cloud-based applications run efficiently, reliably, and at scale. With the diverse range of EBS volume types provided by AWS, including gp3 and io2/io2 Block Express, users have the flexibility to tailor their storage configuration to match the specific demands of their workloads. However, selecting the right volume type is only the first step.

True optimization requires a holistic approach that includes choosing the right EC2 instance types, enabling EBS-optimized networking, leveraging RAID configurations where necessary, and fine-tuning operating system and file system settings.

Monitoring is equally critical: without insight into key performance metrics such as IOPS, throughput, latency, and queue length, performance degradation can go unnoticed until it impacts application availability or user experience.

Additionally, understanding the limitations of burst-based volumes like gp2 and planning for sustained performance using gp3 or io2 helps avoid bottlenecks during peak workloads. Advanced features such as Multi-Attach and Fast Snapshot Restore further enhance the flexibility and performance of EBS in complex, high-demand environments.

Ultimately, success lies in a proactive and informed strategy, one that adapts to changing workload patterns, incorporates best practices, and uses AWS tools and services to their full potential. When implemented effectively, EBS performance tuning not only ensures the smooth functioning of high I/O workloads but also maximizes the return on investment in AWS infrastructure, supporting mission-critical applications with the speed, consistency, and reliability they require.

When Should You Use AWS MGN? Use Cases & Limitations.

What is MGN.

AWS MGN, short for AWS Application Migration Service, is a fully managed cloud migration service provided by Amazon Web Services that enables users to lift and shift physical, virtual, or cloud-based servers into the AWS Cloud with minimal downtime and without requiring any manual conversions or code changes.

It is designed to simplify and accelerate the migration process by automating the replication of entire servers, including operating systems, applications, and data, into the AWS environment. This eliminates the need for complex re-architecting or extensive planning commonly associated with cloud migrations.

AWS MGN continuously replicates source servers to a low-cost staging area within the AWS account, ensuring up-to-date copies of workloads. Once the servers are replicated, users can launch them as Amazon EC2 instances, test their functionality, and cut over to AWS production systems with minimal disruption.

The service supports Windows and Linux operating systems and is capable of migrating workloads from on-premises data centers, VMware, Hyper-V, or even from other cloud providers. AWS MGN uses block-level replication to ensure high fidelity and consistency of data, which makes it ideal for critical applications that require accuracy during migration.

It offers built-in testing and cutover orchestration, allowing users to verify their applications in AWS before fully switching over, thereby reducing the risk of errors during go-live events. The interface is integrated into the AWS Management Console, making it accessible and user-friendly even for teams with limited AWS experience.

One of the core strengths of AWS MGN is its ability to scale large migration projects by allowing organizations to migrate dozens, hundreds, or even thousands of servers in a repeatable and streamlined manner.

Organizations undergoing data center evacuations, cloud consolidation, or digital transformation often turn to MGN as their preferred solution for rehosting workloads quickly and reliably. Since the service replicates entire servers, it supports legacy applications and systems that cannot be easily containerized or rearchitected, making it a practical option for maintaining business continuity during cloud adoption.

In terms of security, AWS MGN leverages AWS’s built-in capabilities, such as IAM (Identity and Access Management), encryption in transit and at rest, and VPC configurations, ensuring data protection throughout the migration lifecycle.

After the migration, organizations can optimize their EC2 instances, apply modernization strategies, or integrate their systems with other AWS services. Despite its many advantages, AWS MGN is best suited for rehosting scenarios and is not intended for migrations requiring application refactoring or real-time data synchronization. For these, other tools like AWS DMS (Database Migration Service) or App2Container may be more appropriate.

AWS MGN is a robust, cost-effective, and time-saving migration tool that empowers organizations to move workloads to AWS seamlessly. It supports a wide range of operating systems and infrastructure types, offering a consistent, repeatable migration process with minimal disruption to business operations. AWS MGN helps reduce operational risk by enabling test launches, automates critical migration steps, and integrates with AWS best practices. As cloud adoption continues to grow, AWS MGN remains a pivotal service for enterprises looking to transition efficiently to AWS without rewriting their applications or disrupting critical services.

When Should You Use AWS MGN?

Primary Use Cases

- Lift-and-Shift Migrations (Rehosting)
  - Goal: Move workloads with minimal/no refactoring.
  - Use Case: Quickly migrate servers and applications to AWS to reduce on-premises costs or to meet a cloud adoption deadline.

- Data Center Evacuations
  - Goal: Shut down an entire data center by moving workloads to the cloud.
  - Use Case: A business wants to eliminate on-prem infrastructure and migrate everything to AWS.

- Cloud-to-Cloud Migrations
  - Goal: Move workloads from other cloud providers (e.g., Azure, GCP) to AWS.
  - Use Case: A company is consolidating its workloads on AWS.

- Business Continuity & Disaster Recovery Setup
  - Goal: Use AWS as a backup environment.
  - Use Case: MGN is used to replicate on-prem servers to AWS as a fallback DR site. For ongoing disaster recovery, AWS Elastic Disaster Recovery is the purpose-built service.

- Legacy App Migration
  - Goal: Migrate apps that can’t be easily containerized or rewritten.
  - Use Case: Legacy Windows/Linux applications that need to stay intact in the cloud.

- Test Environments in the Cloud
  - Goal: Use AWS to create temporary testing environments.
  - Use Case: Migrate production clones to AWS for testing patches or updates without impacting live systems.

When Not to Use AWS MGN

Limitations & Better Alternatives

- You Need Refactoring or Replatforming
  - Reason: AWS MGN doesn’t support application transformation.
  - Use AWS Migration Hub, Elastic Beanstalk, or App2Container for modernizing workloads.

- Container-Based Workloads
  - Reason: MGN is designed for VM and physical servers, not containers.
  - Use ECS, EKS, or App2Container.

- Migrate Only Data, Not Entire Servers
  - Reason: MGN migrates entire systems, not just files or databases.
  - Use AWS DataSync, Snowball, or Database Migration Service (DMS).

- Real-Time or Continuous Synchronization Use Cases
  - Reason: MGN replicates continuously only until cutover; it is built for one-time migrations, not long-term, ongoing synchronization.
  - Use AWS DataSync or a third-party tool for ongoing sync.

- Unsupported Operating Systems
  - Reason: MGN supports many OS types, but not all.
  - Check compatibility; unsupported systems may require manual migration or third-party tools.

How It Works (Brief Overview)

- Install MGN Agent on source servers (on-prem, cloud, or virtual).

- Continuous replication to a staging area in AWS.

- Launch test instances to validate.

- Cut over to AWS with minimal downtime.

- Decommission source environment (optional).

Summary Table

| Use Case | Use AWS MGN? | Alternatives |
|---|---|---|
| Lift-and-shift server migration | Yes | – |
| Data center shutdown | Yes | – |
| Container-based apps | No | ECS, EKS, App2Container |
| App modernization | No | Beanstalk, Lambda, App2Container |
| Migrate databases only | No | AWS DMS |
| Disaster recovery setup | Yes | AWS Elastic Disaster Recovery |
| Ongoing data sync | No | AWS DataSync |

Conclusion.

AWS MGN (Application Migration Service) is a powerful, fully managed tool that simplifies and accelerates the process of migrating servers to the AWS Cloud. It enables organizations to perform seamless lift-and-shift migrations with minimal downtime, no need for code changes, and high reliability.

Ideal for migrating legacy systems, large-scale environments, or workloads that must remain intact, MGN provides an efficient path to cloud adoption while reducing manual effort and risk.

Although it’s not designed for application modernization or container migrations, it plays a critical role in cloud migration strategies where speed, simplicity, and system integrity are priorities. By using AWS MGN, businesses can move to the cloud faster, reduce on-premises costs, and lay the foundation for future cloud-native innovation.

10 Essential Linux Commands Every New User Should Know.

Introduction.

Linux is a powerful, open-source operating system widely used for servers, development, cybersecurity, and personal computing.

Whether you’re managing files, navigating the system, or installing software, Linux provides a command-line interface (CLI) that offers precision and control.

For beginners, learning a core set of commands is essential to becoming comfortable with the terminal.

These commands allow you to perform everyday tasks such as browsing directories, handling files, and managing permissions.

The terminal may seem intimidating at first, but it’s a highly efficient tool once you understand its basics.

Compared to graphical interfaces, it often requires fewer resources and provides more direct control over the system.

The following 10 commands form the foundation of daily Linux use.

They are commonly supported across all major distributions like Ubuntu, Fedora, Debian, and CentOS.

With just a handful of commands, you can navigate the filesystem, create or delete files and folders, and execute administrative tasks.

Mastering these will not only increase your productivity but also deepen your understanding of how Linux systems work.

Each command usually comes with various options (called flags) that modify its behavior.

You can always use the man command (short for manual) to learn more about how each command works.

In this guide, you’ll learn what each command does, how to use it, and why it’s important.

We’ve also included example usage so you can try them out yourself.

As you become familiar with these commands, you’ll gain confidence in working within the terminal.

Eventually, you’ll be able to automate tasks, troubleshoot problems, and manage your system more effectively.

Whether you’re an aspiring developer, system administrator, or casual Linux user, this knowledge is indispensable.

Learning these commands is your first step toward becoming fluent in Linux.

So open your terminal and follow along.

You’re about to unlock the full power of your Linux system.

Let’s get started with the top 10 essential commands every new user should know.

1. pwd – Print Working Directory

Displays the current directory you’re in.

pwd

2. ls – List Directory Contents

Lists files and directories in the current directory.

ls # basic listing

ls -l # long format with permissions

ls -a # includes hidden files

3. cd – Change Directory

Used to move between directories.

cd /home/username # go to specific path

cd .. # move up one directory

cd ~ # go to your home directory

4. mkdir – Make Directory

Creates a new directory.

mkdir new_folder

5. touch – Create an Empty File

Creates a new, empty file or updates the timestamp on an existing one.

touch file.txt

6. cp – Copy Files or Directories

Copies files or directories.

cp file1.txt file2.txt # copy file

cp -r dir1/ dir2/ # copy directory recursively

7. mv – Move or Rename Files

Moves or renames files and directories.

mv oldname.txt newname.txt # rename

mv file.txt /home/user/ # move file

8. rm – Remove Files or Directories

Deletes files or directories.

rm file.txt # remove file

rm -r folder/ # remove directory recursively

rm -rf folder/ # force remove (⚠️ dangerous!)

9. man – Manual Pages

Displays help and usage for other commands.

man ls # view help for 'ls'

(Press q to quit the manual.)

10. sudo – Superuser Do

Runs a command with elevated (admin) privileges.

sudo apt update # refresh package lists with root privileges (Debian/Ubuntu)
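Several of the commands above can be combined into a short practice session. The sketch below works inside a throwaway directory created with `mktemp -d`, so no real files are touched; the variable name `workdir` is an arbitrary choice, and the file and folder names simply reuse those from the earlier examples.

```shell
# Practice run in a temporary directory so nothing important is affected.
workdir="$(mktemp -d)"
cd "$workdir"

pwd                                             # show the current directory
mkdir new_folder                                # create a directory
touch new_folder/file.txt                       # create an empty file
cp new_folder/file.txt new_folder/copy.txt      # copy it
mv new_folder/copy.txt new_folder/renamed.txt   # rename the copy
ls -la new_folder                               # long listing, hidden entries included
rm new_folder/file.txt                          # delete the original
ls new_folder                                   # only renamed.txt remains
```

When you are done, leave the directory (for example with `cd ~`) and delete it with `rm -r` followed by the path that `pwd` printed.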

Conclusion.

Learning Linux might seem overwhelming at first, but starting with these 10 essential commands gives you a strong foundation.

They allow you to navigate the file system, manage files and directories, execute commands with administrative privileges, and access help when you need it.

As you practice and use these commands regularly, the command line will become less intimidating and far more powerful.

You’ll start to understand how Linux works under the hood and how to interact with it efficiently.

Remember, the man command is your friend; never hesitate to explore it when you’re unsure about a command’s usage.

The more you experiment, the more comfortable and capable you’ll become.

These basics open the door to more advanced topics like scripting, system administration, package management, and server operations.

Whether you’re pursuing a career in tech or simply looking to make the most of your Linux system, mastering these commands is the first critical step.

Keep practicing, stay curious, and don’t be afraid to break things; that’s one of the best ways to learn in Linux.

Happy coding!

DevOps Explained: Principles, Practices, and Tools.

What is DevOps?

DevOps is a compound of two words: Development and Operations. It represents a cultural, philosophical, and technical movement that bridges the gap between software development teams and IT operations teams.

Traditionally, these two groups worked in silos: developers wrote code and handed it off to operations to deploy and manage.

This handoff often caused delays, miscommunication, and production issues due to the lack of shared responsibilities. DevOps emerged as a response to these challenges, aiming to unify both functions with the common goal of delivering software quickly, reliably, and sustainably.

At its core, DevOps is about collaboration, automation, and continuous improvement. It encourages a culture where developers, testers, operations engineers, and other stakeholders work together throughout the software development lifecycle (SDLC).

This end-to-end collaboration leads to faster development cycles, more frequent deployments, better product quality, and a more stable production environment.

One of the key enablers of DevOps is automation. DevOps promotes the use of tools and scripts to automate repetitive and error-prone tasks such as code integration, testing, deployment, infrastructure provisioning, and monitoring.

By automating these processes, teams can reduce manual errors, increase deployment speed, and focus more on innovation and value creation. Automation also facilitates Continuous Integration (CI) and Continuous Delivery (CD), which are foundational practices in DevOps.

Continuous Integration involves developers frequently merging their code changes into a shared repository. Each integration is verified by automated builds and tests, ensuring that the software remains functional and free of integration issues.

Continuous Delivery, on the other hand, ensures that the software is always in a releasable state. Code can be automatically pushed through various testing stages and into production with minimal human intervention.
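The fail-fast behaviour at the heart of CI can be sketched in a few lines of shell. This is only an illustration: the stage names are arbitrary, the placeholder commands `true` and `false` stand in for a project's real build and test commands, and real pipelines are normally defined in a CI tool's own configuration format rather than in a script like this.

```shell
# Run one named stage; report the result and propagate failure.
run_stage() {
  stage_name="$1"; shift
  echo "== $stage_name"
  if "$@"; then
    echo "ok: $stage_name"
  else
    echo "FAILED: $stage_name"
    return 1
  fi
}

# A pipeline is a chain of stages joined with &&, so the first failure stops the rest.
# "true" and "false" are placeholders for real build, test, and package commands.
passing_pipeline() { run_stage build true && run_stage test true && run_stage package true; }
failing_pipeline() { run_stage build true && run_stage test false && run_stage package true; }

passing_pipeline
failing_pipeline || echo "pipeline stopped before packaging"
```

Because the stages are chained with `&&`, a failing test stage prevents the package stage from running at all. This is the property that keeps broken changes from moving further down the delivery path.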

Another major concept in DevOps is Infrastructure as Code (IaC). Instead of manually configuring infrastructure components, DevOps teams define them in code using tools like Terraform or AWS CloudFormation.

This approach brings version control, repeatability, and scalability to infrastructure management, making it easier to provision and maintain consistent environments across development, testing, and production.

Monitoring and logging are also central to DevOps. They provide visibility into the health and performance of systems and applications.

By continuously monitoring applications and collecting log data, teams can detect anomalies, respond to incidents faster, and proactively address potential issues before they impact users. This feedback loop enhances system reliability and informs future development decisions.

DevOps also supports modern software architectures, such as microservices. In a microservices approach, applications are broken down into smaller, independent services that can be developed, deployed, and scaled independently.

DevOps practices align perfectly with microservices by enabling frequent updates and isolated deployments without affecting the entire system.

Security is another important aspect of DevOps, leading to the evolution of DevSecOps. This approach integrates security into the DevOps pipeline from the beginning rather than treating it as an afterthought.

By automating security checks and incorporating them into CI/CD workflows, teams can identify vulnerabilities early and ensure compliance without slowing down development.

While tools are essential in implementing DevOps, the true value lies in the cultural shift it demands. It requires organizations to embrace change, break down barriers, and foster a mindset of shared responsibility and continuous learning. Success in DevOps is not just about adopting tools but also about transforming how people work together and deliver software.

DevOps is a holistic approach to software delivery that emphasizes collaboration, automation, and agility. It enables organizations to build better software, faster and more reliably, while also enhancing customer satisfaction and business outcomes.

Whether you’re a startup or a large enterprise, adopting DevOps can lead to significant improvements in productivity, system uptime, and time to market. It is not a one-size-fits-all solution, but a journey of continuous evolution in how we build, deploy, and manage software.

Core Principles of DevOps

- Collaboration and Communication
  - Breaks down silos between development and operations teams.
  - Encourages shared responsibilities, goals, and feedback loops.

- Automation
  - Automates repetitive tasks such as code integration, testing, deployment, and infrastructure provisioning.

- Continuous Improvement
  - Focus on frequent iterations, learning from failures, and refining processes.

- Customer-Centric Action
  - Aligns technical tasks with customer needs and feedback.

- End-to-End Responsibility
  - Teams own applications from development through to production and maintenance.

- Lean Thinking
  - Eliminates waste, optimizes workflows, and maximizes value delivery.

Key DevOps Practices

- Continuous Integration (CI)
  - Developers merge code changes frequently.
  - Automated builds and tests validate changes to catch issues early.

- Continuous Delivery (CD)
  - Ensures code is always in a deployable state.
  - Automates testing and deployment to staging/production environments.

- Infrastructure as Code (IaC)
  - Manages infrastructure using code and automation.
  - Enables consistent environments and repeatable deployments.

- Monitoring and Logging
  - Tracks system performance and logs for quick detection and resolution of issues.

- Configuration Management
  - Maintains consistency of systems and applications using automated configuration tools.

- Microservices Architecture
  - Breaks applications into loosely coupled, independently deployable services.

- Version Control
  - Tracks changes in code and configuration files (typically using Git).

Popular DevOps Tools

| Category | Tools |
|---|---|
| Version Control | Git, GitHub, GitLab, Bitbucket |
| CI/CD | Jenkins, GitLab CI, GitHub Actions, CircleCI, Travis CI, Azure DevOps |
| IaC | Terraform, AWS CloudFormation, Pulumi |
| Configuration Management | Ansible, Puppet, Chef, SaltStack |
| Containerization | Docker, Podman |
| Container Orchestration | Kubernetes, OpenShift, Docker Swarm |
| Monitoring & Logging | Prometheus, Grafana, ELK Stack (Elasticsearch, Logstash, Kibana), Splunk |
| Collaboration | Slack, Microsoft Teams, Jira, Confluence |
| Security (DevSecOps) | SonarQube, Snyk, Aqua Security, Twistlock |

Benefits of DevOps

- Faster delivery of features and updates

- Improved deployment frequency and reliability

- Enhanced collaboration between teams

- Greater system stability and faster recovery

- Better resource utilization and cost efficiency

Challenges in Adopting DevOps

- Cultural resistance to change

- Complexity of tools and integration

- Security concerns in automation

- Skills and knowledge gaps

- Managing legacy systems

Conclusion

DevOps is more than just a set of tools; it’s a cultural shift that fosters collaboration, automation, and continuous improvement across the software development lifecycle. By embracing DevOps principles and practices, organizations can achieve faster, more reliable, and customer-centric software delivery.

Top 10 AWS IAM Security Best Practices You Must Follow.

Introduction.

In today’s cloud-driven world, securing your cloud infrastructure is no longer optional; it’s a strategic necessity. Amazon Web Services (AWS), being the most widely adopted cloud platform, offers a robust suite of tools to manage access and control over your resources.

At the heart of AWS security lies IAM (Identity and Access Management), a powerful service that enables administrators to define who can access what, when, and under what conditions. Despite its flexibility and capabilities, IAM can become a serious vulnerability if misconfigured.

Many data breaches and account compromises stem from weak access control policies, excessive privileges, or unmanaged identities. Organizations must therefore adopt a proactive and disciplined approach to IAM.

This begins with understanding the potential risks associated with identity and access mismanagement and recognizing the role IAM plays in mitigating those risks.

Whether you’re managing a small startup environment or a complex multi-account enterprise structure, IAM is foundational to securing your AWS footprint. It governs authentication (who you are) and authorization (what you can do), making it essential for everything from S3 bucket permissions to EC2 instance control, from Lambda execution roles to cross-account access.

Because IAM touches virtually every AWS service, a single misstep can have widespread and cascading consequences. For instance, providing overly broad permissions to users or applications can unintentionally grant access to sensitive data or allow unauthorized modifications to critical resources.

Conversely, being overly restrictive can hamper operations and reduce productivity. The key lies in finding the right balance: implementing fine-grained access control while maintaining operational agility.

As your environment grows, so does the complexity of managing users, roles, groups, and policies. Manual oversight is no longer sufficient. Best practices such as enabling Multi-Factor Authentication (MFA), following the principle of least privilege, and rotating access credentials regularly become critical components of a well-rounded security strategy.

Additionally, integrating automated monitoring through services like AWS CloudTrail and IAM Access Analyzer provides real-time insights into who is accessing what and helps flag anomalies before they escalate into breaches. Security is not a one-time configuration but a continuous process. IAM must be reviewed, refined, and enforced as your architecture evolves.

Moreover, IAM is not just a technical issue; it’s also a human and organizational one. Teams need proper training, developers need secure defaults, and auditors need visibility. By adopting a set of clear, actionable, and scalable IAM best practices, organizations can reduce their attack surface, ensure compliance, and build trust with customers and stakeholders.

In this article, we explore the top 10 AWS IAM Security Best Practices every cloud architect, DevOps engineer, and security professional should implement to protect their AWS environment. From securing root accounts and managing access keys to leveraging service control policies and auditing unused permissions, these practices form the backbone of a secure, scalable, and resilient cloud infrastructure.

1. Enable MFA (Multi-Factor Authentication) for All Users

- Enforce MFA for all IAM users, especially for privileged accounts (like the root user).

- Use hardware MFA for highly sensitive accounts.

2. Avoid Using the Root Account

- Only use the AWS root account for initial setup and critical administrative tasks.

- Create individual IAM users with the required permissions instead.

- Secure the root account with strong MFA and lock away credentials.

3. Follow the Principle of Least Privilege

- Grant users and roles only the permissions they need to perform their tasks.

- Use IAM policies with fine-grained permissions, and regularly review them.

4. Use IAM Roles Instead of IAM Users Where Possible

- Prefer IAM roles for applications, services, and cross-account access.

- A role has no long-term credentials of its own; assuming it yields short-lived credentials that expire automatically, reducing the risk of key leakage.

5. Rotate Access Keys Regularly

- If access keys are in use, rotate them periodically.

- Monitor their usage and disable/delete unused keys.
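As a rough illustration of the rotation check above, the sketch below flags active keys older than a threshold. The 90-day limit, the function name, and the sample key IDs are assumptions chosen for the example; the input dictionaries mirror the `AccessKeyMetadata` entries that boto3's `list_access_keys` returns, but no AWS call is made here.

```python
from datetime import datetime, timedelta, timezone


def find_stale_keys(key_metadata, max_age_days=90, now=None):
    """Return IDs of active access keys older than max_age_days.

    key_metadata: list of dicts with 'AccessKeyId', 'Status', and
    'CreateDate' (a timezone-aware datetime).
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    return [
        key["AccessKeyId"]
        for key in key_metadata
        if key["Status"] == "Active" and key["CreateDate"] < cutoff
    ]


# Hypothetical sample data; real input would come from the IAM API.
sample_now = datetime(2024, 6, 1, tzinfo=timezone.utc)
sample_keys = [
    {"AccessKeyId": "AKIAEXAMPLEOLD", "Status": "Active",
     "CreateDate": datetime(2023, 1, 1, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAEXAMPLENEW", "Status": "Active",
     "CreateDate": datetime(2024, 5, 20, tzinfo=timezone.utc)},
    {"AccessKeyId": "AKIAEXAMPLEOFF", "Status": "Inactive",
     "CreateDate": datetime(2022, 1, 1, tzinfo=timezone.utc)},
]
stale = find_stale_keys(sample_keys, max_age_days=90, now=sample_now)
print(stale)
```

Inactive keys are skipped here because they can no longer be used; a real audit would probably list them separately as candidates for deletion.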

6. Use Policy Conditions to Tighten Access

- Apply conditions (e.g., IP range, time of day, MFA requirement) in your IAM policies.

- This adds an extra layer of control over who can do what, when, and from where.
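To make the idea of policy conditions concrete, here is a minimal sketch that builds an IAM policy document as a Python dictionary. The helper name, bucket ARN, and CIDR range are hypothetical; `aws:SourceIp` and `aws:MultiFactorAuthPresent` are standard global condition keys. Multiple condition operators in one statement are combined with AND, so both must hold for the Allow to apply.

```python
import json


def build_conditional_policy(actions, resources, allowed_cidrs):
    """Allow the given actions only with MFA and from the given CIDR ranges."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowFromTrustedNetworkWithMfa",
                "Effect": "Allow",
                "Action": list(actions),
                "Resource": list(resources),
                "Condition": {
                    # Request must originate from one of these ranges...
                    "IpAddress": {"aws:SourceIp": list(allowed_cidrs)},
                    # ...and the caller must have authenticated with MFA.
                    "Bool": {"aws:MultiFactorAuthPresent": "true"},
                },
            }
        ],
    }


# Hypothetical values for illustration only.
policy = build_conditional_policy(
    actions=["s3:GetObject"],
    resources=["arn:aws:s3:::example-bucket/*"],
    allowed_cidrs=["203.0.113.0/24"],
)
print(json.dumps(policy, indent=2))
```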

7. Use AWS Managed Policies with Caution

- AWS provides managed policies, but they may be overly permissive.

- Prefer custom policies tailored to your organization’s specific access needs.

8. Monitor IAM Activity with CloudTrail and IAM Access Analyzer

- Enable AWS CloudTrail to log and monitor IAM activity.

- Use IAM Access Analyzer to identify unused permissions and external access risks.

9. Remove Unused Users, Roles, and Permissions

- Conduct regular audits to remove stale IAM entities and unused permissions.

- Use IAM access advisor (service last accessed information) to identify services that an IAM user or role has not used.

10. Enable Service Control Policies (SCPs) in AWS Organizations

- Use SCPs to set permission guardrails at the organizational or account level.

- Helps enforce consistent security practices across multiple AWS accounts.

Conclusion.

In conclusion, AWS Identity and Access Management (IAM) plays a pivotal role in the overall security posture of your cloud environment. While AWS provides the tools and frameworks to enforce secure access control, the responsibility of proper configuration lies with the user.

Mismanagement of IAM, whether through excessive privileges, outdated credentials, or the use of the root account for daily operations, can open doors to vulnerabilities that attackers are more than ready to exploit. This is why it is crucial to follow a structured set of best practices that not only protect your environment from external threats but also reduce internal risks and operational errors.

The top 10 IAM best practices we discussed, from enforcing Multi-Factor Authentication (MFA) and eliminating root account usage to applying the principle of least privilege and regularly rotating access keys, form the backbone of a secure AWS deployment.

Each best practice contributes to building a layered security model, where no single point of failure can compromise the entire system. Implementing IAM roles instead of long-lived credentials, auditing access patterns with CloudTrail and Access Analyzer, and tightening permissions using policy conditions and Service Control Policies (SCPs) are all part of creating a proactive and future-proof access management strategy.

But security is not a one-and-done task. The landscape is constantly evolving, with new services, features, and threats emerging regularly. Organizations must therefore treat IAM security as a continuous process, not a checklist.

Regular reviews of users, policies, and permissions, combined with automated monitoring and alerting, will ensure that your environment remains compliant, secure, and aligned with industry best practices. Furthermore, as teams grow and roles become more dynamic, ensuring that everyone is educated on secure IAM usage is equally critical.

Good policy design, backed by strong governance and clear operational boundaries, reduces the likelihood of missteps.

AWS IAM security is about more than just locking down access; it’s about enabling innovation safely. With the right safeguards in place, your teams can move fast and scale confidently, knowing that the underlying infrastructure is protected by thoughtful, well-enforced access control. By internalizing and applying these best practices, you lay the groundwork for a cloud environment that is not only efficient and scalable but also resilient and secure by design.

Multi-Account Strategies: How to Design Your AWS Landing Zone with AWS Organizations.

What Is a Landing Zone?

A Landing Zone in AWS is a pre-configured, secure, and scalable baseline environment that provides a foundational setup for cloud adoption within an organization.

It acts as the starting framework for deploying and operating multiple AWS accounts in a standardized and governed manner.

The core purpose of a Landing Zone is to ensure that every new workload or team entering the AWS environment inherits the necessary controls, security configurations, and operational best practices from the start.

At its essence, a Landing Zone incorporates automation, guardrails, identity management, logging, monitoring, and network architecture, all designed to reduce risk and enable scale.

It’s not a single product, but rather a design approach often built using AWS Organizations, AWS Control Tower, IAM, Service Control Policies (SCPs), AWS Config, and other governance tools.

A well-architected Landing Zone supports centralized and decentralized models, letting teams operate independently while maintaining organizational standards.

It separates workloads into different AWS accounts to improve security, isolate failures, simplify billing, and enforce policy boundaries. It also defines account provisioning processes, naming conventions, baseline configurations, and tagging strategies.

A Landing Zone aligns technology deployment with enterprise governance, ensuring consistency, compliance, and scalability from day one.

Whether implemented through AWS Control Tower or custom automation, it is a critical component for organizations aiming to manage cloud environments with control and agility.

Why Go Multi-Account?

1. Isolation of Workloads

Using multiple AWS accounts allows you to isolate workloads by application, environment (dev, test, prod), or team. This isolation improves security by limiting the blast radius of incidents: issues in one account don’t impact others. It also enhances fault tolerance and compliance, as sensitive data or regulated workloads can be kept separate.

Each account has its own resources, policies, and identity controls, making it easier to enforce least-privilege access. This separation also simplifies monitoring and auditing. By isolating workloads, you gain clearer boundaries, better control, and a more resilient architecture overall.

2. Security and Compliance

A multi-account strategy strengthens security and simplifies compliance by enforcing clear boundaries between workloads.

Sensitive applications or regulated data can reside in dedicated accounts with stricter controls. Service Control Policies (SCPs) allow organizations to enforce high-level restrictions, such as disabling specific services or regions.

Centralized logging and security tooling in separate accounts ensures tamper-proof auditing. Role-based access and identity federation become easier to manage when accounts are scoped by function or environment.

Compliance audits are streamlined, as evidence collection and scope definition are clearer. Overall, the model reduces risk, enforces governance, and supports industry standards like HIPAA, PCI, or ISO 27001.

3. Billing and Cost Transparency

Using separate AWS accounts for different teams, projects, or environments enables precise tracking of cloud spending. Each account generates its own usage and billing data, making it easier to attribute costs to the right stakeholders.

This visibility supports better budgeting, forecasting, and accountability across the organization. AWS Organizations offers consolidated billing, which combines all accounts under a single payment while still maintaining itemized cost breakdowns.

Teams can set their own budgets and receive alerts when spending exceeds thresholds. Tags can supplement account-level tracking for more granular reporting. This clarity encourages cost optimization and eliminates disputes over shared charges.

4. Scalability and Delegated Administration

A multi-account structure supports organizational growth by enabling scalable cloud governance. As teams expand, managing everything from a single account becomes impractical and risky.

By assigning each team or business unit its own AWS account, you can delegate administrative control while maintaining centralized oversight through AWS Organizations. This model allows teams to innovate independently without waiting on a central admin for every change.

Guardrails like SCPs ensure that delegated autonomy doesn’t compromise security or compliance. The model also simplifies account lifecycle management: teams can be onboarded or decommissioned with minimal impact on others. In essence, it balances agility with control.

AWS Organizations: The Foundation of Your Landing Zone

AWS Organizations allows you to create and manage multiple AWS accounts from a central location. It provides:

- Organizational Units (OUs): Hierarchical groups of accounts for logical separation.

- Service Control Policies (SCPs): Organization-level policies that cap the maximum permissions available in member accounts. They restrict what can be done but never grant permissions themselves.

- Account Vending: Automated provisioning of new accounts with predefined settings and governance, typically built on the Organizations account-creation API or AWS Control Tower Account Factory.

Designing a Multi-Account Landing Zone: Key Theoretical Pillars

1. Organizational Structure Design

Design your OUs around clear patterns:

- By environment (e.g., Dev, Test, Prod)

- By function (e.g., Security, Networking, Shared Services)

- By business unit or project

2. Guardrails via SCPs

SCPs are preventive, not detective. They don’t grant permissions; they limit what IAM policies in the affected accounts can allow. For instance:

- Deny use of regions not allowed

- Prevent use of specific services (e.g., expensive GPU services in dev accounts)
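The region guardrail mentioned above can be sketched as an SCP document built in Python. This is an illustrative outline, not a vetted production policy: the helper name, the allowed regions, and the list of exempted global services are all assumptions, while `aws:RequestedRegion` is a standard condition key. Global services are commonly exempted through `NotAction` so that a region restriction does not block them.

```python
import json


def build_region_deny_scp(allowed_regions, exempt_actions=()):
    """Build an SCP that denies requests outside the allowed regions."""
    statement = {
        "Sid": "DenyOutsideAllowedRegions",
        "Effect": "Deny",
        "Resource": "*",
        "Condition": {
            "StringNotEquals": {"aws:RequestedRegion": list(allowed_regions)}
        },
    }
    if exempt_actions:
        # Deny everything except the exempted (typically global) actions.
        statement["NotAction"] = list(exempt_actions)
    else:
        statement["Action"] = "*"
    return {"Version": "2012-10-17", "Statement": [statement]}


# Hypothetical region list and exemptions for illustration.
scp = build_region_deny_scp(
    allowed_regions=["eu-west-1", "eu-central-1"],
    exempt_actions=["iam:*", "organizations:*", "sts:*"],
)
print(json.dumps(scp, indent=2))
```

Because an SCP only filters permissions, accounts under this policy still need IAM policies that allow the actions they use inside the permitted regions.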

3. Security as a Foundation, Not a Layer

Designate a Security OU with accounts dedicated to:

- Security tooling (e.g., GuardDuty, AWS Security Hub)

- Logging (e.g., centralized CloudTrail, VPC flow logs)

- Auditing and compliance monitoring

4. Account Vending and Automation

Use tools like AWS Control Tower or custom automation pipelines (via Terraform, AWS CDK) to standardize account provisioning.

5. Networking Strategy

Create a Shared Services account to centralize VPCs, Transit Gateways, and Route 53 zones. Use AWS Resource Access Manager (RAM) to share resources across accounts.

6. Cost and Tag Governance

Enable Consolidated Billing, enforce tagging standards, and use AWS Budgets and Cost Explorer for visibility.
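Enforcing a tagging standard usually comes down to checking each resource's tags against a required set. The sketch below shows one way to do that check; the required tag names and the function name are hypothetical and stand in for whatever standard your organization defines.

```python
# Hypothetical tagging standard; substitute your organization's own keys.
REQUIRED_TAGS = ("CostCenter", "Environment", "Owner")


def missing_tags(resource_tags, required=REQUIRED_TAGS):
    """Return the required tag keys that are absent or blank on a resource."""
    present = {key for key, value in resource_tags.items() if value and value.strip()}
    return sorted(tag for tag in required if tag not in present)


print(missing_tags({"Owner": "team-a", "Environment": ""}))
```

A check like this could run in an account-provisioning pipeline or a periodic audit job, reporting resources whose result is non-empty.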

Closing Thoughts

Designing a Landing Zone with AWS Organizations is less about technical implementation and more about strategic foresight. It’s a balance of agility, governance, and scalability. Think of it as laying the bedrock of your cloud presence: get the theory right, and the execution becomes a matter of automation.

By investing early in a multi-account strategy and embracing AWS’s organizational tooling, you position your teams to move faster, safer, and smarter in the cloud.

The 10-Minute CI/CD Pipeline: How We Made Builds Blazing Fast

Introduction.

In today’s fast-paced software development world, speed is everything. Customers expect frequent updates, rapid feature delivery, and quick bug fixes. To meet these demands, teams rely heavily on Continuous Integration and Continuous Deployment (CI/CD) pipelines to automate building, testing, and releasing their software.

Ideally, CI/CD pipelines act as the engines driving efficient and reliable software delivery. However, in practice, many teams find their pipelines slowing down their workflows instead of speeding them up. Long build times, flaky tests, inefficient resource usage, and complex pipeline configurations all contribute to bottlenecks that disrupt developer productivity and delay time-to-market.

When a pipeline takes 30, 40, or even 60 minutes to complete, developers are forced to wait, context-switch, or postpone commits, which breaks their flow and slows innovation. Such delays increase frustration, reduce morale, and ultimately impact the quality and competitiveness of the software product.

Recognizing these challenges, we embarked on a mission to radically reduce our pipeline execution time, setting an ambitious goal to bring it down to 10 minutes or less. This goal wasn’t just about speed for its own sake; it was about reclaiming developer time, accelerating feedback loops, and improving overall team velocity.

Achieving this required a deep understanding of pipeline architecture, identifying hidden inefficiencies, and embracing modern best practices such as parallelization, caching, selective execution, and pipeline modularization. It also meant fostering a culture where the pipeline is continuously monitored, maintained, and improved just like application code.

This introduction explores the critical role of CI/CD pipelines in modern software development, the common pain points associated with slow and inefficient pipelines, and the transformative potential of a well-optimized, fast pipeline.

Whether you’re struggling with a legacy pipeline that has grown unwieldy or setting up a new one with scalability in mind, the lessons and principles outlined here will help you build a pipeline that doesn’t just automate delivery but truly accelerates it.

In the sections that follow, we’ll unpack the key strategies and technical tactics that enable the transformation from slow, cumbersome builds to blazing-fast 10-minute pipelines. We’ll also discuss how to measure success and ensure continuous improvement so your pipeline continues to serve your team effectively as your product and organization evolve.

Fast CI/CD is no longer a luxury; it’s a necessity for teams aiming to stay competitive and responsive in a world that demands rapid innovation. Let’s dive into how you can make your CI/CD pipeline a powerful enabler of speed and quality.

Why a 10-Minute Pipeline?

The idea isn’t arbitrary. Research from the DevOps Research and Assessment (DORA) team and books like Accelerate consistently show that high-performing teams deploy more frequently, recover faster, and spend less time waiting for builds. A CI/CD pipeline that delivers feedback within 10 minutes:

- Enables faster iteration and bug fixes

- Reduces context switching

- Improves developer happiness and flow

- Encourages frequent, smaller changes

- Strengthens confidence in automation

The Principles of Fast CI/CD

To build a high-speed pipeline, adopt these foundational principles:

1. Optimize for Fast Feedback

Not all stages need to run for every commit. Prioritize the steps that give the most value, earliest. Run unit tests and static checks up front. Defer slower, deeper validations until later in the pipeline or after merge.

2. Fail Fast, Not Last

Design pipelines to stop at the first sign of failure. There’s no reason to run integration or deployment steps if the code doesn’t even compile. Early exits save time and compute.

3. Don’t Rebuild the World

Avoid rebuilding everything from scratch. Use build caching, dependency restoration, and container layering to avoid redundant work. CI should be smart, not repetitive.
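One common way to decide whether cached dependencies can be reused is to derive the cache key from a hash of the dependency lockfile, so the cache is invalidated only when dependencies change. The sketch below assumes that approach; the function name, prefix, and lockfile contents are made up for the example.

```python
import hashlib


def cache_key(prefix, lockfile_bytes):
    """Derive a cache key that changes only when the lockfile content changes."""
    digest = hashlib.sha256(lockfile_bytes).hexdigest()[:16]
    return f"{prefix}-{digest}"


# Hypothetical lockfile contents for illustration.
key_before = cache_key("deps", b"requests==2.31.0\n")
key_same = cache_key("deps", b"requests==2.31.0\n")
key_after = cache_key("deps", b"requests==2.32.0\n")
print(key_before, key_after)
```

Identical lockfiles produce identical keys, so unchanged dependencies hit the cache, while any edit to the lockfile yields a new key and a fresh install.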

4. Parallelize Everything You Can

Modern CI systems support parallel execution. Split test suites across multiple runners or containers. Run linting, testing, packaging, and scanning in parallel where possible.
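Splitting a test suite across runners works best when the shards take roughly equal time. A minimal sketch of one approach follows: a greedy "longest test first" assignment to the currently lightest shard. The test names and durations are invented, and real CI systems may use their own timing-based splitting instead.

```python
import heapq


def shard_tests(durations, num_shards):
    """Assign tests to shards, longest first, always to the lightest shard.

    durations: dict mapping test name to its expected runtime in seconds.
    Returns a list of shards, each a list of test names.
    """
    if num_shards < 1:
        raise ValueError("num_shards must be at least 1")
    heap = [(0.0, index) for index in range(num_shards)]  # (total seconds, shard)
    shards = [[] for _ in range(num_shards)]
    ordered = sorted(durations.items(), key=lambda item: (-item[1], item[0]))
    for name, seconds in ordered:
        total, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (total + seconds, index))
    return shards


# Hypothetical timings, e.g. taken from a previous pipeline run.
timings = {"test_api": 120, "test_db": 90, "test_ui": 60, "test_utils": 30}
plan = shard_tests(timings, num_shards=2)
print(plan)
```

With these sample timings, both shards end up with the same total runtime, so the parallel stage finishes in about half the serial time.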

5. Be Selective and Context-Aware

Not every change needs every check. For example, a documentation update shouldn’t trigger a full integration test suite. Use path-based filtering, commit tags, or monorepo-aware logic to skip irrelevant steps.
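Path-based filtering can be sketched as a mapping from jobs to the file patterns that should trigger them. The job names and patterns below are hypothetical; most CI systems offer a built-in equivalent, and this only illustrates the underlying logic. Note that `fnmatch` lets `*` match across `/`, so `src/*` covers nested files too.

```python
from fnmatch import fnmatch

# Hypothetical mapping from job name to the path patterns that trigger it.
JOB_TRIGGERS = {
    "unit-tests": ["src/*", "tests/*"],
    "integration-tests": ["src/*", "deploy/*"],
    "docs-build": ["docs/*", "*.md"],
}


def jobs_to_run(changed_files, triggers=JOB_TRIGGERS):
    """Return the jobs whose patterns match at least one changed file."""
    return sorted(
        job
        for job, patterns in triggers.items()
        if any(fnmatch(path, pattern) for path in changed_files for pattern in patterns)
    )


print(jobs_to_run(["docs/guide.md"]))
print(jobs_to_run(["src/app/main.py"]))
```

A documentation-only change selects just the docs job, while a source change selects both test jobs.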

6. Keep Your Pipeline Lean

Audit your CI/CD regularly. Remove legacy stages, old integrations, and unnecessary steps. Pipelines should evolve with your product; don’t let them rot in silence.

Technical Tactics That Make a Difference

Here are some specific strategies to implement these principles:

- Use matrix builds for multi-environment testing (e.g., Python 3.8, 3.9, 3.10 in parallel)

- Split unit and integration tests into different jobs

- Run tests with coverage, not just pass/fail

- Use remote caching for Docker layers (e.g., GitHub Actions cache, BuildKit, or JFrog)

- Expire and prune unused artifacts/logs

- Avoid large Docker images; build minimal, focused containers

- Limit retention windows for logs, caches, and reports

- Automate flaky test detection and quarantining

- Define CI logic as code, using templates, reusable workflows, or Terraform/CDK pipelines

Measuring Success

You can’t improve what you don’t measure. Track metrics like:

- Average pipeline duration

- Lead time from commit to deploy

- Test suite run time

- Flaky test frequency

- Failure rates by stage

Use these metrics to identify bottlenecks and prioritize what to fix.
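As a small sketch of how such metrics might be computed, the function below summarizes a list of pipeline run records. The record fields (`duration_min`, `failed_stage`) and the sample values are assumptions; real data would come from your CI system's API or exported logs.

```python
from collections import Counter
from statistics import mean


def summarize_runs(runs):
    """Compute average duration and the share of runs that failed in each stage."""
    durations = [run["duration_min"] for run in runs]
    failures = Counter(run["failed_stage"] for run in runs if run.get("failed_stage"))
    total = len(runs)
    return {
        "avg_duration_min": mean(durations),
        "failure_rate_by_stage": {
            stage: count / total for stage, count in failures.items()
        },
    }


# Hypothetical run history for illustration.
history = [
    {"duration_min": 8, "failed_stage": None},
    {"duration_min": 12, "failed_stage": "test"},
    {"duration_min": 10, "failed_stage": None},
    {"duration_min": 10, "failed_stage": "build"},
]
summary = summarize_runs(history)
print(summary)
```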

Final Thoughts:

A fast CI/CD pipeline isn’t just a developer convenience; it’s a strategic advantage. It shortens the feedback loop, accelerates delivery, and strengthens team morale. More importantly, it shifts focus away from waiting and firefighting, and toward building better software faster.

The 10-minute pipeline is an achievable goal. It requires thoughtful design, regular maintenance, and a mindset that values speed and quality. But once in place, it transforms the way your team works.

And the best part? Every minute you shave off your pipeline is a minute you give back to your team.

Conclusion

Achieving a blazing-fast CI/CD pipeline is no small feat, but it’s an investment that pays dividends across your entire development lifecycle. By focusing on principles like fast feedback, smart caching, parallelization, and selective testing, you can dramatically reduce build times without sacrificing quality or security.

A 10-minute pipeline doesn’t just speed up your deployments; it revitalizes your team’s productivity, morale, and confidence in automation. Remember, building a fast pipeline is an ongoing journey, requiring continuous monitoring, tuning, and collaboration.

As your codebase and teams grow, your pipeline must evolve alongside them. When done right, a fast CI/CD pipeline becomes a competitive advantage, enabling you to innovate rapidly, deliver reliably, and delight your users consistently. Start small, measure rigorously, and iterate relentlessly, and soon you’ll transform your CI/CD process from a bottleneck into your most powerful development ally.

NAT Gateway vs NAT Instance: What’s the Difference?

What is NAT?

Network Address Translation (NAT) is a method used in networking that allows multiple devices on a private network to access external networks, such as the internet, using a single public IP address.

NAT works by modifying the IP address information in the headers of packets while they are in transit across a routing device, such as a firewall or router. This allows private IP addresses, which are not routable on the public internet, to appear as if they originate from a valid public IP address.

Typically, NAT is implemented on routers or gateways that connect internal (private) networks to external (public) networks. It’s most commonly used in home networks, enterprise environments, and cloud architectures to preserve the limited pool of IPv4 addresses and to improve security.

There are several types of NAT, including Static NAT, Dynamic NAT, and Port Address Translation (PAT), also known as NAT Overload.

In Static NAT, one private IP is mapped to one public IP. This is straightforward but uses more public IPs. Dynamic NAT assigns a public IP from a pool to a private IP when needed, offering flexibility.

PAT, the most common form of NAT, allows multiple devices to share a single public IP by assigning a unique port number to each session. This is what most home routers use.
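The port-mapping idea behind PAT can be illustrated with a toy translation table. This is a deliberately simplified model: the class name, starting port, and addresses are made up, and a real NAT device also tracks protocol and destination and expires idle entries.

```python
class PortAddressTranslator:
    """Toy PAT table: many private endpoints share one public IP."""

    def __init__(self, public_ip, first_port=1024):
        self.public_ip = public_ip
        self.next_port = first_port
        self.outbound = {}  # (private_ip, private_port) -> public_port
        self.inbound = {}   # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip, private_port):
        """Map a private endpoint to (public_ip, public_port), reusing entries."""
        key = (private_ip, private_port)
        if key not in self.outbound:
            self.outbound[key] = self.next_port
            self.inbound[self.next_port] = key
            self.next_port += 1
        return (self.public_ip, self.outbound[key])

    def translate_inbound(self, public_port):
        """Return the private endpoint for a reply, or None if no session exists."""
        return self.inbound.get(public_port)


# Two hypothetical hosts using the same private source port.
pat = PortAddressTranslator("198.51.100.7")
first = pat.translate_outbound("10.0.0.5", 40000)
second = pat.translate_outbound("10.0.0.6", 40000)
print(first, second, pat.translate_inbound(first[1]))
```

The `None` result for an unknown port mirrors the point that external systems cannot reach an internal host unless a mapping already exists.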

NAT also acts as a basic security mechanism, hiding internal IP addresses from the external world. External systems can’t initiate direct connections to internal hosts unless port forwarding is configured. In cloud computing, NAT is widely used to allow virtual machines or instances in private subnets to access the internet without exposing them to inbound traffic.

For example, in AWS, a NAT Gateway or NAT Instance is used to provide internet access to instances in private subnets. Without NAT, these resources could not fetch updates, download packages, or reach external APIs unless they had a public IP, which defeats the purpose of a private subnet. NAT enables controlled, outbound-only connectivity.

NAT becomes especially important in IPv4-based networks due to IP address exhaustion. While IPv6 offers a much larger address space and can reduce reliance on NAT, widespread IPv4 usage continues to make NAT a crucial part of most network designs. Whether in a home setup or a multi-tier cloud architecture, NAT remains essential for enabling private-to-public communication while maintaining network security and efficiency.

What is a NAT Instance?

A NAT Instance is an Amazon EC2 (Elastic Compute Cloud) virtual machine that acts as a bridge between private subnets and the internet in an AWS Virtual Private Cloud (VPC). It enables instances in private subnets, which do not have public IP addresses, to initiate outbound connections to the internet or other AWS services while preventing inbound connections initiated from the internet.

This is useful for downloading software updates, accessing APIs, or sending data out without exposing internal resources.

To set up a NAT Instance, you launch an EC2 instance in a public subnet, assign it a public IP address, and configure its operating system to forward and translate IP traffic. You also disable source/destination checks so the instance can route traffic that is not addressed to itself. Finally, the route tables of the private subnets must send internet-bound traffic (0.0.0.0/0) to the NAT Instance.
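The two AWS-side steps of this setup, disabling the source/destination check and adding a default route, could be sketched as follows. The function expects a boto3 EC2 client and uses its `modify_instance_attribute` and `create_route` methods; the function name, the resource IDs, and the `RecordingClient` stand-in (used here so the example runs without AWS access) are assumptions for illustration. Enabling IP forwarding inside the instance's operating system is a separate step not shown.

```python
def configure_nat_instance(ec2, instance_id, private_route_table_id):
    """Apply the AWS-side NAT Instance settings using an EC2 client."""
    # Let the instance forward traffic that is not addressed to itself.
    ec2.modify_instance_attribute(
        InstanceId=instance_id,
        SourceDestCheck={"Value": False},
    )
    # Send internet-bound traffic from the private subnet to the instance.
    ec2.create_route(
        RouteTableId=private_route_table_id,
        DestinationCidrBlock="0.0.0.0/0",
        InstanceId=instance_id,
    )


class RecordingClient:
    """Stand-in that records calls instead of contacting AWS (dry run)."""

    def __init__(self):
        self.calls = []

    def __getattr__(self, name):
        def record(**kwargs):
            self.calls.append((name, kwargs))
            return {}
        return record


# Hypothetical resource IDs; a real run would pass boto3.client("ec2") instead.
dry_run = RecordingClient()
configure_nat_instance(dry_run, "i-0123456789abcdef0", "rtb-0123456789abcdef0")
for call_name, arguments in dry_run.calls:
    print(call_name, arguments)
```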

One key advantage of a NAT Instance is customization: you have full control over the instance, including the ability to install monitoring tools, configure advanced firewall rules, and tweak system settings. However, it requires manual maintenance, including software updates, security patches, and scalability planning.

Performance depends on the selected EC2 instance type, which means high-traffic applications might require resizing or load balancing.

It is also a single point of failure unless you set up high availability using instance recovery, auto scaling groups, or multiple NAT Instances across Availability Zones. While it can be cost-effective for small workloads, managing NAT Instances at scale can become complex and operationally heavy.

Pros of NAT Instance

- Full control over software and configuration.

- Can install additional monitoring or security tools.

- Potentially lower cost for very low traffic scenarios.

Cons of NAT Instance

- Manual setup and maintenance overhead.

- Single point of failure unless you architect redundancy yourself.

- Scaling requires manual instance resizing or adding more instances.

What is a NAT Gateway?

A NAT Gateway is a fully managed network service provided by AWS that allows instances in a private subnet to connect to the internet or other AWS services, without allowing inbound traffic from the internet.

It performs the function of Network Address Translation (NAT), enabling private IP addresses to communicate externally while remaining isolated from unsolicited incoming connections.

Unlike NAT Instances, NAT Gateways are highly available within an Availability Zone and scale automatically with bandwidth demand. Older AWS documentation cited a ceiling of 45 Gbps per gateway; more recent documentation cites 100 Gbps, so check the current limits for your region.

This makes them suitable for production workloads and applications requiring reliable and high-throughput internet access from private environments.

Setting up a NAT Gateway is simple: you create it in a public subnet, associate it with an Elastic IP address, and update the route table of your private subnets to send internet-bound traffic through it. AWS handles all patching, updates, and availability behind the scenes, reducing operational overhead.

NAT Gateways are preferred for their ease of use, scalability, and reliability, but they come at a higher cost than NAT Instances, especially in low-traffic scenarios. They are also less customizable, offering minimal configuration compared to a self-managed EC2 NAT Instance.

In secure VPC designs, NAT Gateways allow instances to fetch updates, connect to external APIs, and interact with services like Amazon S3 or DynamoDB endpoints, all while keeping them off the public internet. For multi-AZ high availability, you must deploy a NAT Gateway in each Availability Zone and configure route tables accordingly.
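The per-AZ routing rule can be sketched as a small planning function that pairs each private subnet with the NAT Gateway in its own Availability Zone and reports subnets left without one. The function name and all resource IDs below are hypothetical, and no AWS calls are made.

```python
def plan_nat_routes(private_subnets, nat_gateways):
    """Pair each private subnet with the NAT Gateway in the same AZ.

    private_subnets: dict mapping subnet ID to its Availability Zone.
    nat_gateways: dict mapping Availability Zone to a NAT Gateway ID.
    Returns (routes, uncovered), where uncovered lists subnets whose AZ
    has no gateway.
    """
    routes = {}
    uncovered = []
    for subnet_id, zone in sorted(private_subnets.items()):
        if zone in nat_gateways:
            routes[subnet_id] = nat_gateways[zone]
        else:
            uncovered.append(subnet_id)
    return routes, uncovered


# Hypothetical IDs for illustration.
subnets = {
    "subnet-aaa": "us-east-1a",
    "subnet-bbb": "us-east-1b",
    "subnet-ccc": "us-east-1c",
}
gateways = {"us-east-1a": "nat-111", "us-east-1b": "nat-222"}
routes, uncovered = plan_nat_routes(subnets, gateways)
print(routes, uncovered)
```

Any subnet in the uncovered list would either lose internet access during an outage in another zone or pay cross-AZ data charges if routed to a remote gateway.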

Pros of NAT Gateway

- Fully managed: no patching or maintenance required.

- Highly available within an Availability Zone by default.

- Scales automatically with traffic demands.

- Simple to set up and operate.

Cons of NAT Gateway

- Slightly higher cost compared to small NAT Instances for low traffic.

- Less customizable (no ability to install custom software).

- Scoped to a single Availability Zone: multi-AZ redundancy requires deploying a gateway in each zone.

Key Differences Summary

| Feature | NAT Instance | NAT Gateway |
|---|---|---|
| Management | User-managed EC2 instance | Fully managed AWS service |
| Scalability | Manual scaling | Automatic scaling |
| Availability | Single point of failure unless architected otherwise | Highly available in one AZ, multiple gateways for multi-AZ |
| Cost | Potentially cheaper at low traffic | Slightly more expensive |
| Performance | Limited by instance specs | High bandwidth, auto scaling |
| Customization | Fully customizable | Minimal customization options |

When to Use NAT Instance vs NAT Gateway?

Use NAT Instance if:

- You need granular control over the NAT device.

- You want to run custom software or monitoring on the NAT.

- Your traffic levels are low, and cost sensitivity is high.

- You have the expertise and resources to manage instances reliably.

Use NAT Gateway if:

- You want a hassle-free, scalable, and highly available NAT solution.

- Your workloads generate medium to high network traffic.

- You prefer a fully managed service to reduce operational overhead.

- You want simple setup and integration with AWS services.

Conclusion

While both NAT Gateways and NAT Instances enable private subnets to access the internet, they serve different operational needs.

NAT Gateways simplify network architecture by offloading management and scaling to AWS, making them ideal for production workloads and growing traffic. NAT Instances offer more control and flexibility but require manual maintenance and scaling.

For most modern AWS architectures, NAT Gateway is the preferred choice unless you have specific customization or cost constraints.

VPC Peering vs VPN vs Transit Gateway: Which One Should You Use?

Introduction

In the rapidly evolving world of cloud computing, the way we connect and manage networks plays a crucial role in ensuring seamless communication, security, and scalability of applications. Amazon Web Services (AWS), being a dominant cloud provider, offers multiple ways to connect Virtual Private Clouds (VPCs) with each other and with on-premises networks.

Among the most commonly used solutions are VPC Peering, Virtual Private Network (VPN), and Transit Gateway. Each of these options addresses different networking needs and challenges, providing cloud architects with the flexibility to design infrastructure that best fits their organizational requirements.

Understanding the differences between these connection types is essential because the choice you make can have significant impacts on network performance, security posture, operational complexity, and cost.

For instance, some applications demand ultra-low latency communication between VPCs, while others might prioritize secure encrypted connections over the public internet to link corporate data centers to the cloud. Moreover, as cloud environments grow, network architecture complexity increases, which may require more sophisticated solutions for managing routing and connectivity at scale.

VPC Peering is one of the simplest ways to connect two VPCs directly within AWS. It allows instances in different VPCs to communicate using private IP addresses as if they were on the same network. This method leverages AWS’s high-speed backbone infrastructure, providing low latency and high bandwidth.

However, VPC Peering is limited in scale because it is a point-to-point connection: each pair of VPCs requires its own peering link, and it does not support transitive routing between multiple VPCs.

On the other hand, VPN connections offer encrypted tunnels over the internet, allowing secure communication between on-premises environments and AWS, or even between geographically dispersed VPCs. VPNs are an excellent choice for hybrid cloud architectures where legacy data centers need to connect to cloud resources without exposing traffic publicly.

However, VPNs depend on the internet, which can introduce latency and bandwidth limitations. Their setup and management are also more complex compared to VPC Peering, requiring ongoing monitoring to maintain security and performance.

For organizations with growing cloud environments involving many VPCs and hybrid networks, AWS Transit Gateway provides a powerful, scalable solution. It acts as a central hub that connects multiple VPCs and on-premises networks, simplifying routing and enabling transitive connectivity.

Transit Gateway can handle large volumes of traffic with higher throughput capabilities and centralized management, which helps reduce operational overhead. While it introduces additional costs and complexity, Transit Gateway is often the best choice for enterprises looking to streamline complex network architectures.

In this article, we will dive deeper into these three AWS networking solutions: VPC Peering, VPN, and Transit Gateway. We will compare their features, advantages, and limitations to help you understand which option is best suited for your specific use cases.

Whether you’re designing a simple network with a handful of VPCs or architecting a large-scale multi-region infrastructure, making an informed choice will help optimize your cloud networking strategy in terms of cost, performance, and security.

By the end of this guide, you’ll have a clear understanding of the scenarios where each solution shines, enabling you to design networks that are robust, scalable, and aligned with your business goals. So, let’s get started by exploring what VPC Peering is and when it makes sense to use it.

What is VPC Peering?

VPC Peering is a networking connection between two Virtual Private Clouds (VPCs) that enables you to route traffic using private IP addresses, as if the resources in these VPCs were on the same network. It is one of the simplest and most efficient ways to enable communication between different VPCs within the AWS ecosystem.

When you create a VPC Peering connection, AWS sets up a private, point-to-point network link over its global backbone infrastructure, which ensures low latency and high bandwidth data transfer between the connected VPCs.

One of the key advantages of VPC Peering is that it allows seamless communication without requiring internet gateways, VPNs, or transit through third-party devices. This means the data traffic remains within the AWS network and does not traverse the public internet, enhancing both security and performance.

Applications running in peered VPCs can communicate using their private IP addresses, which simplifies network configuration and eliminates the need for complex NAT (Network Address Translation) setups.

VPC Peering works across VPCs in the same AWS region or even across different regions, which is called inter-region VPC Peering. Inter-region peering is especially useful for global architectures that require data replication, backup, or failover scenarios between geographically dispersed environments.

However, it’s important to note that peering connections are non-transitive, meaning if VPC A is peered with VPC B, and VPC B is peered with VPC C, VPC A cannot automatically communicate with VPC C through VPC B. This limitation requires you to establish individual peering connections between each pair of VPCs you want to connect.
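The non-transitive rule can be illustrated with a small model. This is only a sketch: the VPC names and the `can_route` helper are hypothetical, and it captures just the rule that traffic flows only between VPCs that share a direct peering connection.

```python
# Each peering connection is an unordered pair of VPCs (hypothetical names).
peerings = {
    frozenset(("vpc-a", "vpc-b")),
    frozenset(("vpc-b", "vpc-c")),
}

def can_route(src, dst):
    """True only when src and dst share a direct peering connection."""
    return frozenset((src, dst)) in peerings

print(can_route("vpc-a", "vpc-b"))  # direct peering exists
print(can_route("vpc-a", "vpc-c"))  # no direct peering, so no path via vpc-b
```

To let `vpc-a` reach `vpc-c` in this model, a third pair would have to be added to `peerings`, which mirrors creating a third peering connection.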

Setting up VPC Peering is straightforward: you request a peering connection from one VPC to another, and the owner of the other VPC must accept the request.

Once established, you update route tables in both VPCs to enable routing of traffic through the peering connection. Security groups and network ACLs can be configured to control the traffic flow between the VPCs, giving you granular control over which resources can communicate.
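The route table step can be sketched as follows. This is an illustrative model rather than an AWS API call: the dictionaries, the `peering_routes` helper, and the identifiers such as `rtb-dev` and `pcx-example` are all hypothetical. It also checks one constraint not spelled out above: the two VPCs must not have overlapping CIDR blocks, because private-IP routing between them would otherwise be ambiguous.

```python
import ipaddress

def peering_routes(vpc_a, vpc_b, pcx_id):
    """Return the route each side needs so traffic reaches the peer VPC."""
    net_a = ipaddress.ip_network(vpc_a["cidr"])
    net_b = ipaddress.ip_network(vpc_b["cidr"])
    # Overlapping ranges cannot be peered, so reject them early.
    if net_a.overlaps(net_b):
        raise ValueError(f"CIDR blocks overlap: {net_a} and {net_b}")
    return [
        # In A's route table: send traffic for B's range to the peering connection.
        {"route_table": vpc_a["route_table"], "destination": str(net_b), "target": pcx_id},
        # In B's route table: send traffic for A's range to the peering connection.
        {"route_table": vpc_b["route_table"], "destination": str(net_a), "target": pcx_id},
    ]

# Hypothetical values for illustration only.
dev = {"cidr": "10.0.0.0/16", "route_table": "rtb-dev"}
prod = {"cidr": "10.1.0.0/16", "route_table": "rtb-prod"}
for route in peering_routes(dev, prod, "pcx-example"):
    print(route)
```

Both routes are required: with only one of them in place, packets can reach the peer VPC but the replies have no path back.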

VPC Peering is highly suitable for small to medium-sized architectures where only a few VPCs need to be connected.

It is commonly used for development and production environment segregation, resource sharing between different business units or accounts, and simple multi-tier application architectures. Because it leverages AWS’s private network, VPC Peering offers lower latency and higher throughput than VPN connections.

However, as your cloud environment scales up with many VPCs, managing numerous peering connections can become complex and cumbersome.

Since there is no built-in transitive routing, a full mesh of n VPCs requires n(n-1)/2 peering connections, so the count grows quadratically with the number of VPCs (10 VPCs already need 45 connections), complicating network management and routing configurations.
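To see how quickly a full mesh grows, the short sketch below enumerates every pair of VPCs that would need its own peering connection. The VPC names and the `full_mesh_peerings` helper are made up for the example.

```python
from itertools import combinations

def full_mesh_peerings(vpcs):
    """List every pair of VPCs that needs its own peering connection."""
    return list(combinations(vpcs, 2))

for n in (3, 5, 10, 50):
    names = [f"vpc-{i}" for i in range(n)]
    print(n, "VPCs ->", len(full_mesh_peerings(names)), "peering connections")
```

The pair count equals n(n-1)/2, and every pair also needs route table entries on both sides.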

In summary, VPC Peering provides a direct, secure, and efficient method for connecting two VPCs, making it an excellent choice for straightforward, low-latency communication needs within or across AWS regions.

Its simplicity and performance benefits make it a foundational building block for many cloud network designs, especially when used within its scaling limitations.

What is VPN?

A Virtual Private Network (VPN) is a technology that creates a secure, encrypted connection over a public network, such as the internet, allowing data to travel safely between two endpoints.

In the context of AWS, VPNs are commonly used to connect on-premises data centers or remote offices to AWS Virtual Private Clouds (VPCs), or to enable secure communication between geographically separated VPCs. This encrypted “tunnel” ensures that sensitive information remains confidential and protected from eavesdropping or tampering during transmission.

AWS offers several VPN solutions, including the AWS Site-to-Site VPN, which connects your on-premises network to AWS over an IPsec (Internet Protocol Security) connection, and AWS Client VPN, which provides secure remote access for individual users.

VPN connections are relatively straightforward to set up and provide an effective way to extend your existing network into the cloud or allow remote users to securely access resources.

Unlike VPC Peering, VPN traffic travels over the public internet, which can introduce higher latency and potential bandwidth limitations.

Additionally, because the connection depends on the stability of the internet, network performance may vary, and VPNs typically don’t provide the same throughput or low-latency benefits that private AWS infrastructure offers. Each AWS Site-to-Site VPN tunnel, for example, supports up to 1.25 Gbps.

VPNs are particularly valuable in hybrid cloud scenarios, where organizations want to maintain a secure bridge between their traditional data centers and cloud environments.

They also support compliance and security requirements by encrypting data in transit. However, VPNs require ongoing management, such as monitoring connection health, renewing certificates, and sometimes troubleshooting latency or dropped connections.

VPNs are a versatile and secure way to connect distributed networks and users to AWS, especially when direct private connections are not feasible or cost-effective. They offer strong encryption and flexibility but may come with trade-offs in latency and bandwidth compared to other AWS networking options.

What is Transit Gateway?

AWS Transit Gateway is a fully managed service designed to simplify and scale network connectivity between multiple Virtual Private Clouds (VPCs), on-premises data centers, and remote networks.

Acting as a central hub, the Transit Gateway enables you to connect thousands of VPCs and VPNs through a single gateway, reducing the complexity of managing numerous individual connections like VPC peering or multiple VPN tunnels.

With Transit Gateway, you no longer need to create and maintain separate peering connections between every pair of VPCs. Instead, each VPC or network connects to the Transit Gateway, which manages the routing of traffic between all connected networks.

This hub-and-spoke architecture supports transitive routing, allowing communication between connected networks without the need for multiple peering links.

The service is highly scalable, capable of handling large volumes of traffic with high bandwidth and low latency, making it ideal for enterprises with complex cloud environments or hybrid architectures.

It also integrates seamlessly with AWS Direct Connect for dedicated, private connectivity to on-premises locations.

Transit Gateway simplifies network management by providing centralized control over routing, security policies, and monitoring. It supports route tables, enabling granular traffic segmentation and control between connected networks.
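The way route tables provide segmentation can be sketched with a simplified lookup. This is a model, not the Transit Gateway API: the attachment names and the `lookup` helper are hypothetical, and the only behavior it reproduces is that the most specific matching route wins.

```python
import ipaddress

def lookup(route_table, destination_ip):
    """Return the attachment for the most specific matching route, or None."""
    ip = ipaddress.ip_address(destination_ip)
    matches = [
        (ipaddress.ip_network(cidr), attachment)
        for cidr, attachment in route_table.items()
        if ip in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    # Longest prefix (largest prefixlen) is the most specific route.
    return max(matches, key=lambda m: m[0].prefixlen)[1]

# A shared table reaches both VPCs and sends everything else on-premises.
shared_table = {
    "10.0.0.0/16": "attach-vpc-a",
    "10.1.0.0/16": "attach-vpc-b",
    "0.0.0.0/0": "attach-vpn-onprem",
}
# An isolated table only knows about VPC A, so traffic to VPC B has no route.
isolated_table = {"10.0.0.0/16": "attach-vpc-a"}

print(lookup(shared_table, "10.1.4.20"))
print(lookup(shared_table, "192.168.1.10"))
print(lookup(isolated_table, "10.1.4.20"))
```

Associating different attachments with different route tables is what lets one hub carry traffic for networks that should not reach each other.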

Additionally, AWS offers features like multicast support and inter-region peering with Transit Gateway, further enhancing its flexibility for global architectures.

While Transit Gateway incurs additional costs compared to VPC Peering or VPN, its benefits in scalability, simplified management, and transitive connectivity often outweigh the expenses for large or growing environments.

AWS Transit Gateway is the go-to solution for organizations needing scalable, efficient, and easy-to-manage connectivity across multiple VPCs and hybrid networks, especially when the network architecture grows beyond simple point-to-point connections.

Feature Comparison Table

| Feature | VPC Peering | VPN | Transit Gateway |
|---|---|---|---|
| Connectivity Type | Private IP routing | Encrypted over internet | Central hub for multiple VPCs |
| Scale | Point-to-point (limited) | Point-to-point | Multi-VPC and on-premises |
| Transitive Routing | No | No | Yes |
| Latency | Low (AWS backbone) | Higher (internet-based) | Low to medium |
| Bandwidth | No aggregate limit (bounded by instance bandwidth) | Up to 1.25 Gbps per tunnel | High, scalable |
| Management Complexity | Low | Medium | Medium to High |
| Cost | Low | Medium | Higher |
| Use Case Example | Connect two VPCs | Connect on-premises to AWS | Connect multiple VPCs + DCs |

When to Use VPC Peering

- You have a small number of VPCs that need to communicate directly.

- You want low-latency, high-throughput connectivity.

- You prefer simple setup without additional infrastructure.

- No need for transitive routing.

When to Use VPN

- You need secure communication between on-premises data centers and AWS.

- Remote user access is required.

- You can tolerate some latency due to internet-based transport.

- You want a cost-effective way to connect external networks to AWS.

When to Use Transit Gateway

- Your architecture involves many VPCs (dozens or more).

- You want centralized routing and simplified network management.

- You require transitive routing between VPCs and on-premises networks.

- Your workloads need scalable and high-throughput connectivity.

Conclusion

Choosing the right AWS networking solution depends largely on your environment’s scale and complexity:

- Use VPC Peering for straightforward, direct, high-speed connections between a few VPCs.

- Use VPN when connecting remote or on-premises networks securely over the internet.

- Use Transit Gateway for large-scale, multi-VPC architectures that require centralized management and transitive routing.

Understanding the strengths and limitations of each can help you design a robust, secure, and cost-effective cloud network architecture.
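As a rough illustration, the guidance above can be written as a small helper. The function name, its parameters, and the `many_vpcs` cutoff of 24 are assumptions made for this sketch; a real decision should also weigh cost, bandwidth, and operational constraints.

```python
def recommend(num_vpcs, needs_transitive=False, connects_on_premises=False,
              many_vpcs=24):
    """Return suggested connectivity options; `many_vpcs` is an arbitrary cutoff."""
    options = []
    if needs_transitive or num_vpcs >= many_vpcs:
        # Many VPCs or transitive routing point to a central hub.
        options.append("Transit Gateway")
    elif num_vpcs >= 2:
        # A handful of VPCs with direct traffic fits point-to-point peering.
        options.append("VPC Peering")
    if connects_on_premises:
        # On-premises or remote networks connect over an encrypted tunnel.
        options.append("VPN")
    return options

print(recommend(num_vpcs=3))
print(recommend(num_vpcs=40, connects_on_premises=True))
print(recommend(num_vpcs=1, connects_on_premises=True))
```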

Automating Deployments to On-Premises Servers with CodeDeploy Agent.

Introduction.

Automating deployments to on-premises servers has become a crucial step in achieving consistent, reliable, and scalable software delivery in hybrid infrastructure environments. AWS CodeDeploy, a fully managed deployment service by Amazon Web Services, extends its capabilities beyond the cloud to support deployments directly to on-premises servers.

By installing the CodeDeploy agent on physical or virtual servers in your private data center, you can integrate these systems into your CI/CD pipeline, ensuring uniform software delivery practices across your entire fleet. This approach reduces manual errors, accelerates release cycles, and allows centralized control of application updates.

The CodeDeploy agent communicates securely with AWS services over HTTPS, ensuring that deployment instructions are received and executed properly. To utilize this functionality, servers must be registered with CodeDeploy and associated with appropriate IAM permissions and tag-based groupings.

Once set up, you can create deployment applications and groups targeting specific machines using custom tags. Deployment packages, often stored in Amazon S3 or GitHub, contain application code and an appspec.yml file defining the deployment lifecycle, including pre- and post-deployment hooks. These scripts handle tasks such as stopping services, backing up files, or starting applications after installation.
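To make the structure of an appspec.yml file concrete, the sketch below renders a minimal one for a Linux server. The `render_appspec` helper, the script paths, the destination directory, and the 300-second timeout are hypothetical; the hook names listed are a commonly used subset of the lifecycle events that accept scripts in server deployments.

```python
# Subset of lifecycle events that can run scripts, listed in execution order.
HOOK_ORDER = [
    "ApplicationStop",
    "BeforeInstall",
    "AfterInstall",
    "ApplicationStart",
    "ValidateService",
]

def render_appspec(destination, hooks, os_name="linux"):
    """Render a minimal appspec.yml as text; `hooks` maps event name to script path."""
    unknown = set(hooks) - set(HOOK_ORDER)
    if unknown:
        raise ValueError(f"unsupported hooks: {sorted(unknown)}")
    lines = [
        "version: 0.0",
        f"os: {os_name}",
        "files:",
        "  - source: /",
        f"    destination: {destination}",
        "hooks:",
    ]
    # Emit hooks in lifecycle order regardless of the order they were passed in.
    for event in HOOK_ORDER:
        if event in hooks:
            lines.append(f"  {event}:")
            lines.append(f"    - location: {hooks[event]}")
            lines.append("      timeout: 300")
    return "\n".join(lines) + "\n"

# Hypothetical script paths, relative to the root of the deployment bundle.
print(render_appspec("/opt/myapp", {
    "ApplicationStart": "scripts/start.sh",
    "ApplicationStop": "scripts/stop.sh",
    "BeforeInstall": "scripts/backup.sh",
}))
```

The rendered file belongs at the root of the deployment package, next to the scripts it references.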

CodeDeploy supports various deployment configurations, such as “CodeDeployDefault.AllAtOnce” or “CodeDeployDefault.OneAtATime,” allowing fine-grained control over rollout strategy. With this setup, organizations can unify their deployment processes regardless of the server’s location, bringing cloud-like automation to on-prem systems. It also allows for easy rollback in case of failures, enhancing reliability and uptime.
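The difference between the two rollout strategies mentioned above can be modeled as batches of servers. This is a deliberately simplified sketch: the `plan_batches` helper and the server names are hypothetical, and the real service also tracks instance health, which is not modeled here.

```python
def plan_batches(instances, config):
    """Split instances into rollout batches for a simplified deployment config."""
    if config == "AllAtOnce":
        size = len(instances)
    elif config == "OneAtATime":
        size = 1
    else:
        raise ValueError(f"unknown config: {config}")
    size = max(size, 1)  # avoid a zero step when the instance list is empty
    return [instances[i:i + size] for i in range(0, len(instances), size)]

servers = ["onprem-web-1", "onprem-web-2", "onprem-web-3"]  # hypothetical names
print(plan_batches(servers, "AllAtOnce"))
print(plan_batches(servers, "OneAtATime"))
```

Rolling out one server at a time takes longer but limits the impact of a bad release to a single machine before the deployment stops.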

Logging and status monitoring features help in auditing deployments and debugging issues efficiently. Overall, CodeDeploy’s support for on-premises instances provides a bridge between modern DevOps workflows and traditional infrastructure, allowing businesses to modernize at their own pace. Whether you’re maintaining legacy applications or modernizing existing infrastructure, CodeDeploy brings automation, repeatability, and consistency to your deployment pipeline.

It requires minimal changes to your existing systems and can be tailored to a wide range of operating systems and environments. The setup involves installing the CodeDeploy agent, registering the instance with AWS, configuring IAM roles, and defining deployment settings.

Once configured, deployments can be triggered via AWS CLI, SDKs, or CI/CD platforms like Jenkins or GitHub Actions. This strategy not only streamlines operations but also helps teams focus more on development and innovation, rather than spending time on manual deployments.

CodeDeploy enables true hybrid deployment capabilities, offering the benefits of cloud deployment automation for both cloud-based and on-premises infrastructure. This results in faster releases, reduced risk, and improved software quality across the organization.

1. Prerequisites

- An AWS account

- On-premises Linux or Windows servers

- Permission to install software, plus outbound HTTPS (port 443) access for communicating with AWS

- An IAM user or role to manage CodeDeploy

- AWS CLI installed and configured

2. Install CodeDeploy Agent on On-Premises Server

On Amazon Linux / RHEL / CentOS:

sudo yum update

sudo yum install ruby wget

cd ~

wget https://aws-codedeploy-us-east-1.s3.us-east-1.amazonaws.com/latest/install

chmod +x ./install

sudo ./install auto