Introduction.

In today’s data-driven world, the demand for faster, more efficient computing is higher than ever. Whether you’re processing massive datasets, training machine learning models, running simulations, or conducting scientific research, the ability to perform computations in parallel is a game changer. Traditionally, setting up high-performance or parallel computing environments required specialized hardware, deep system knowledge, and a hefty budget. But thanks to cloud services like AWS, these capabilities are now more accessible than ever—even to solo developers, researchers, and small startups. AWS offers a variety of services that allow you to create scalable, reliable, and cost-efficient parallel computing systems without managing physical infrastructure. From AWS ParallelCluster, which simplifies the deployment of high-performance computing (HPC) clusters, to AWS Batch, which enables large-scale job scheduling and container-based execution, the possibilities are both vast and flexible. You can even take advantage of serverless options like AWS Lambda and Step Functions to orchestrate lightweight parallel tasks at scale.

This guide walks you through the process of building a parallel computing service using AWS’s powerful ecosystem. We’ll cover the core concepts of parallel computing in the cloud, help you choose the right AWS service for your use case, and show you step-by-step how to set up a parallel workload—from configuring your compute environment to running your first jobs. Whether you’re a data scientist analyzing genomes, a researcher simulating physics models, or a developer running large-scale image processing tasks, this guide is designed to make the journey easier. We’ll also touch on best practices, performance tuning, cost optimization, and security considerations to ensure your system runs efficiently and effectively. No matter your level of experience, you’ll find actionable insights to help you leverage AWS for parallel computing in a way that matches your needs and goals.

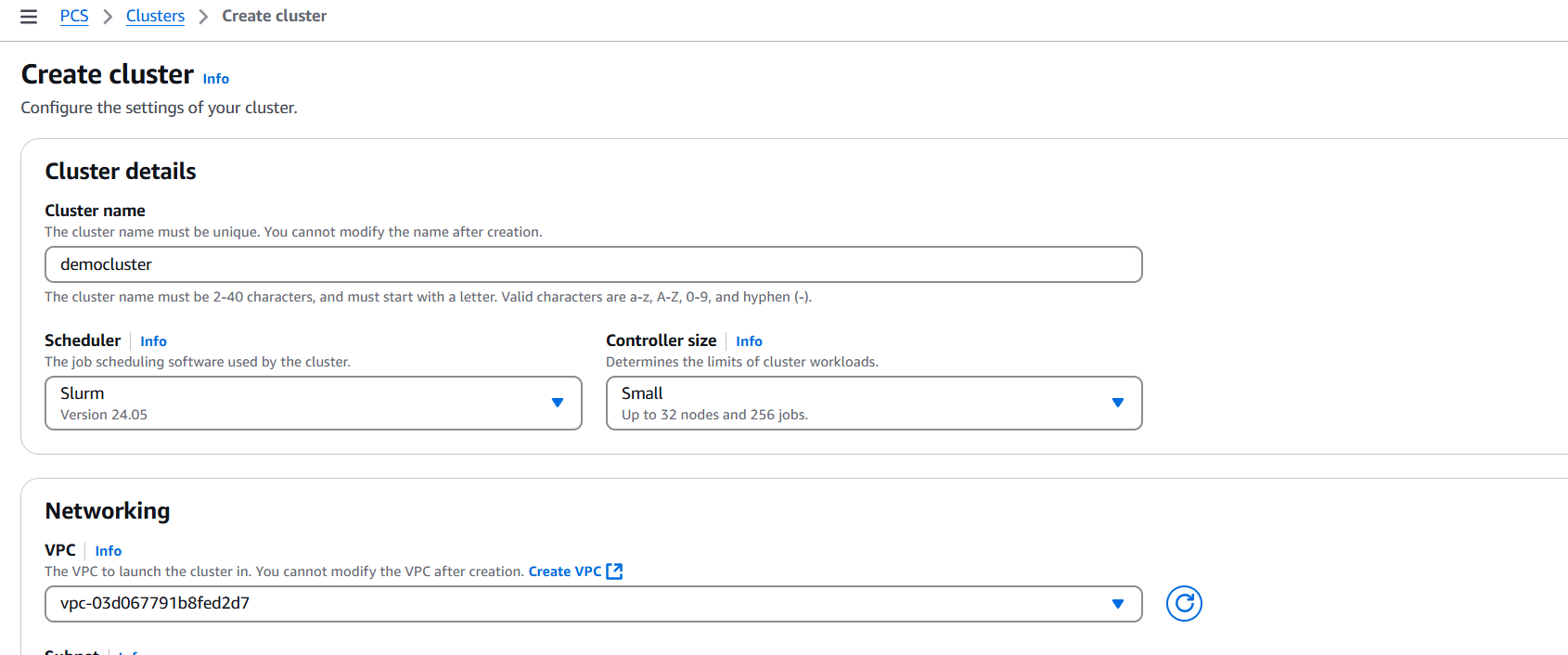

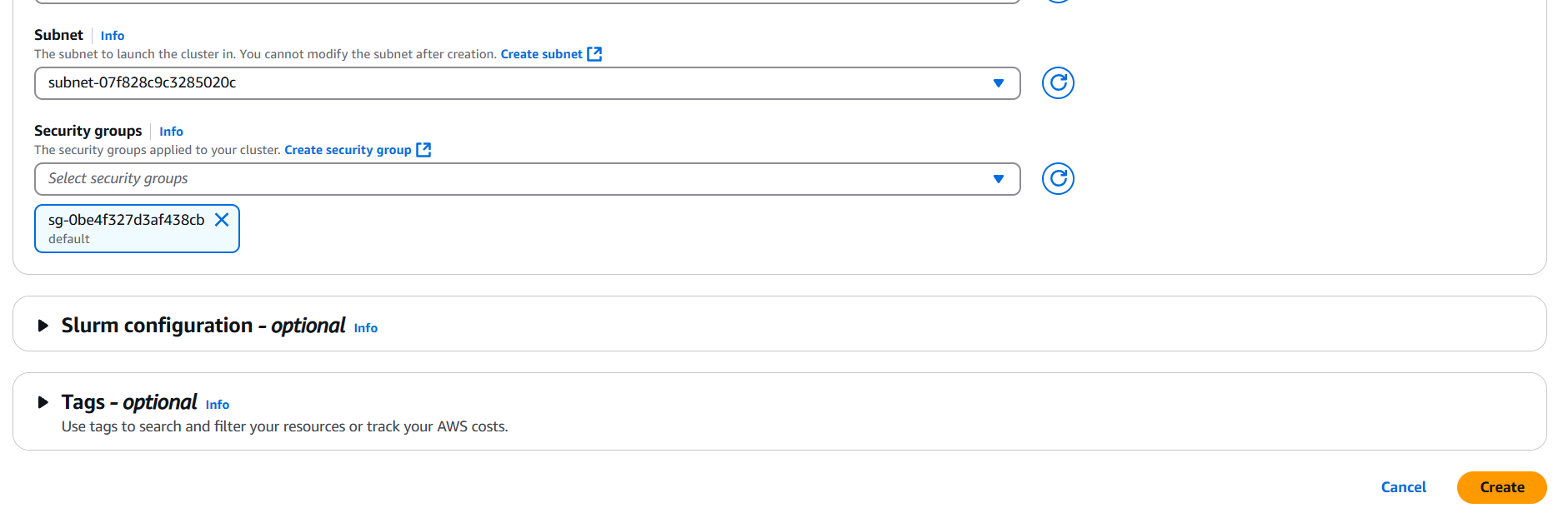

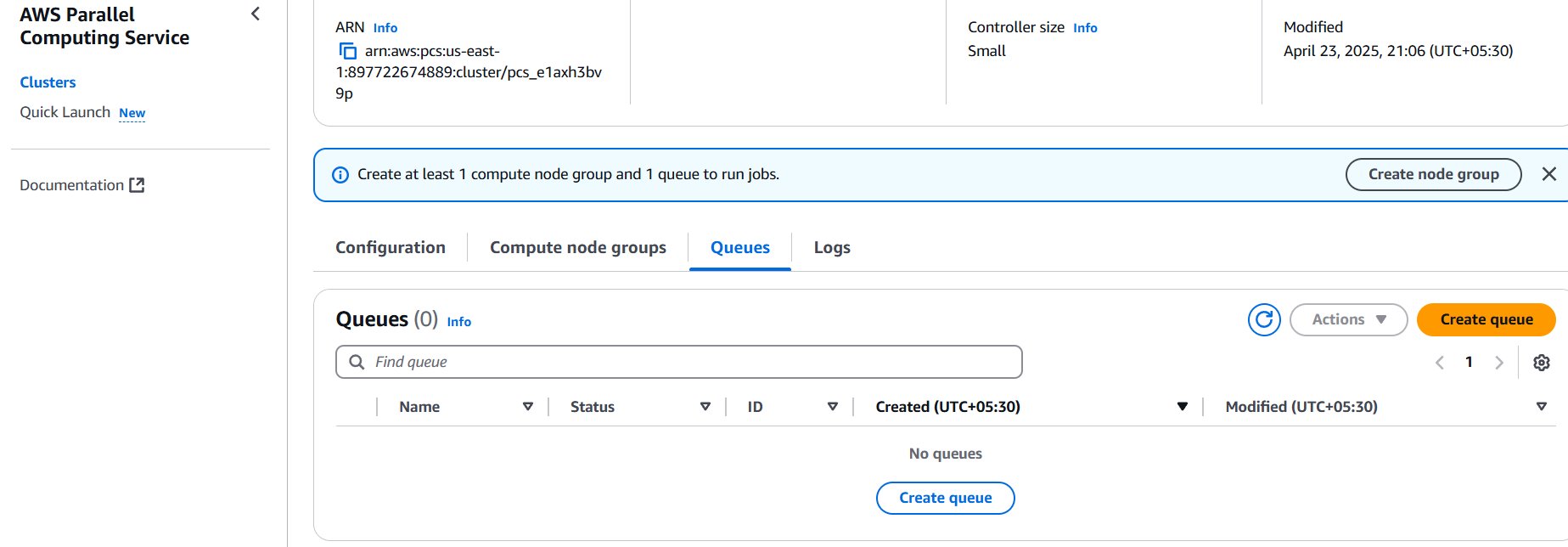

Option 1: AWS ParallelCluster (for HPC)

AWS ParallelCluster is best for HPC (High Performance Computing) applications where MPI (Message Passing Interface) or tightly coupled computing is required.

Steps:

- Install AWS ParallelCluster CLI:

      pip install aws-parallelcluster

- Configure AWS CLI:

      aws configure

- Create a configuration file (e.g., pcluster-config.yaml) with compute environment settings:

      Region: us-west-2
      Image:
        Os: alinux2
      HeadNode:
        InstanceType: t3.medium
        Networking:
          SubnetId: subnet-xxxx
      Scheduling:
        Scheduler: slurm
        SlurmQueues:
          - Name: compute
            ComputeResources:
              - Name: hpc
                InstanceType: c5n.18xlarge
                MinCount: 0
                MaxCount: 10
            Networking:
              SubnetIds:
                - subnet-xxxx

- Create the cluster:

      pcluster create-cluster --cluster-name my-hpc-cluster --cluster-configuration pcluster-config.yaml

- SSH into the cluster and submit jobs using Slurm:

      ssh ec2-user@<HeadNodeIP>
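Before submitting, the job script has to exist on the head node. The following is a minimal sketch of what my_parallel_job.sh might contain, not a tuned configuration. It assumes the `compute` queue from the configuration file above (ParallelCluster exposes each queue as a Slurm partition of the same name). The node and task counts are illustrative, and `hostname` stands in for a real MPI program.

```shell
# Write a minimal Slurm batch script on the head node.
# Node and task counts are illustrative assumptions, not tuned values.
cat > my_parallel_job.sh <<'EOF'
#!/bin/bash
# Partition name matches the queue name in pcluster-config.yaml.
# --nodes must not exceed the queue's MaxCount (10 in the config above).
# In --output, %x expands to the job name and %j to the job ID.
#SBATCH --job-name=my-parallel-job
#SBATCH --partition=compute
#SBATCH --nodes=2
#SBATCH --ntasks-per-node=4
#SBATCH --output=%x-%j.out

# srun starts one copy of the command per task (2 nodes x 4 tasks = 8 here).
# Replace hostname with your own MPI binary or script.
srun hostname
EOF
```

Because the queue is configured with MinCount: 0, compute nodes are launched on demand, so the first job may sit in the queue for a few minutes. `squeue` shows its state in the meantime.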

      sbatch my_parallel_job.sh

Option 2: AWS Batch (for large-scale parallel jobs)

AWS Batch is ideal for embarrassingly parallel jobs, where each task runs independently of the others (e.g., video rendering, genomics, or machine learning inference).

Steps:

- Create a Compute Environment:

  - Go to AWS Batch → Compute environments → Create.

  - Choose EC2/Spot or Fargate.

  - Set min/max vCPUs.

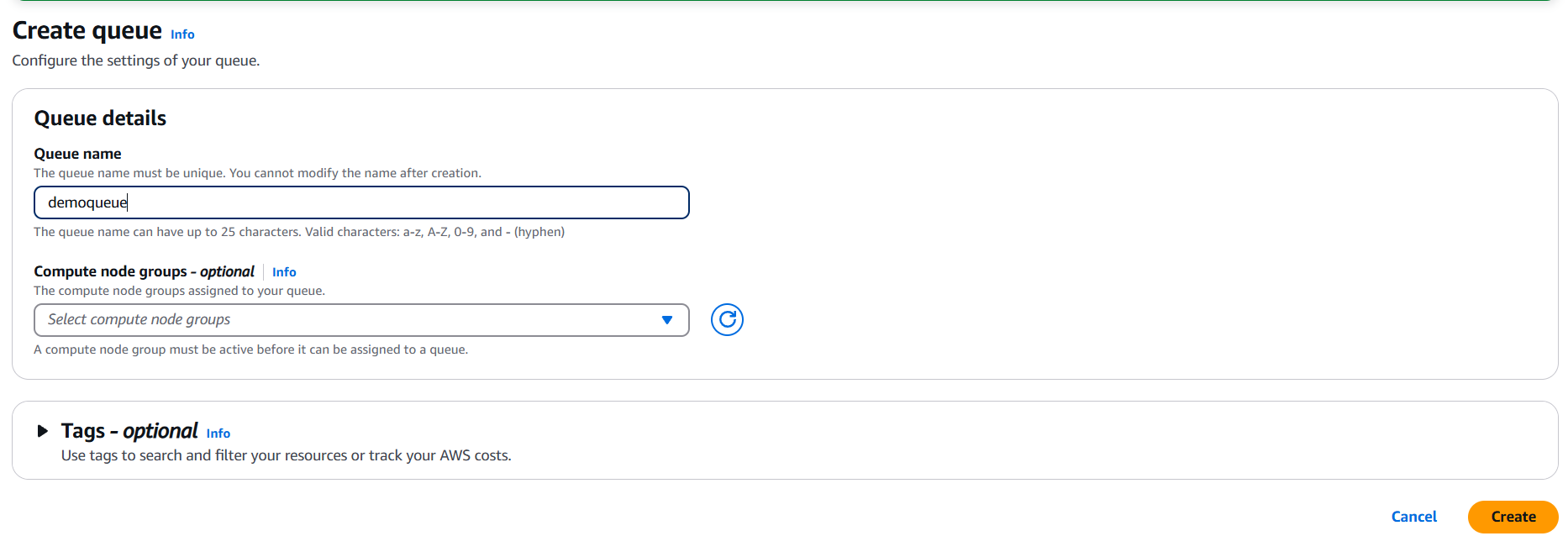

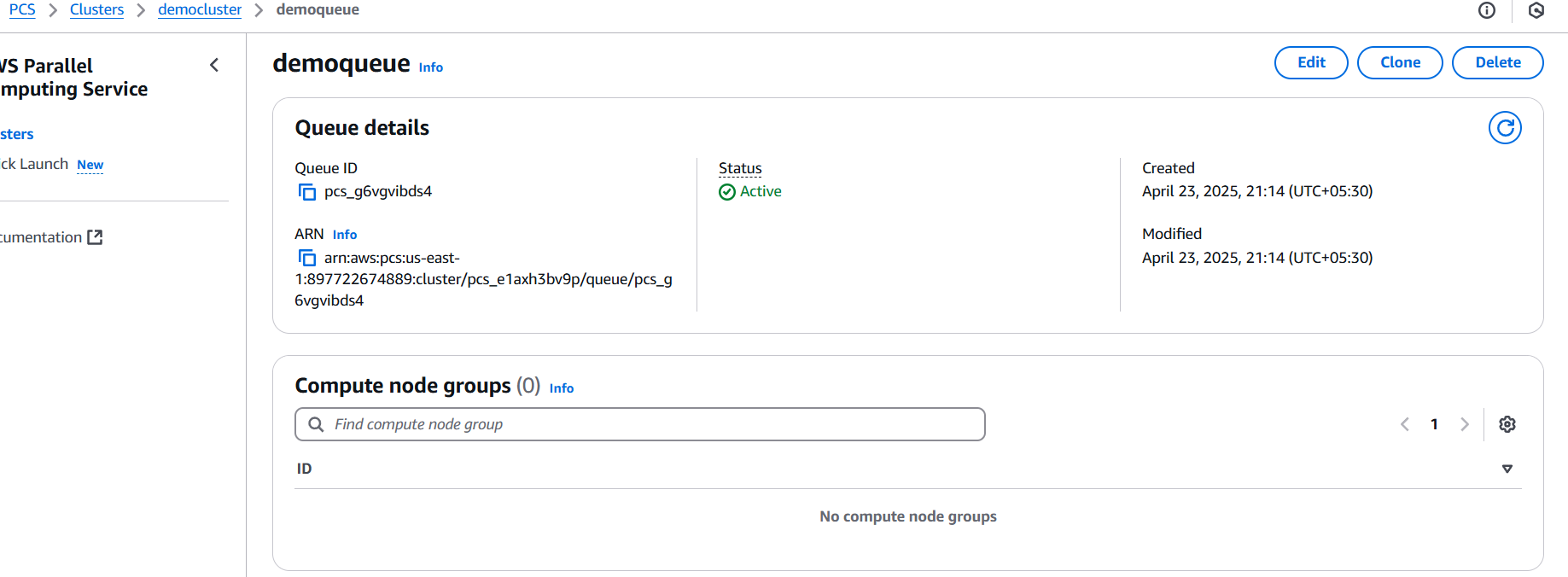

- Create a Job Queue:

  - Attach the Compute Environment.

  - Set priority and state.

- Register a Job Definition:

  - Define Docker image (or script).

  - Configure vCPUs, memory, environment variables, etc.

- Submit Jobs (in parallel): You can submit hundreds or thousands of jobs with varying input data.

      aws batch submit-job \
        --job-name my-job \
        --job-queue my-queue \
        --job-definition my-job-definition \
        --container-overrides 'command=["python", "script.py", "input1"]'

Repeat for different inputs using a script or AWS Step Functions.
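The job queue, job definition, and repeated submission can also be scripted with the AWS CLI instead of the console. This is a rough sketch under several assumptions: an EC2/Spot compute environment already exists under the hypothetical name `my-compute-env`, the hypothetical image `my-registry/my-image:latest` contains script.py, and 1 vCPU with 2048 MiB of memory is enough per job. Fargate job definitions need additional settings that are not shown here.

```shell
#!/bin/sh
# Sketch only: my-compute-env and my-registry/my-image:latest are hypothetical
# placeholders; my-queue and my-job-definition match the command shown above.

# Create a job queue attached to an existing compute environment.
create_queue() {
  aws batch create-job-queue \
    --job-queue-name my-queue \
    --state ENABLED \
    --priority 1 \
    --compute-environment-order order=1,computeEnvironment=my-compute-env
}

# Register a container job definition (1 vCPU, 2048 MiB of memory).
register_job_definition() {
  aws batch register-job-definition \
    --job-definition-name my-job-definition \
    --type container \
    --container-properties '{
      "image": "my-registry/my-image:latest",
      "command": ["python", "script.py"],
      "resourceRequirements": [
        {"type": "VCPU", "value": "1"},
        {"type": "MEMORY", "value": "2048"}
      ]
    }'
}

# Submit one job per input argument and print each job ID.
# Inputs are pasted into JSON as-is, so they must not contain quotes or backslashes.
submit_inputs() {
  i=0
  for input in "$@"; do
    i=$((i + 1))
    aws batch submit-job \
      --job-name "my-job-$i" \
      --job-queue my-queue \
      --job-definition my-job-definition \
      --container-overrides "{\"command\": [\"python\", \"script.py\", \"$input\"]}" \
      --query jobId --output text
  done
}

# Example run (needs AWS credentials and an existing compute environment):
#   create_queue && register_job_definition && submit_inputs input1 input2 input3
```

For very large sweeps, an AWS Batch array job (`--array-properties size=N` on `submit-job`) is an alternative to the loop. Each child job receives its index in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable, which script.py would then need to map to an input.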

Conclusion.

Parallel computing doesn’t have to be complicated, expensive, or limited to large research labs and enterprise environments. With AWS, developers and teams of all sizes can harness powerful, scalable infrastructure to run parallel workloads with ease. Whether you’re using AWS ParallelCluster for tightly coupled HPC tasks, AWS Batch for embarrassingly parallel jobs, or integrating Lambda and Step Functions for lightweight, event-driven processes, the flexibility is unmatched. By carefully choosing the right tools and designing your architecture around your specific workload requirements, you can unlock significant performance improvements, reduce runtime, and optimize costs. The cloud takes care of the heavy lifting—provisioning, scaling, and managing the underlying resources—so you can focus on what really matters: solving problems, innovating, and accelerating your project timelines. As your computing needs grow, AWS grows with you, offering a powerful foundation for both experimentation and production-grade systems. Now that you’ve got a roadmap in hand, you’re well on your way to building your own parallel computing service in the cloud.
