Introduction.

In today’s data-driven world, businesses generate vast amounts of raw data every second—from web clicks and app usage to IoT sensors and customer transactions. But raw data alone holds limited value. To turn it into meaningful insights, it must be collected, processed, transformed, and moved across different systems in a reliable and scalable way. This is where data pipelines come in. A data pipeline is a series of steps that automate the flow of data from source to destination, often including stages like ingestion, transformation, validation, storage, and visualization.

Building a data pipeline manually from scratch can be complex, especially when dealing with large volumes of data or multiple integration points. That’s why cloud providers like Amazon Web Services (AWS) offer a suite of tools designed to simplify and scale every part of the data pipeline process. With AWS, you can create robust, automated pipelines that ingest data from various sources (like databases, APIs, or streaming services), process it in real-time or batches, and deliver it to destinations such as Amazon Redshift, S3, or data lakes—all while ensuring security, monitoring, and cost-efficiency.

In this blog post, we’ll walk you through setting up a pipeline on AWS with AWS CodePipeline, which automates the source, build, and deploy stages for the code behind your data workflows. Services like AWS Glue, Amazon S3, AWS Lambda, Amazon Kinesis, and Amazon Redshift then handle ingestion, transformation, and storage. Whether your use case is real-time analytics, periodic ETL processing, or data lake population, AWS provides the flexibility to meet your needs.

You’ll learn how to design a pipeline architecture that meets your data requirements, automate tasks like data extraction and transformation, and monitor the entire workflow for reliability and performance. We’ll also highlight best practices for optimizing performance and reducing costs—so you don’t just build a pipeline, but build a smart one.

Whether you’re a data engineer, developer, analyst, or cloud architect, this guide will give you a hands-on look at how to implement modern data workflows using AWS-native tools. You don’t need to be an expert in every AWS service—we’ll keep things beginner-friendly and practical, with step-by-step instructions and code snippets where needed.

By the end of this post, you’ll not only understand how AWS data pipelines work, but also have a working prototype that you can adapt and expand for your real-world data projects. So if you’re ready to move beyond messy spreadsheets and manual scripts, and start building efficient, scalable data pipelines in the cloud—let’s dive in and make your data work for you.

Prerequisites:

- A source code repository (e.g., GitHub, CodeCommit, or Bitbucket)

- A build specification file (buildspec.yml) if using CodeBuild

- An S3 bucket or a deployment service (like ECS, Lambda, or Elastic Beanstalk)

- IAM permissions to create CodePipeline, CodeBuild, and related services
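
The last prerequisite can be sketched as an IAM identity policy. The action names below are real IAM actions, but which ones you actually need depends on your setup, so treat the selection as an assumption rather than a complete or least-privilege policy. The account ID and role name are hypothetical placeholders.

```python
import json


def setup_policy(service_role_arn: str) -> dict:
    """Build an identity policy for whoever creates the pipeline.

    Assumption: this action list is an illustrative starting point only.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ManagePipelinesAndBuilds",
                "Effect": "Allow",
                "Action": [
                    "codepipeline:CreatePipeline",
                    "codepipeline:GetPipeline",
                    "codepipeline:GetPipelineState",
                    "codepipeline:StartPipelineExecution",
                    "codebuild:CreateProject",
                    "codebuild:BatchGetProjects",
                ],
                "Resource": "*",  # assumption: narrow this to specific ARNs
            },
            {
                # Lets the user hand the service role to CodePipeline.
                "Sid": "PassServiceRole",
                "Effect": "Allow",
                "Action": "iam:PassRole",
                "Resource": service_role_arn,
            },
        ],
    }


# Hypothetical account ID and role name.
policy = setup_policy("arn:aws:iam::123456789012:role/MyAppPipelineRole")
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM policy editor once the Resource entries are tightened.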

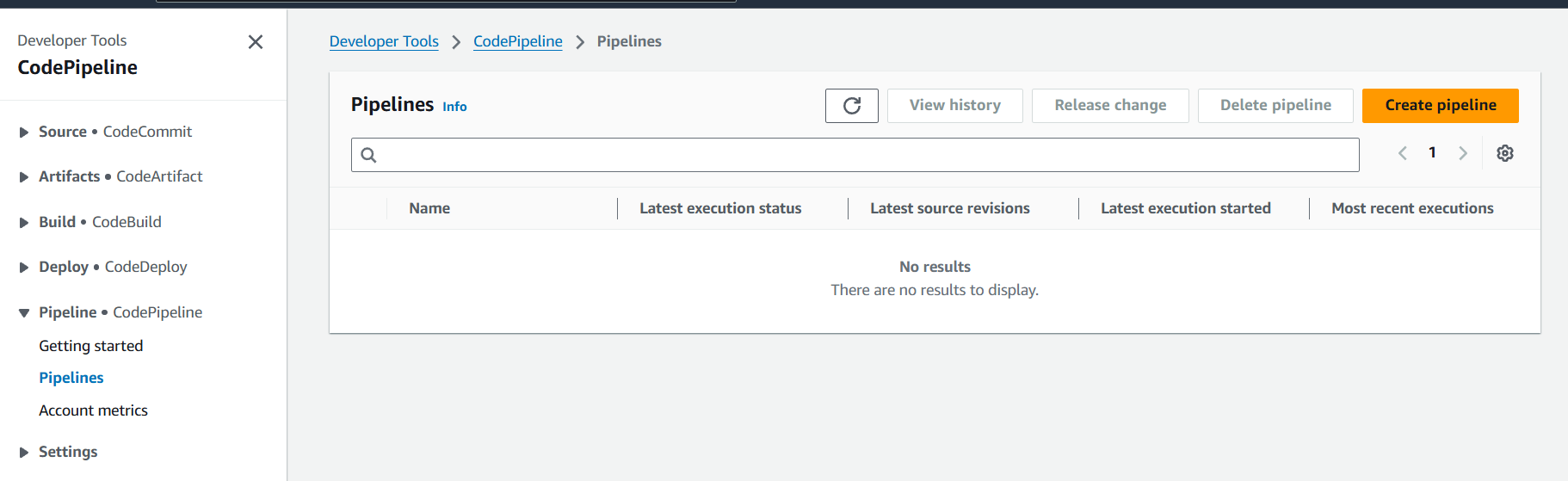

Step 1: Open the AWS CodePipeline Console

- Go to the AWS Console

- Navigate to CodePipeline

- Click Create pipeline

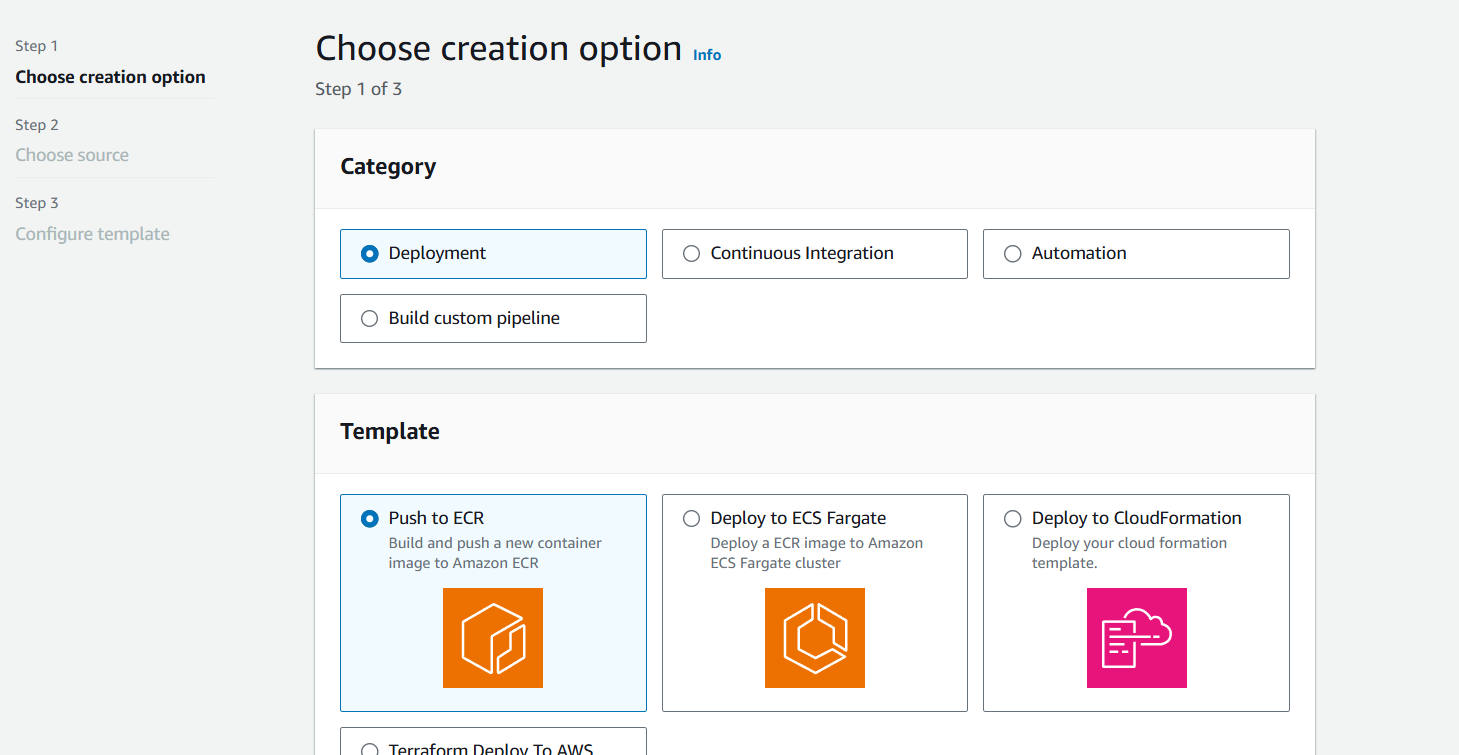

Step 2: Configure Your Pipeline

- Pipeline name: Name your pipeline (e.g., MyAppPipeline)

- Service role: Choose an existing role or let AWS create one for you (recommended)

- Artifact store: AWS will use an S3 bucket to store artifacts (you can specify one)

Click Next.
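
If you would rather script this step than click through the console, the same three settings map onto the top level of a CodePipeline pipeline definition. This is a minimal sketch: the role ARN and bucket name are hypothetical placeholders, and the stages list is left empty to be filled in by the later steps.

```python
import json


def pipeline_settings(name: str, role_arn: str, artifact_bucket: str) -> dict:
    """Return the top-level settings chosen in this step."""
    return {
        "name": name,
        "roleArn": role_arn,  # the service role CodePipeline assumes
        "artifactStore": {"type": "S3", "location": artifact_bucket},
        "stages": [],  # source, build, and deploy stages are added later
    }


settings = pipeline_settings(
    name="MyAppPipeline",
    role_arn="arn:aws:iam::123456789012:role/MyAppPipelineRole",  # placeholder
    artifact_bucket="my-app-pipeline-artifacts",  # placeholder
)
print(json.dumps(settings, indent=2))
```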

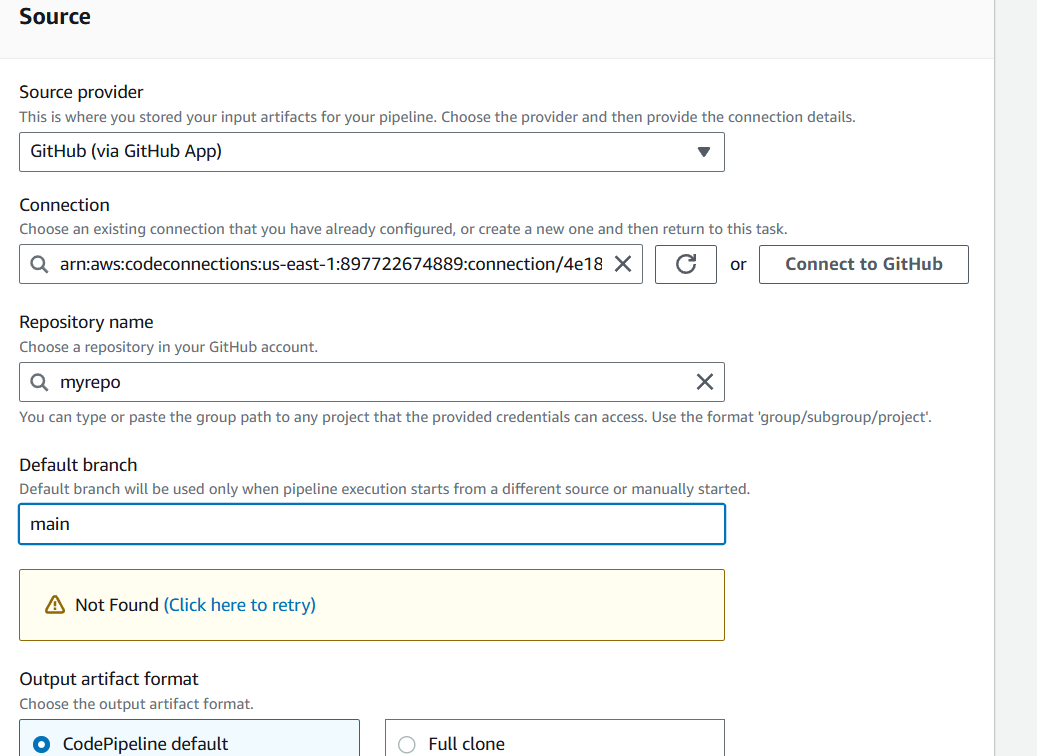

Step 3: Add a Source Stage

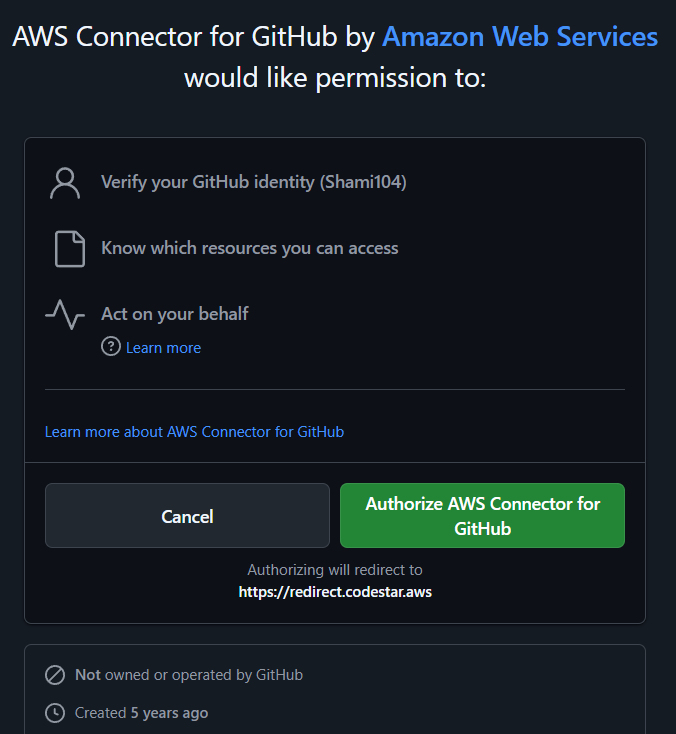

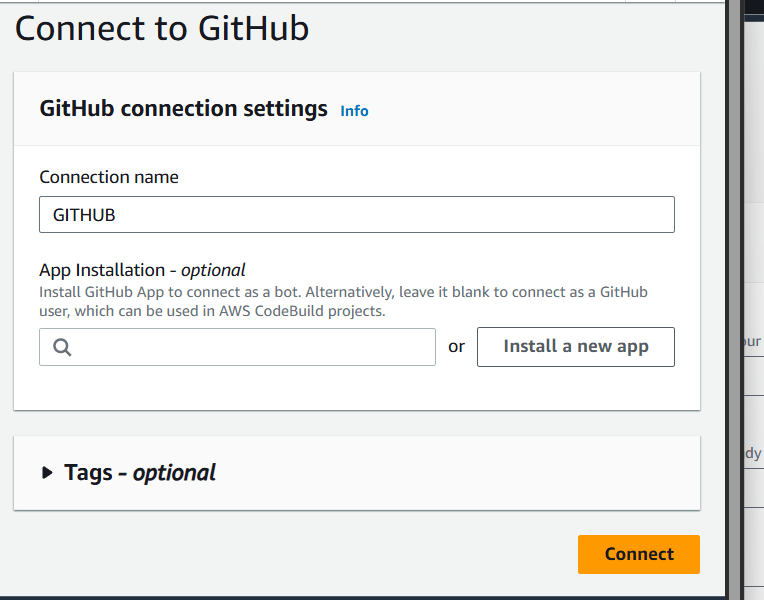

- Source provider: Choose your source (e.g., GitHub, CodeCommit, Bitbucket)

- Connect to your repository (OAuth or via token)

- Select the repo and branch to watch for changes

- Click Next
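
As a rough equivalent of the choices above, a source stage in a pipeline definition might look like the following. This sketch assumes a GitHub or Bitbucket repository reached through a connection (the CodeStarSourceConnection provider); a CodeCommit source would use a different provider and configuration keys. The connection ARN, repository, and artifact name are hypothetical.

```python
import json


def source_stage(connection_arn: str, repo: str, branch: str) -> dict:
    """Describe a source stage that watches one branch of one repository."""
    return {
        "name": "Source",
        "actions": [
            {
                "name": "Source",
                "actionTypeId": {
                    "category": "Source",
                    "owner": "AWS",
                    "provider": "CodeStarSourceConnection",
                    "version": "1",
                },
                "configuration": {
                    "ConnectionArn": connection_arn,
                    "FullRepositoryId": repo,  # "owner/repository"
                    "BranchName": branch,
                },
                # Later stages refer to the checked-out code by this name.
                "outputArtifacts": [{"name": "SourceOutput"}],
                "runOrder": 1,
            }
        ],
    }


stage = source_stage(
    # Placeholder ARN; copy the real one from your connection's detail page.
    connection_arn="arn:aws:codestar-connections:us-east-1:123456789012:connection/EXAMPLE-ID",
    repo="my-org/my-app",  # hypothetical repository
    branch="main",
)
print(json.dumps(stage, indent=2))
```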

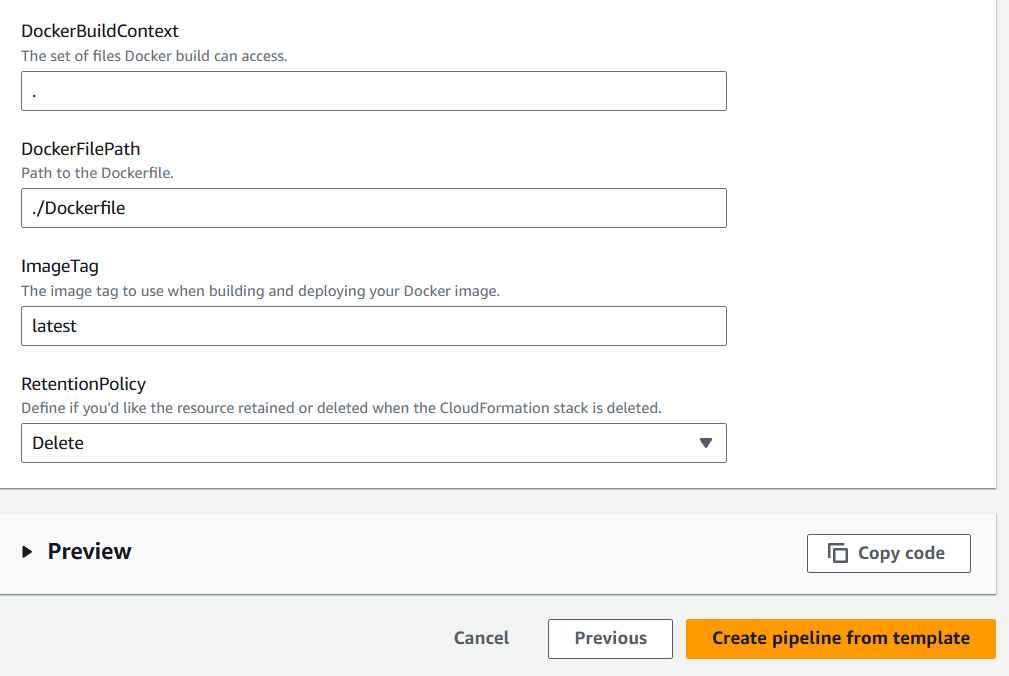

Step 4: Add a Build Stage (Optional but common)

- Build provider: Choose AWS CodeBuild

- Project name: Select an existing build project or create a new one

- If creating a new one, set the environment, runtime, and permissions

- Make sure you have a buildspec.yml in the root of your repo

Click Next
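
To make the build step more concrete, here is a small sketch that writes a starter buildspec.yml and describes the matching build stage. The buildspec commands assume a Python project tested with pytest, which the post does not specify, so swap them for your own. The project name and artifact names are also hypothetical.

```python
from pathlib import Path

# Assumption: a Python project with a requirements.txt, tested with pytest.
BUILDSPEC = """\
version: 0.2

phases:
  install:
    commands:
      - pip install -r requirements.txt
  build:
    commands:
      - python -m pytest
artifacts:
  files:
    - '**/*'
"""


def write_buildspec(repo_root: Path) -> Path:
    """Write the starter buildspec.yml into the repository root."""
    path = repo_root / "buildspec.yml"
    path.write_text(BUILDSPEC, encoding="utf-8")
    return path


def build_stage(project_name: str) -> dict:
    """Describe a build stage that runs an existing CodeBuild project."""
    return {
        "name": "Build",
        "actions": [
            {
                "name": "Build",
                "actionTypeId": {
                    "category": "Build",
                    "owner": "AWS",
                    "provider": "CodeBuild",
                    "version": "1",
                },
                "configuration": {"ProjectName": project_name},
                # Assumes the source stage named its output "SourceOutput".
                "inputArtifacts": [{"name": "SourceOutput"}],
                "outputArtifacts": [{"name": "BuildOutput"}],
                "runOrder": 1,
            }
        ],
    }
```

Calling write_buildspec(Path(".")) from the repository root creates the file, and build_stage("MyAppBuild") returns the stage for a CodeBuild project with that (hypothetical) name.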

Step 5: Add a Deploy Stage

Choose your deployment target:

- Amazon ECS (for container-based apps)

- AWS Lambda

- Elastic Beanstalk

- EC2 / S3

- You can also skip this step and deploy manually later, as long as you added a build stage (a pipeline needs at least two stages)

Configure the deployment settings based on the service you chose.

Click Next
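
As one example of the deployment settings, the sketch below describes a deploy stage that unpacks the build output into an S3 bucket. S3 is only one of the targets listed above; ECS, Lambda, and Elastic Beanstalk use different providers and configuration keys. The bucket and artifact names are hypothetical.

```python
import json


def s3_deploy_stage(bucket: str, input_artifact: str = "BuildOutput") -> dict:
    """Describe a deploy stage that extracts an artifact into an S3 bucket."""
    return {
        "name": "Deploy",
        "actions": [
            {
                "name": "DeployToS3",
                "actionTypeId": {
                    "category": "Deploy",
                    "owner": "AWS",
                    "provider": "S3",
                    "version": "1",
                },
                "configuration": {
                    "BucketName": bucket,
                    # Unzip the artifact instead of uploading it as one object.
                    "Extract": "true",
                },
                "inputArtifacts": [{"name": input_artifact}],
                "runOrder": 1,
            }
        ],
    }


deploy = s3_deploy_stage("my-app-deploy-bucket")  # placeholder bucket name
print(json.dumps(deploy, indent=2))
```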

Step 6: Review and Create

- Review all stages

- Click Create pipeline

- The pipeline will start running immediately and show you the progress of each stage
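
The review step can also be done in code before the pipeline is created. The sketch below checks two structural rules (at least two stages, and only source actions in the first stage) and then hands the definition to a CodePipeline client. The helper names are my own, and the client is passed in as a parameter so the functions can be exercised without AWS credentials.

```python
def check_definition(definition: dict) -> None:
    """Raise ValueError if the definition breaks basic structural rules."""
    stages = definition.get("stages", [])
    if len(stages) < 2:
        raise ValueError("a pipeline needs at least two stages")
    first_categories = {
        action["actionTypeId"]["category"] for action in stages[0]["actions"]
    }
    if first_categories != {"Source"}:
        raise ValueError("the first stage must contain only source actions")


def create_pipeline(client, definition: dict) -> str:
    """Validate the definition, create the pipeline, and return its name."""
    check_definition(definition)
    response = client.create_pipeline(pipeline=definition)
    return response["pipeline"]["name"]
```

With boto3 installed and credentials configured, the client would be boto3.client("codepipeline"), and the definition would combine the pipeline name, role, artifact store, and stages chosen in the earlier steps.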

Test Your Pipeline

- Push code to your source repo (e.g., GitHub)

- Watch as CodePipeline automatically pulls the changes, builds them, and deploys to your environment
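
Instead of watching the console, you can read the same progress from the pipeline state. The sketch below condenses a state response into one status per stage. The sample data is hand-written for illustration rather than real output, and "NotStarted" is my own label for a stage with no execution yet, not an AWS status value.

```python
def summarize_state(state: dict) -> dict:
    """Map each stage name to the status of its latest execution."""
    summary = {}
    for stage in state.get("stageStates", []):
        latest = stage.get("latestExecution")
        # A stage that has never run has no latestExecution entry.
        summary[stage["stageName"]] = latest["status"] if latest else "NotStarted"
    return summary


# Hand-written sample in the shape of a get_pipeline_state response.
sample_state = {
    "pipelineName": "MyAppPipeline",
    "stageStates": [
        {"stageName": "Source", "latestExecution": {"status": "Succeeded"}},
        {"stageName": "Build", "latestExecution": {"status": "InProgress"}},
        {"stageName": "Deploy"},
    ],
}

for stage_name, status in summarize_state(sample_state).items():
    print(f"{stage_name}: {status}")
```

With boto3, a real response would come from boto3.client("codepipeline").get_pipeline_state(name="MyAppPipeline").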

Conclusion.

Building a data pipeline on AWS might seem overwhelming at first, but with the right approach and the tools AWS offers, it becomes not just manageable but scalable, secure, and efficient. Whether you’re moving data from transactional systems into a data lake, cleaning and transforming it for analytics, or processing real-time streams for immediate insights, AWS provides a full ecosystem to support every step of the journey.

In this guide, you’ve seen how to set up a pipeline in AWS CodePipeline with source, build, and deploy stages, and how to test it by pushing a change to your repository. Services like Amazon S3, AWS Glue, Lambda, Kinesis, and Redshift can then handle ingestion, transformation, and storage in the data workflows you deploy through it.

More importantly, you now have a foundation to build on. Whether you’re handling small batch jobs or designing a multi-layered enterprise data architecture, the principles and services discussed here will scale with your needs. As your data grows, AWS makes it easy to adapt and extend your pipeline with features like workflow orchestration (using Step Functions), real-time processing (using Amazon Managed Service for Apache Flink, formerly Kinesis Data Analytics), and even machine learning integration (with SageMaker).

So what’s next? Experiment, iterate, and refine. Start with small, meaningful pipelines and scale them as your use cases evolve. And if you’re ready to go deeper, explore tools like AWS Data Pipeline, AWS Step Functions, or Apache Airflow on Amazon MWAA for even more control over your data flow.

Thanks for reading—now go build something data-driven, scalable, and awesome!
