Introduction.

Building scalable real-time data pipelines is crucial for businesses that need to process and analyze large volumes of data as it streams in. Docker, a platform that allows developers to package applications and their dependencies into containers, has become an essential tool for managing complex systems. By utilizing Docker, you can create isolated, consistent environments for each component of a real-time data pipeline, enabling scalability and flexibility.

In this post, we will explore how Docker can streamline the development and deployment of real-time data pipelines. We’ll cover the key steps for setting up a scalable pipeline, from containerizing data processing tools to integrating them into a microservices architecture. By the end, you’ll have a clearer understanding of how Docker enables faster, more efficient data processing without compromising on performance or reliability.

Whether you are working with real-time analytics, streaming data, or complex event processing, Docker can help you manage workloads more efficiently. This article will also discuss best practices, common pitfalls, and how to optimize Docker containers for real-time data workflows. Let’s dive into the world of scalable real-time data pipelines with Docker!

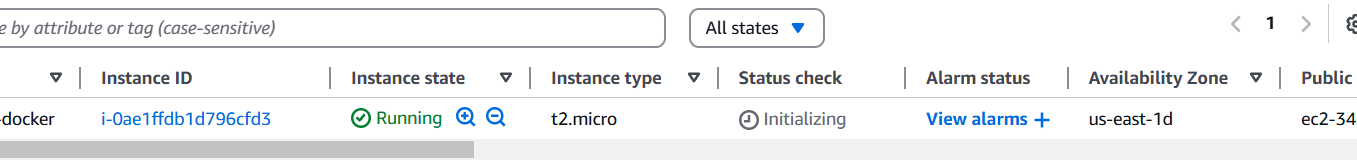

STEP 1: Create an instance.

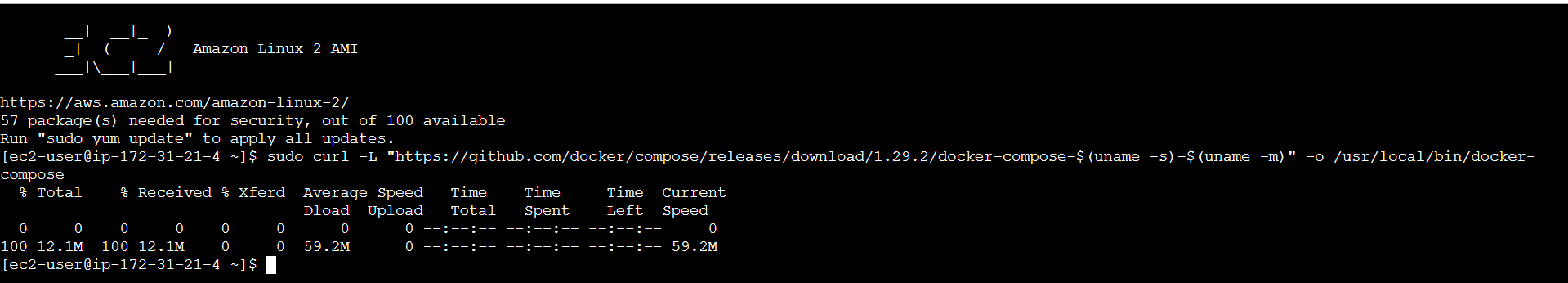

STEP 2: Connect to the instance over SSH.

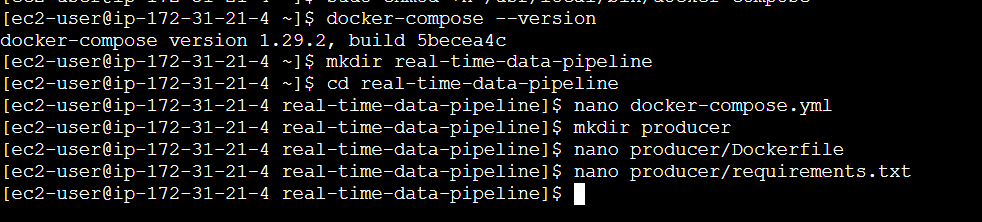

- Install Docker Compose.

sudo curl -L "https://github.com/docker/compose/releases/download/1.29.2/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

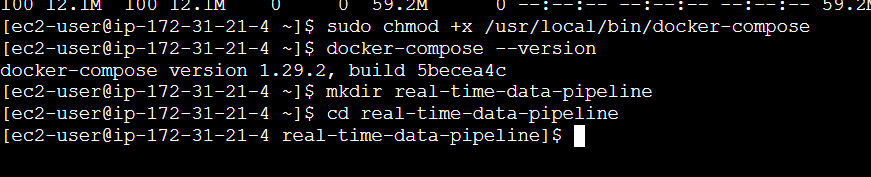

sudo chmod +x /usr/local/bin/docker-compose

docker-compose --version

STEP 3: Complete Docker Compose Setup.

mkdir real-time-data-pipeline

cd real-time-data-pipeline

STEP 4: Create a file.

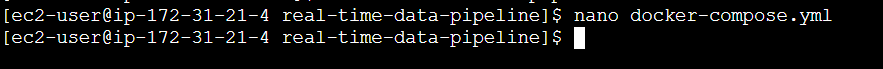

nano docker-compose.yml

    version: '3.8'

    services:
      zookeeper:
        image: wurstmeister/zookeeper:3.4.6
        ports:
          - "2181:2181"

      kafka:
        image: wurstmeister/kafka:2.13-2.7.0
        ports:
          - "9093:9093"
        environment:
          # INSIDE is used by the other containers, OUTSIDE by clients on the host.
          # Kafka requires each listener to have its own port.
          KAFKA_ADVERTISED_LISTENERS: INSIDE://kafka:9092,OUTSIDE://localhost:9093
          KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: INSIDE:PLAINTEXT,OUTSIDE:PLAINTEXT
          KAFKA_LISTENERS: INSIDE://0.0.0.0:9092,OUTSIDE://0.0.0.0:9093
          KAFKA_INTER_BROKER_LISTENER_NAME: INSIDE
          KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181
        depends_on:
          - zookeeper

      producer:
        build: ./producer
        depends_on:
          - kafka

      consumer:
        build: ./consumer
        depends_on:
          - kafka

      db:
        image: postgres:13
        environment:
          POSTGRES_USER: user
          POSTGRES_PASSWORD: password
          POSTGRES_DB: my_database # This creates the database
        ports:
          - "5432:5432"

      grafana:
        image: grafana/grafana:latest
        ports:
          - "3000:3000"
        volumes:
          - grafana-storage:/var/lib/grafana
        depends_on:
          - db

    volumes:
      grafana-storage:
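Note that `depends_on` only controls the order in which containers start; it does not wait until Kafka or PostgreSQL is actually accepting connections. The following is a minimal sketch of a readiness check that the producer or consumer could run before connecting. It uses only the standard library, and the function name `wait_for_port` and its default timeouts are illustrative assumptions rather than part of the original setup.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # create_connection raises OSError while nothing is listening yet
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

Inside a container, a call such as `wait_for_port("kafka", 9092)` or `wait_for_port("db", 5432)` would use the Compose service names as hostnames. An open port does not guarantee the service has finished initializing, so this is a first line of defense, not a full health check.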

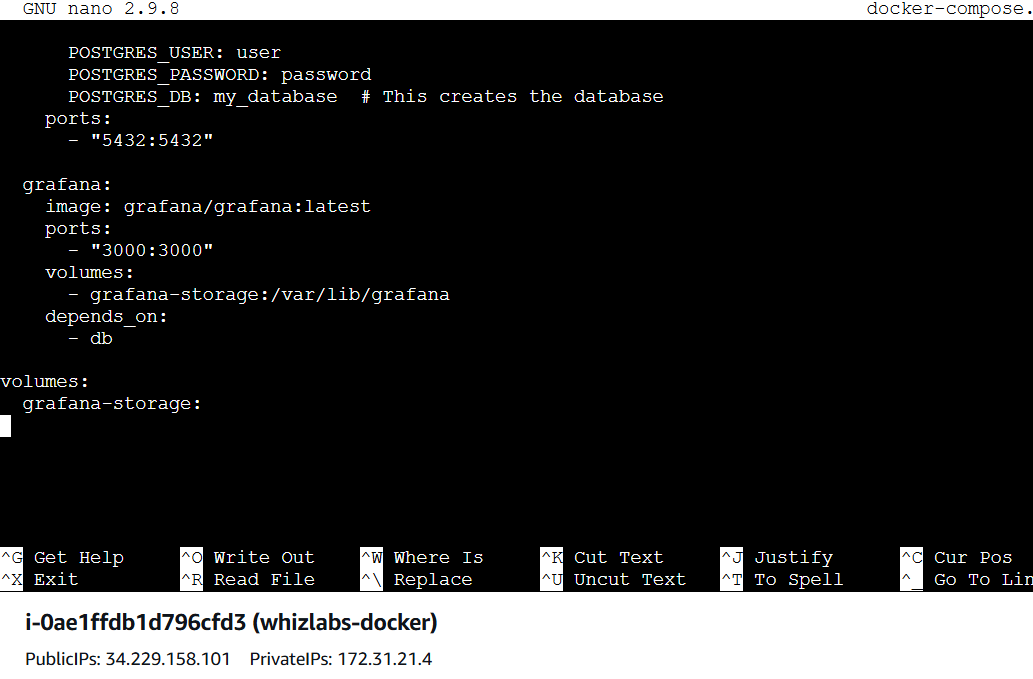

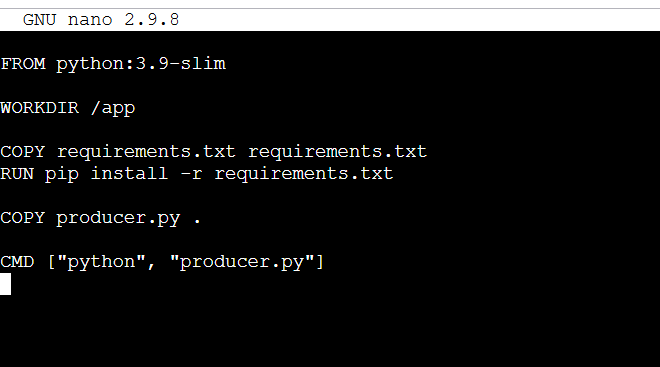

STEP 5: Create Producer and Consumer Services.

mkdir producer

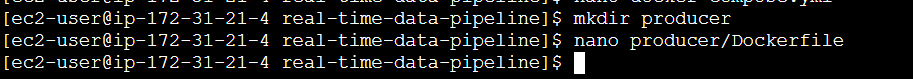

nano producer/Dockerfile

    FROM python:3.9-slim
    WORKDIR /app
    COPY requirements.txt requirements.txt
    RUN pip install -r requirements.txt
    COPY producer.py .
    CMD ["python", "producer.py"]

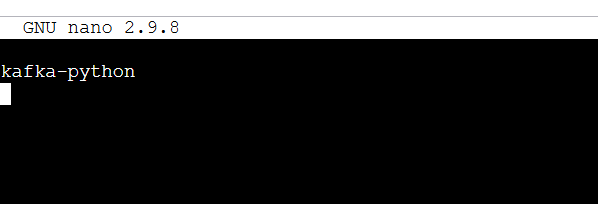

STEP 6: Create the producer requirements.txt file.

nano producer/requirements.txt

    kafka-python

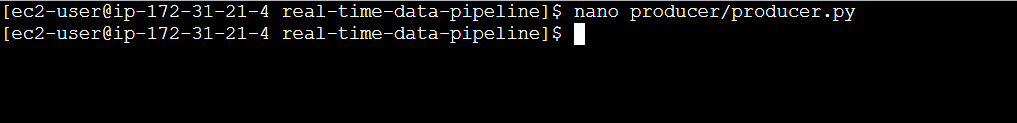

STEP 7: Create the producer.py file.

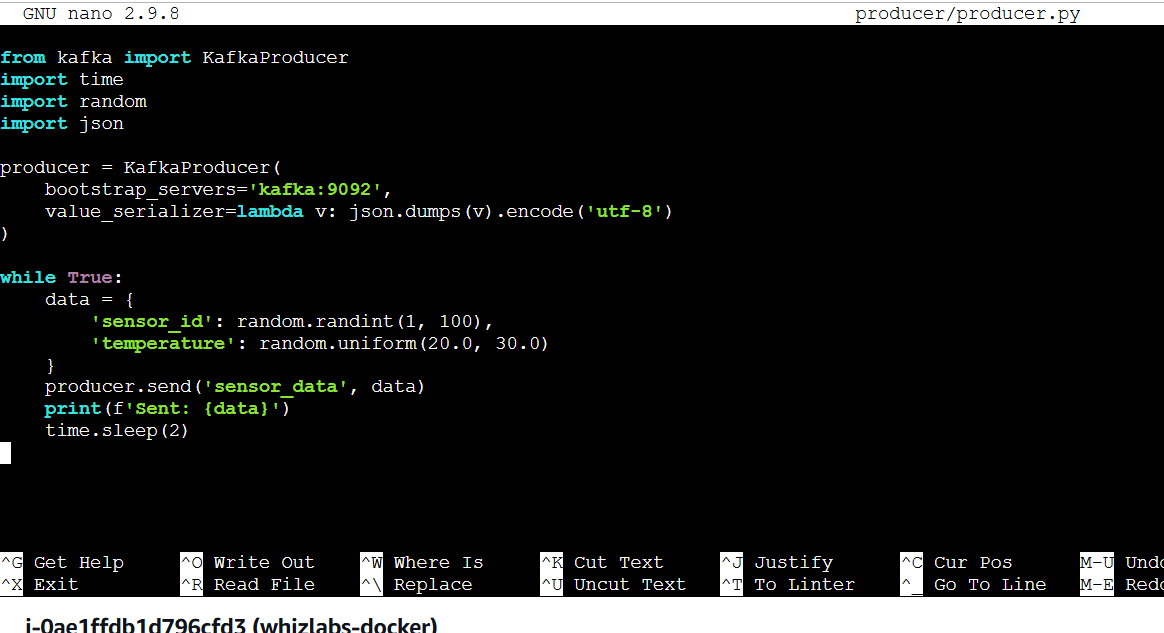

nano producer/producer.py

    from kafka import KafkaProducer
    import time
    import random
    import json

    # Connect to the broker by its Compose service name and send values as JSON
    producer = KafkaProducer(
        bootstrap_servers='kafka:9092',
        value_serializer=lambda v: json.dumps(v).encode('utf-8')
    )

    # Emit one simulated sensor reading every two seconds
    while True:
        data = {
            'sensor_id': random.randint(1, 100),
            'temperature': random.uniform(20.0, 30.0)
        }
        producer.send('sensor_data', data)
        print(f'Sent: {data}')
        time.sleep(2)
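The producer's `value_serializer` and the consumer's `value_deserializer` have to be inverses of each other, otherwise the consumer cannot read what the producer sends. Here is a minimal sketch of that pair which runs without a broker. The helper names `serialize`, `deserialize`, and `make_reading` are hypothetical and only mirror the lambdas and the payload used in producer.py and consumer.py.

```python
import json
import random


def serialize(value: dict) -> bytes:
    """Same transformation as the producer's value_serializer."""
    return json.dumps(value).encode("utf-8")


def deserialize(raw: bytes) -> dict:
    """Same transformation as the consumer's value_deserializer."""
    return json.loads(raw)


def make_reading() -> dict:
    """Build one simulated reading in the shape producer.py sends."""
    return {
        "sensor_id": random.randint(1, 100),
        "temperature": random.uniform(20.0, 30.0),
    }


reading = make_reading()
payload = serialize(reading)
# The round trip should give back exactly what was sent
assert deserialize(payload) == reading
print(f"{len(payload)} bytes on the wire: {payload!r}")
```

Checking the round trip locally like this is a quick way to catch a mismatch before building the images.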

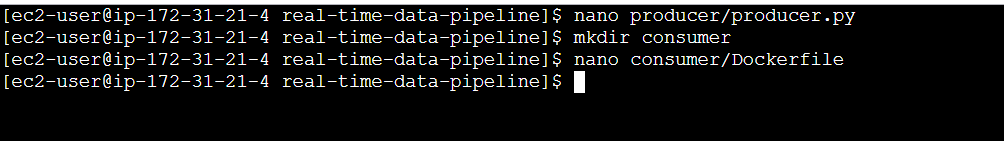

STEP 8: Create the consumer directory and its Dockerfile, then save the file.

mkdir consumer

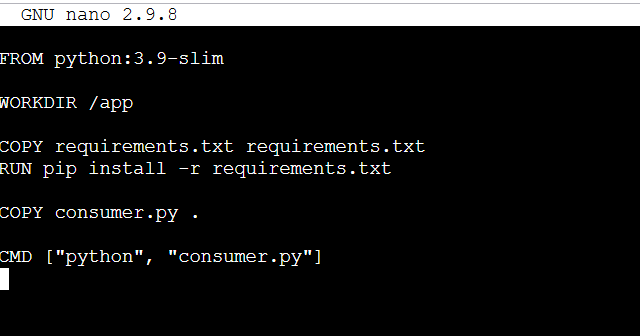

nano consumer/Dockerfile

    FROM python:3.9-slim
    WORKDIR /app
    COPY requirements.txt requirements.txt
    RUN pip install -r requirements.txt
    COPY consumer.py .
    CMD ["python", "consumer.py"]

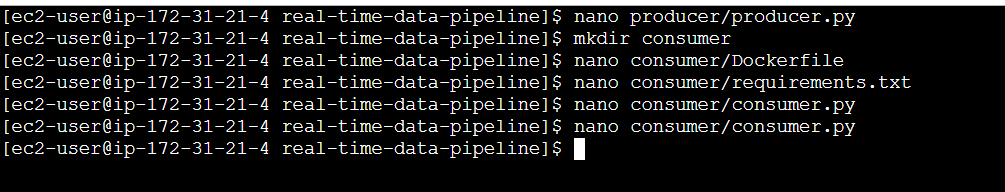

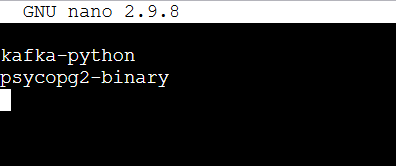

STEP 9: Create consumer requirements.txt file.

nano consumer/requirements.txt

    kafka-python
    psycopg2-binary

Create the consumer.py file:

nano consumer/consumer.py

    from kafka import KafkaConsumer
    import psycopg2
    import json

    # Set up the Kafka consumer to listen to the 'sensor_data' topic
    consumer = KafkaConsumer(
        'sensor_data',
        bootstrap_servers='kafka:9092',
        value_deserializer=lambda v: json.loads(v)
    )

    # Establish a connection to the PostgreSQL database.
    # Note: this uses the default 'postgres' database, not the 'my_database'
    # created by POSTGRES_DB in docker-compose.yml.
    conn = psycopg2.connect(
        dbname="postgres",
        user="user",
        password="password",
        host="db"
    )
    cur = conn.cursor()

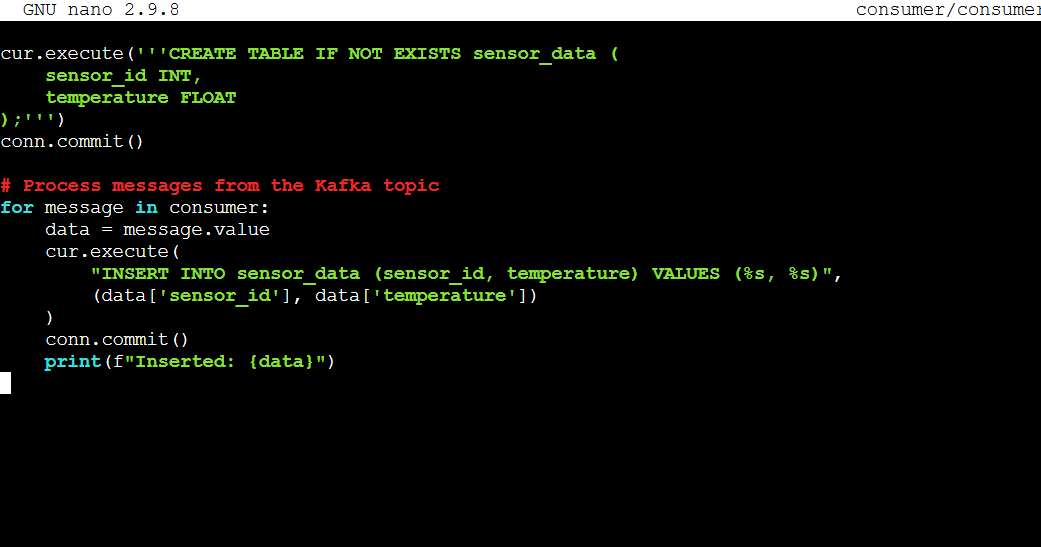

    # Create the table for storing sensor data if it doesn't exist
    cur.execute('''CREATE TABLE IF NOT EXISTS sensor_data (
        sensor_id INT,
        temperature FLOAT
    );''')
    conn.commit()

    # Process messages from the Kafka topic
    for message in consumer:
        data = message.value
        cur.execute(
            "INSERT INTO sensor_data (sensor_id, temperature) VALUES (%s, %s)",
            (data['sensor_id'], data['temperature'])
        )
        conn.commit()
        print(f"Inserted: {data}")
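The consumer loop assumes every message carries both fields with usable types. Here is a hedged sketch of a guard that could run before the INSERT, so that one malformed message does not stop the consumer. `parse_reading` is a hypothetical helper, not part of kafka-python or psycopg2.

```python
from typing import Optional, Tuple


def parse_reading(data: object) -> Optional[Tuple[int, float]]:
    """Return (sensor_id, temperature) if the message is usable, else None."""
    if not isinstance(data, dict):
        return None
    try:
        sensor_id = int(data["sensor_id"])
        temperature = float(data["temperature"])
    except (KeyError, TypeError, ValueError):
        return None
    return sensor_id, temperature


# Example messages: one valid, one missing a field, one with a bad type
messages = [
    {"sensor_id": 12, "temperature": 24.3},
    {"sensor_id": 12},
    {"sensor_id": "abc", "temperature": 24.3},
]
rows = [row for row in map(parse_reading, messages) if row is not None]
print(rows)
```

In consumer.py, the tuple returned by `parse_reading(message.value)` could be passed directly as the parameters of `cur.execute`, and messages for which it returns None could be logged and skipped.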

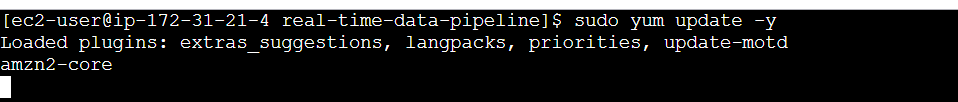

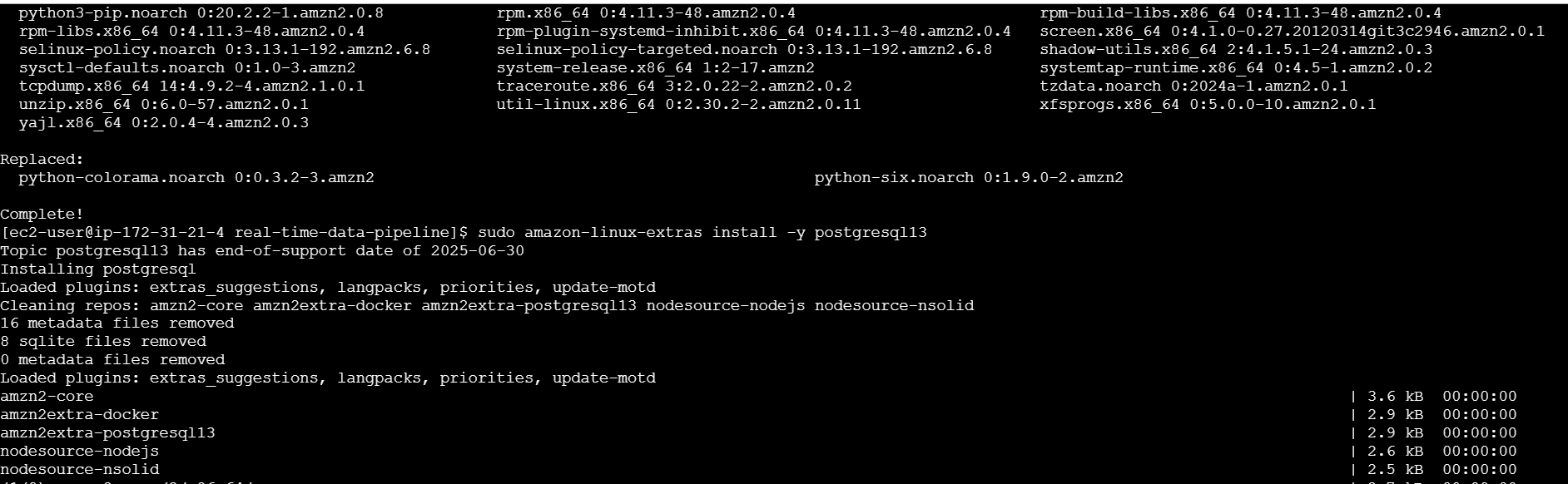

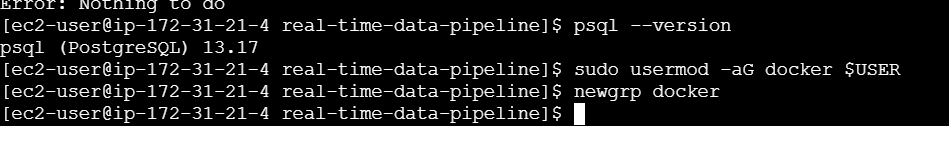

STEP 12: Install PostgreSQL 13 on Amazon Linux 2 and start the pipeline.

sudo yum update -y

sudo amazon-linux-extras install -y postgresql13

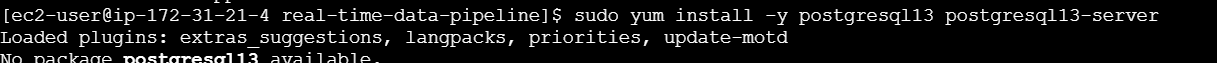

sudo yum install -y postgresql13 postgresql13-server

psql --version

Add your user to the docker group so that Docker commands run without sudo:

sudo usermod -aG docker $USER

newgrp docker

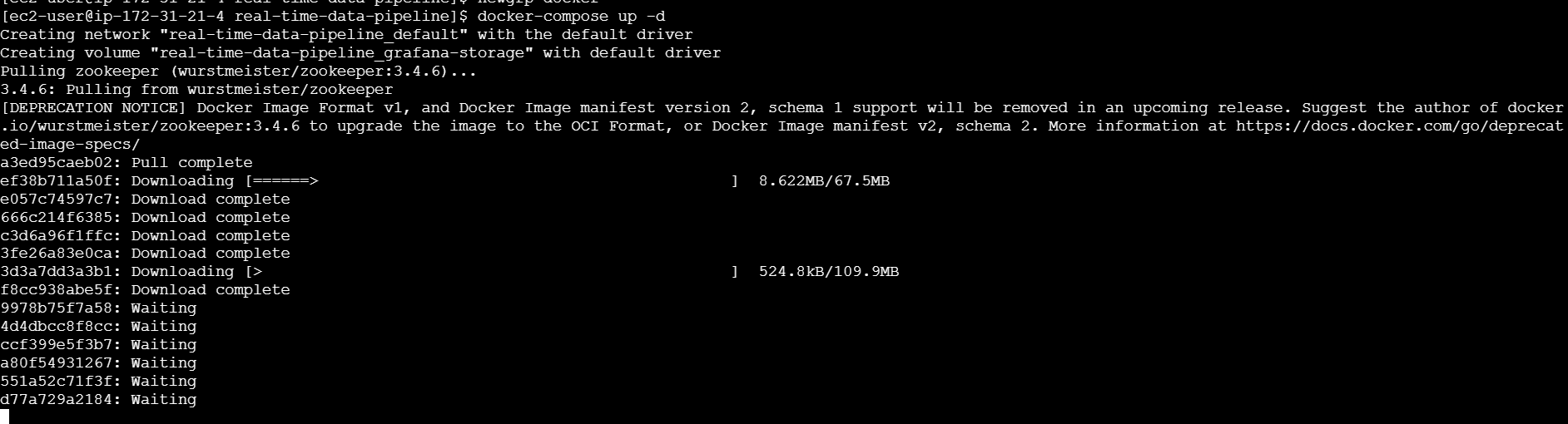

Build the images and start all services in the background:

docker-compose up -d

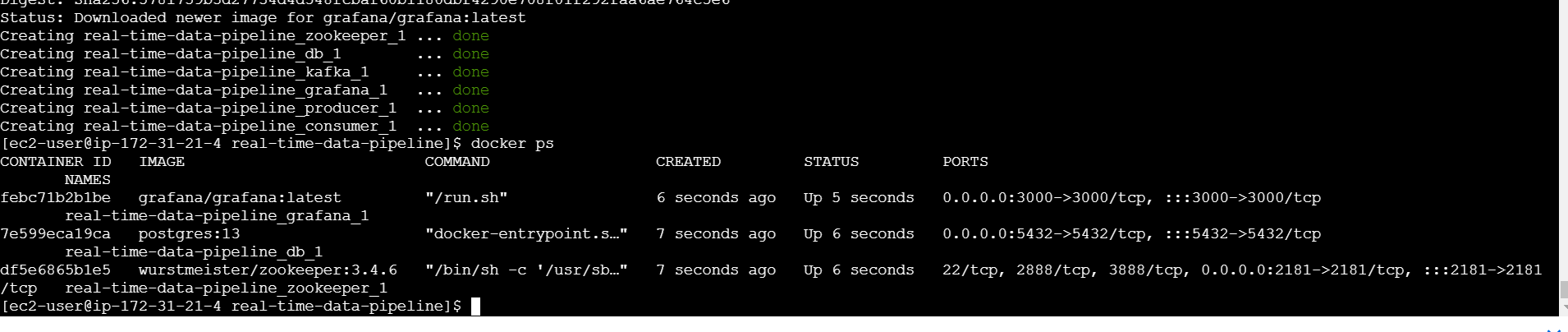

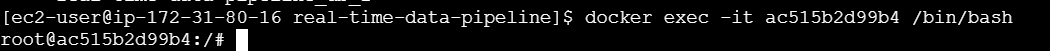

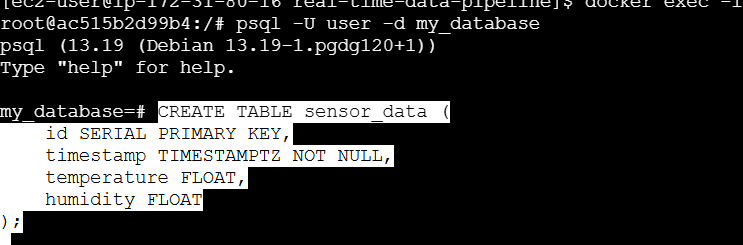

STEP 13: Access PostgreSQL Container.

docker ps

docker exec -it <container_id> /bin/bash

psql -U user -d my_database

Create a Table inside the Database:

    CREATE TABLE sensor_data (
        id SERIAL PRIMARY KEY,
        timestamp TIMESTAMPTZ NOT NULL,
        temperature FLOAT,
        humidity FLOAT
    );

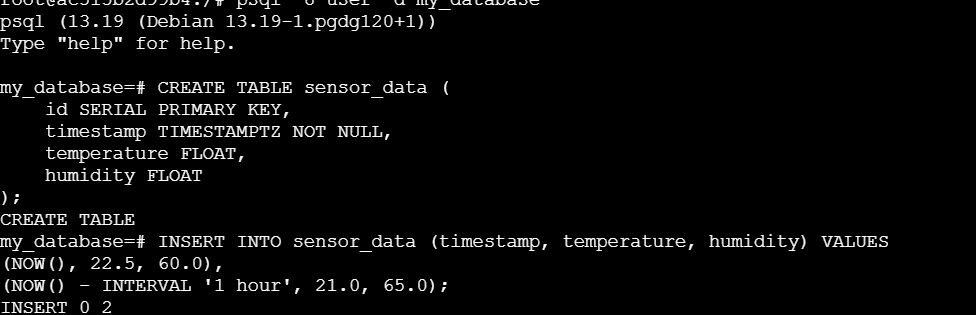

Insert Sample Data to the table:

    INSERT INTO sensor_data (timestamp, temperature, humidity) VALUES
        (NOW(), 22.5, 60.0),
        (NOW() - INTERVAL '1 hour', 21.0, 65.0);
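This table has different columns from the one consumer.py creates (`sensor_id`, `temperature`), and the consumer writes to the `postgres` database rather than `my_database`. If you want the consumer to feed this table instead, a minimal sketch of the row mapping might look like the following. `INSERT_SQL` and `to_row` are hypothetical names, and `humidity` stays None because producer.py does not send it.

```python
from datetime import datetime, timezone
from typing import Optional, Tuple

# Parameterized statement in psycopg2's %s placeholder style
INSERT_SQL = (
    "INSERT INTO sensor_data (timestamp, temperature, humidity) "
    "VALUES (%s, %s, %s)"
)


def to_row(data: dict) -> Tuple[datetime, float, Optional[float]]:
    """Map one Kafka message to the (timestamp, temperature, humidity) columns."""
    # A timezone-aware datetime avoids ambiguity in a TIMESTAMPTZ column
    received_at = datetime.now(timezone.utc)
    humidity = data.get("humidity")
    return (
        received_at,
        float(data["temperature"]),
        float(humidity) if humidity is not None else None,
    )


row = to_row({"sensor_id": 5, "temperature": 23.1})
print(INSERT_SQL, row)
```

With a psycopg2 cursor connected to `my_database`, this could be used as `cur.execute(INSERT_SQL, to_row(message.value))`. The timestamp here is the time the consumer received the message, which is an assumption; a timestamp set by the producer would be more accurate.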

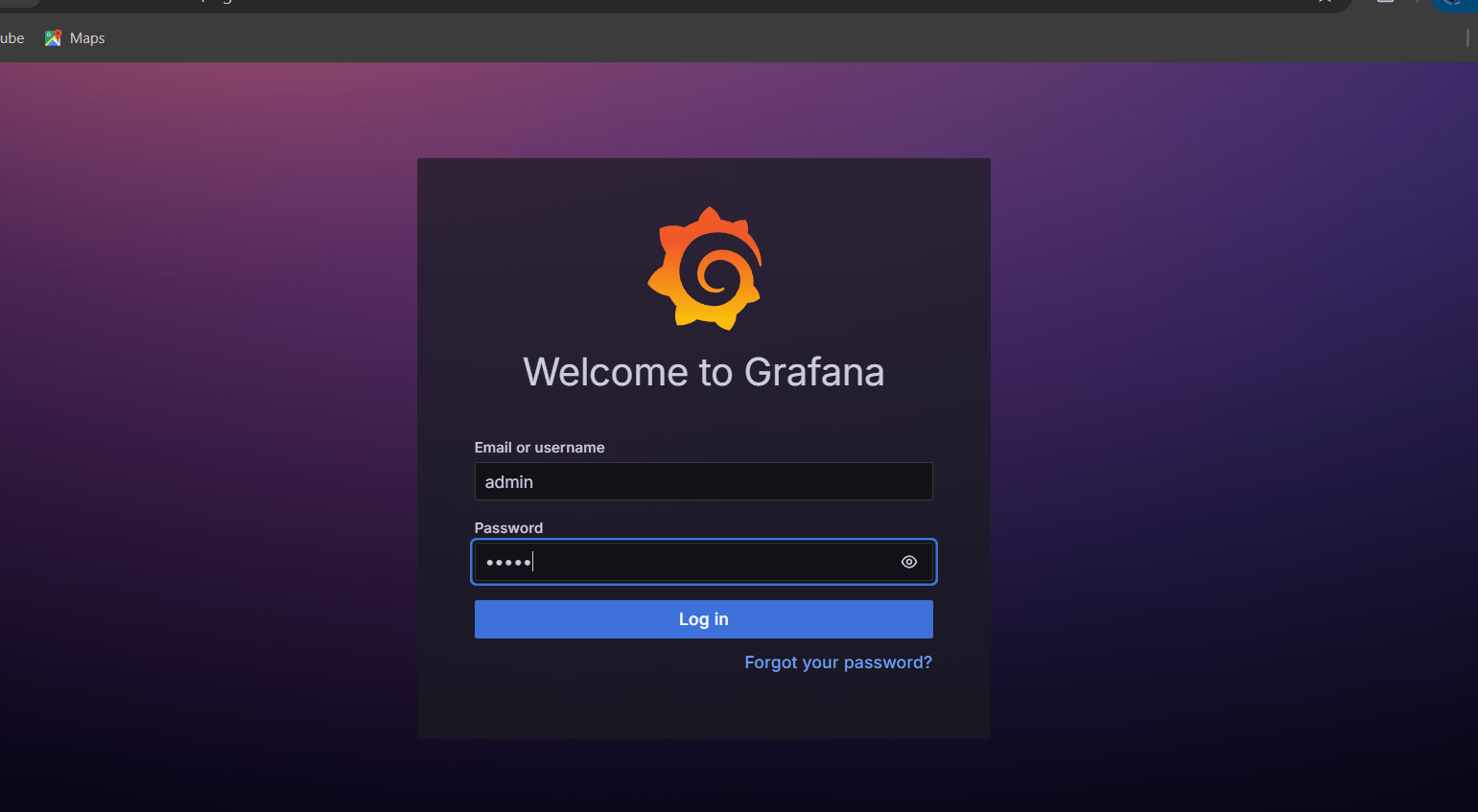

STEP 14: Configure Grafana to connect to this database.

- In your browser, open http://<EC2_PUBLIC_IP>:3000

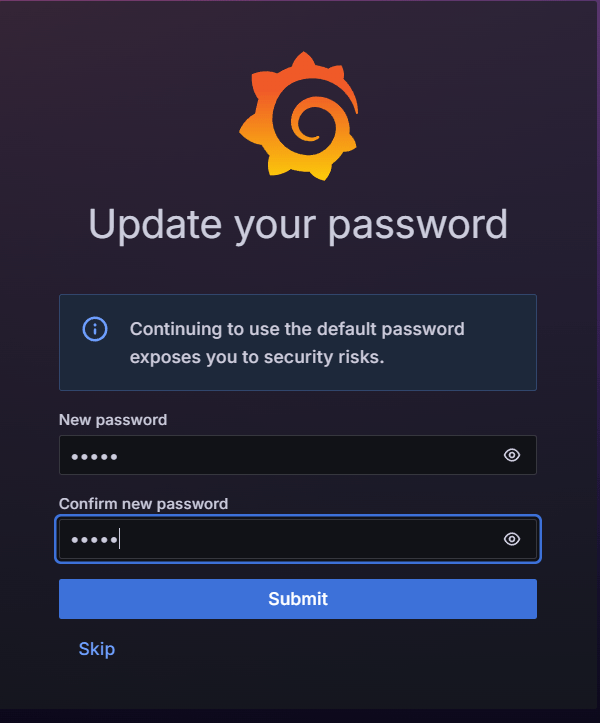

STEP 15: Log in (Grafana's default credentials are admin / admin) and set a new password when prompted.

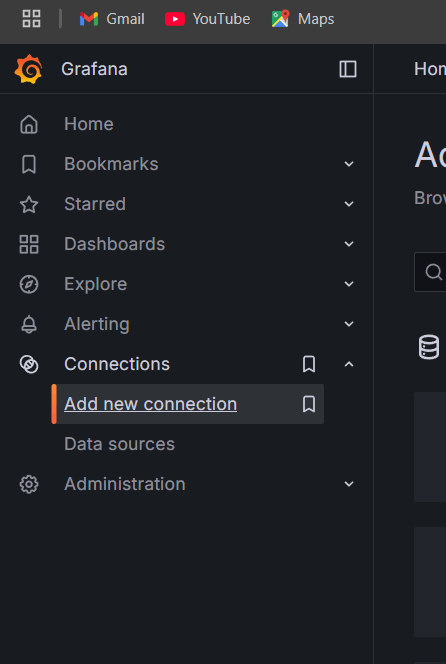

STEP 16: Select Connections and click Add new connection.

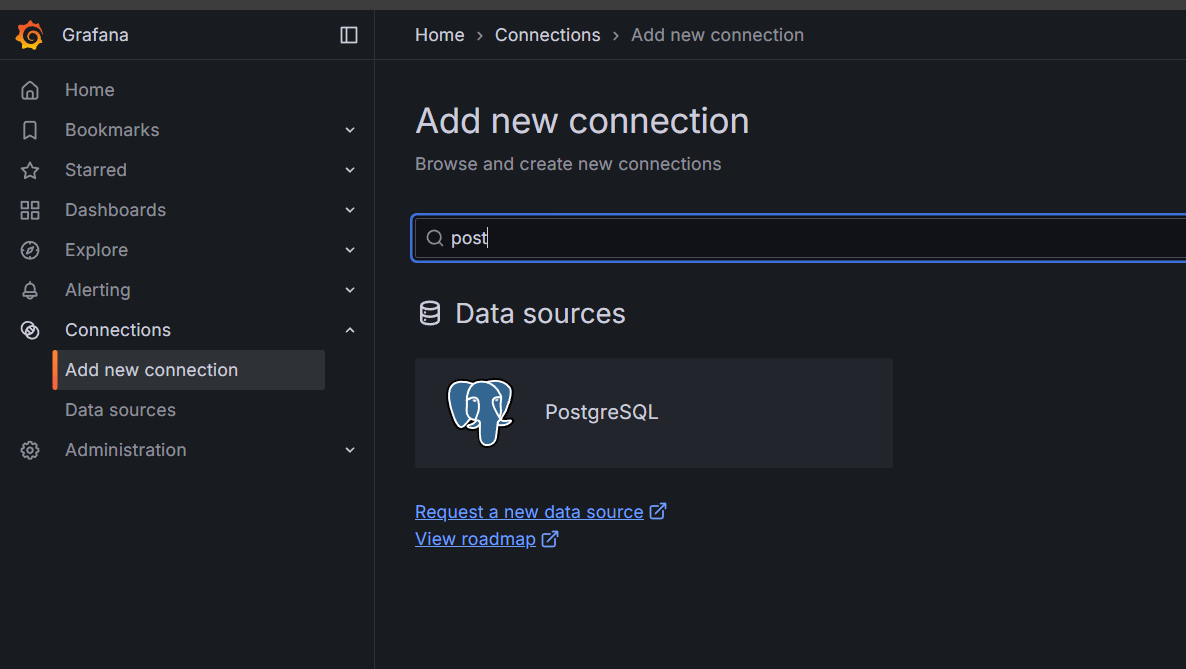

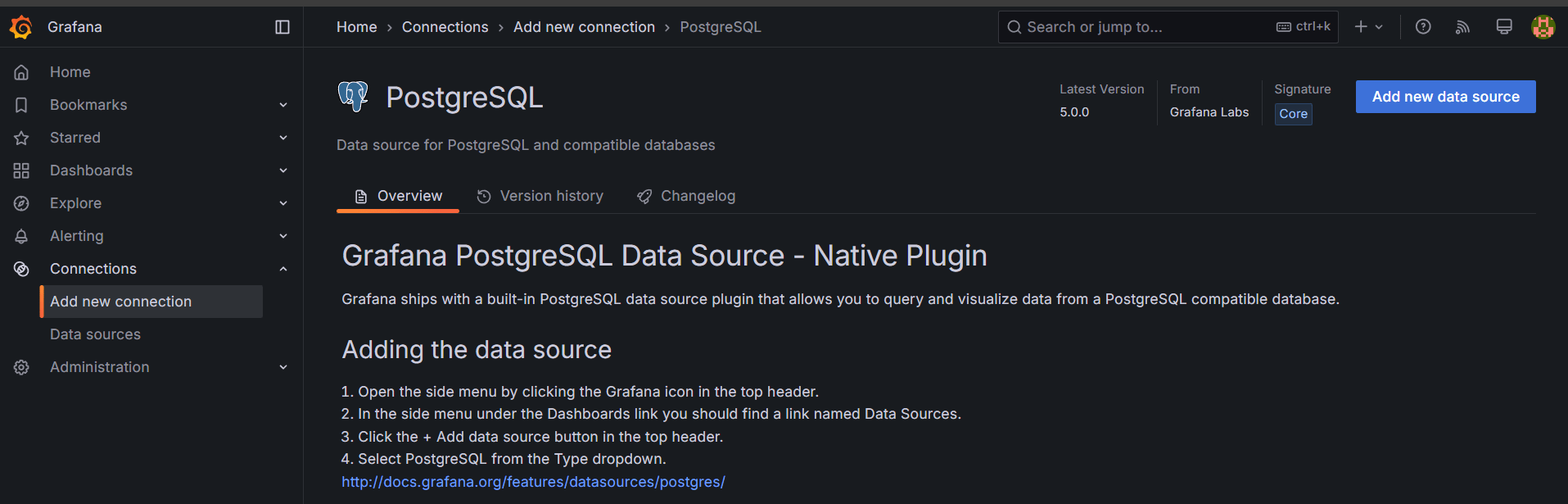

STEP 17: Search for PostgreSQL and select it.

STEP 18: Click Add new data source.

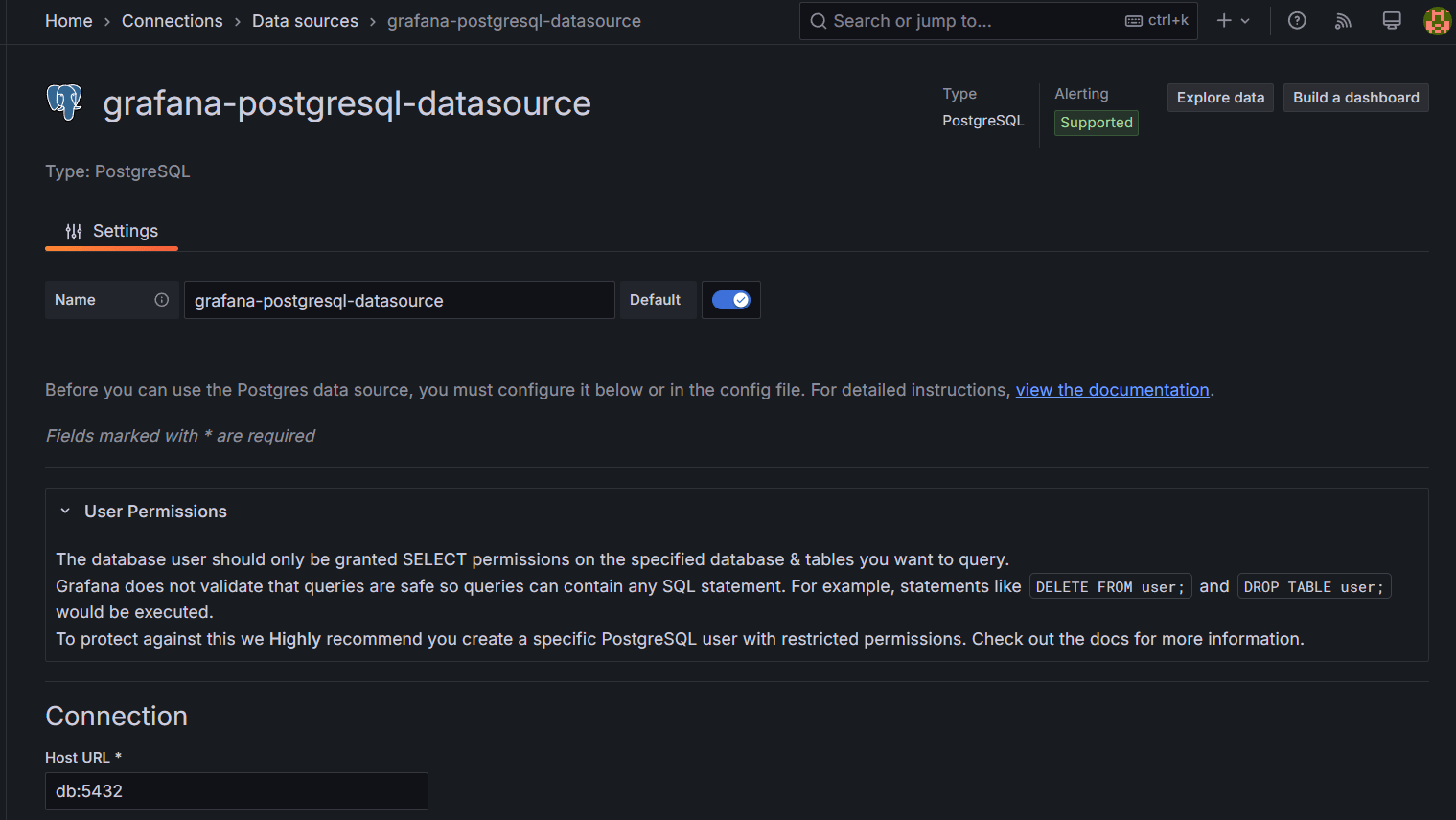

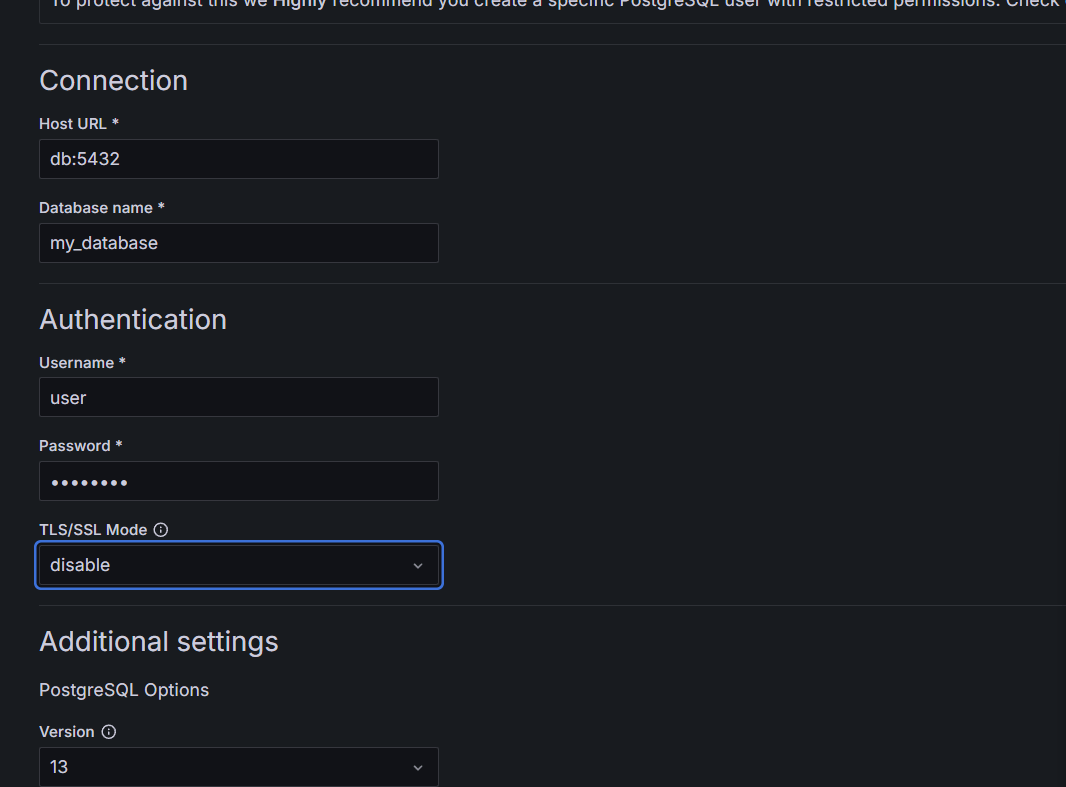

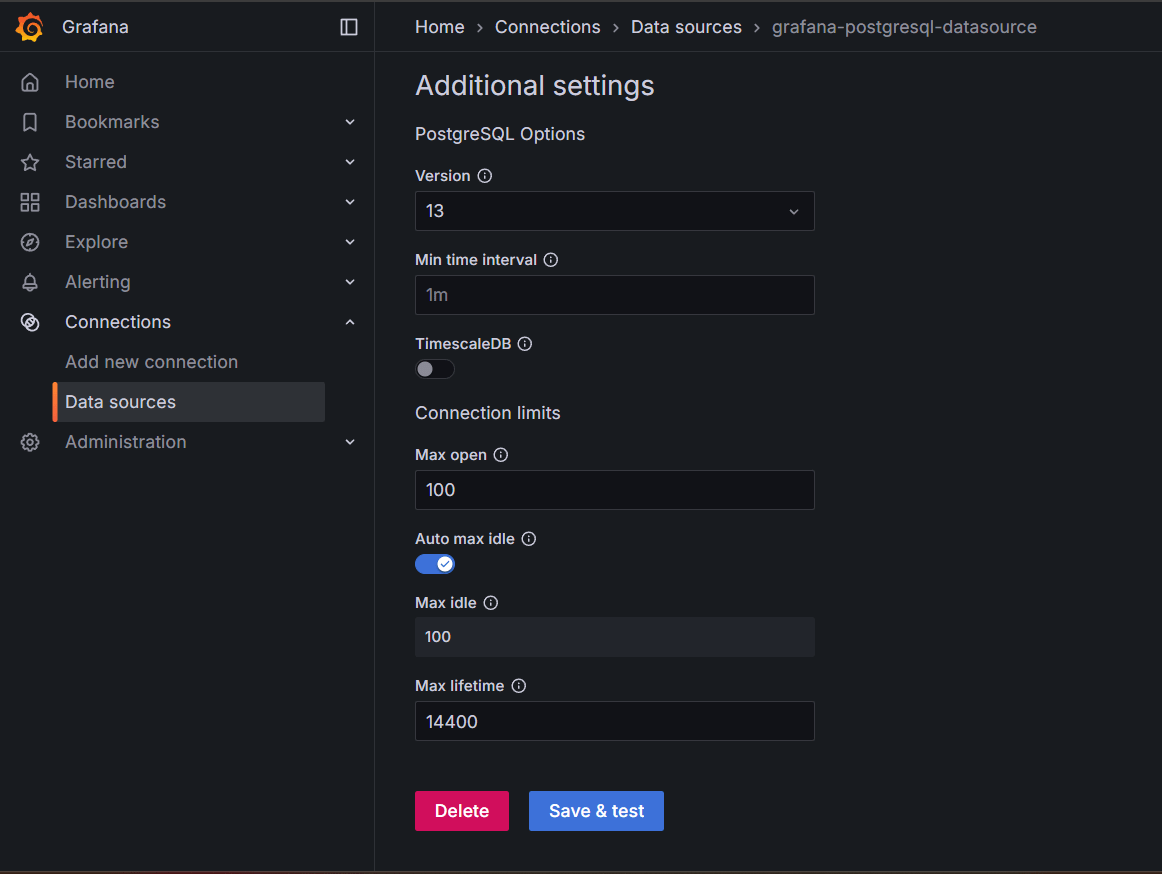

STEP 19: Enter the Host URL and Database name (db:5432 and my_database, matching docker-compose.yml), enter the Username as “user” and the Password as “password”, and set TLS/SSL Mode to “Disable”.

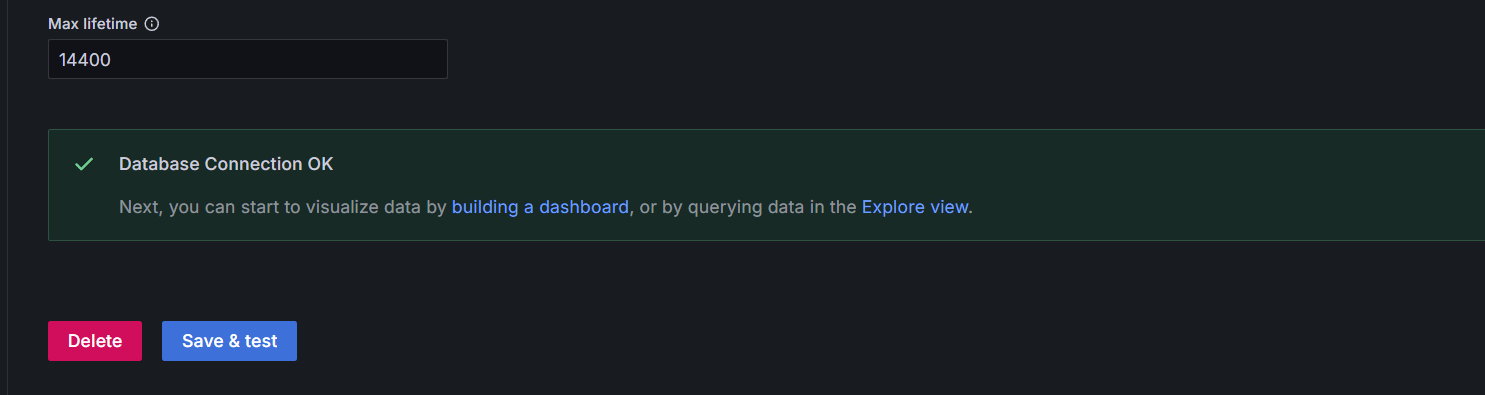

- Save & test.

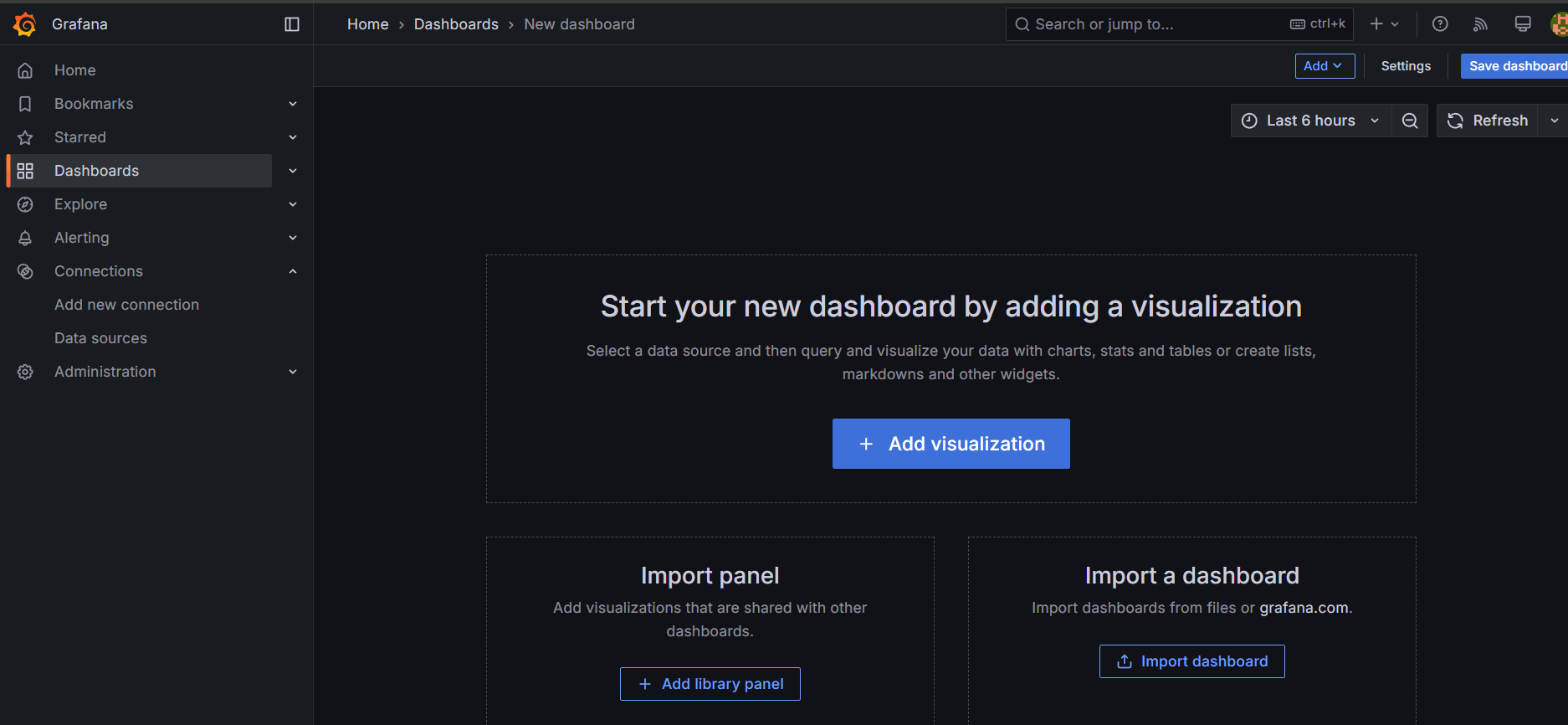

STEP 20: Create Grafana Dashboards.

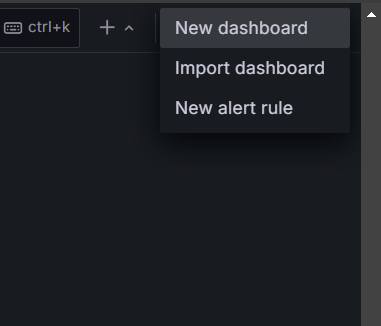

- Click the + icon in the top-left corner, then select New dashboard.

- Select Add visualization.

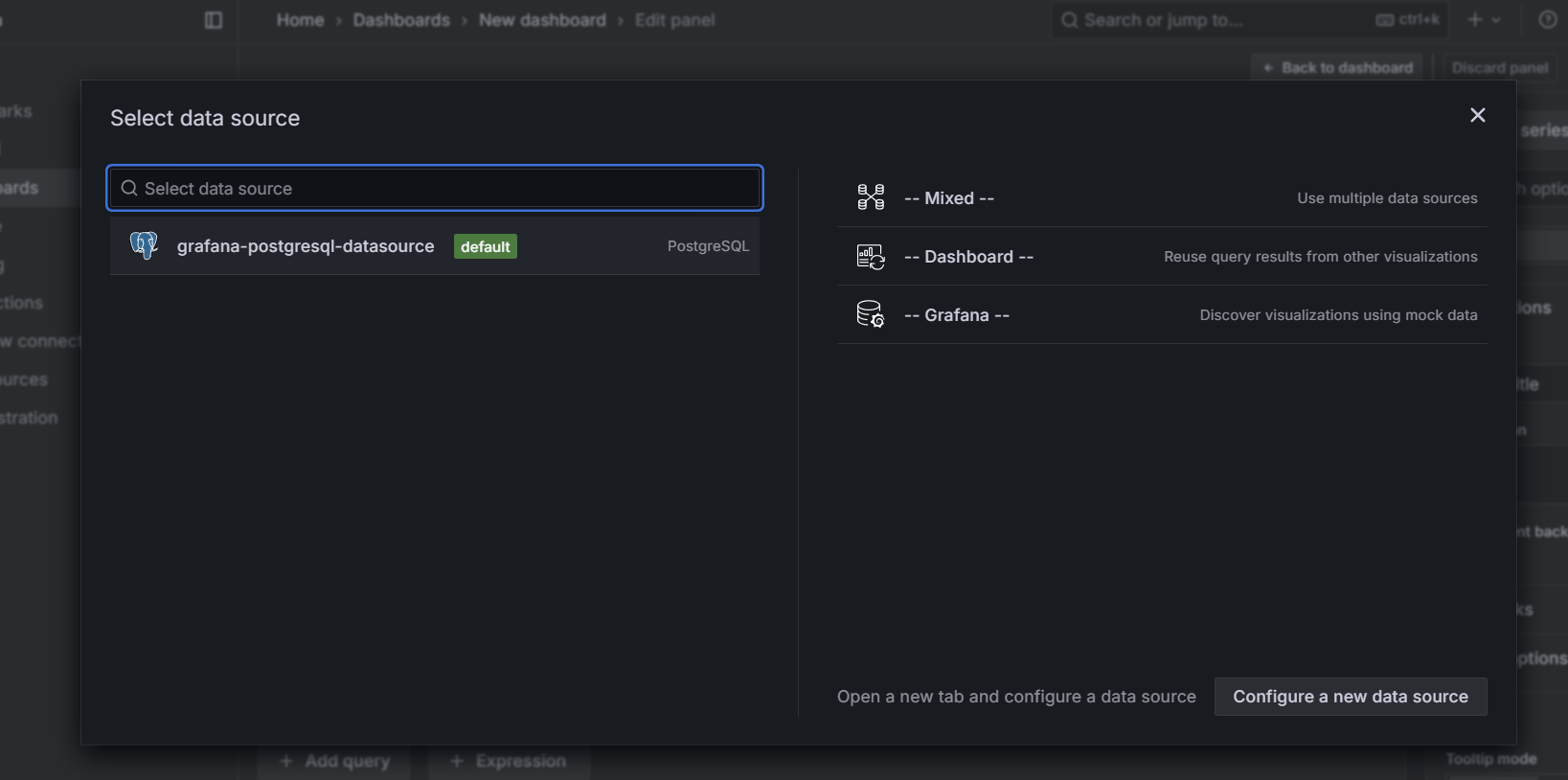

STEP 21: Select data source as PostgreSQL.

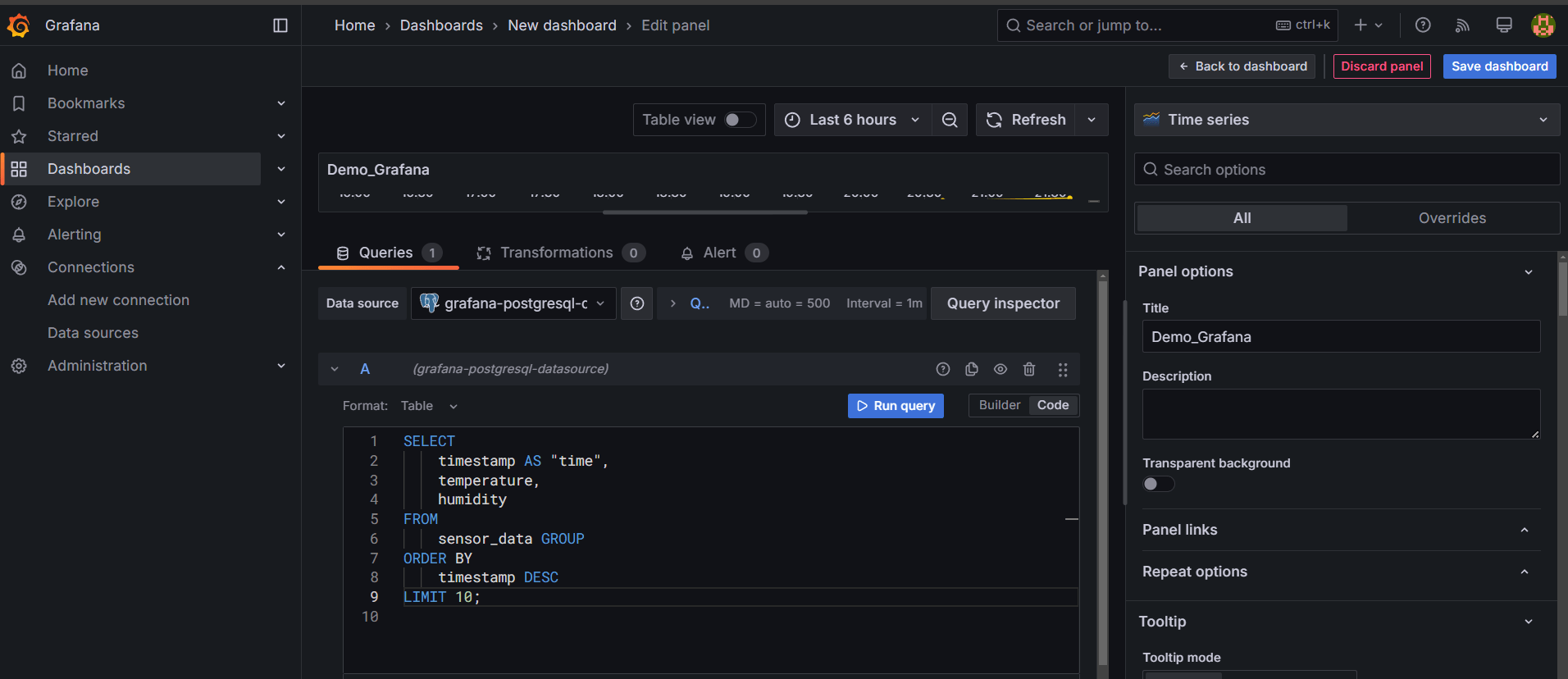

STEP 22: Enter the query below and a panel title.

    SELECT
        timestamp AS "time",
        temperature,
        humidity
    FROM sensor_data
    ORDER BY timestamp DESC
    LIMIT 10;

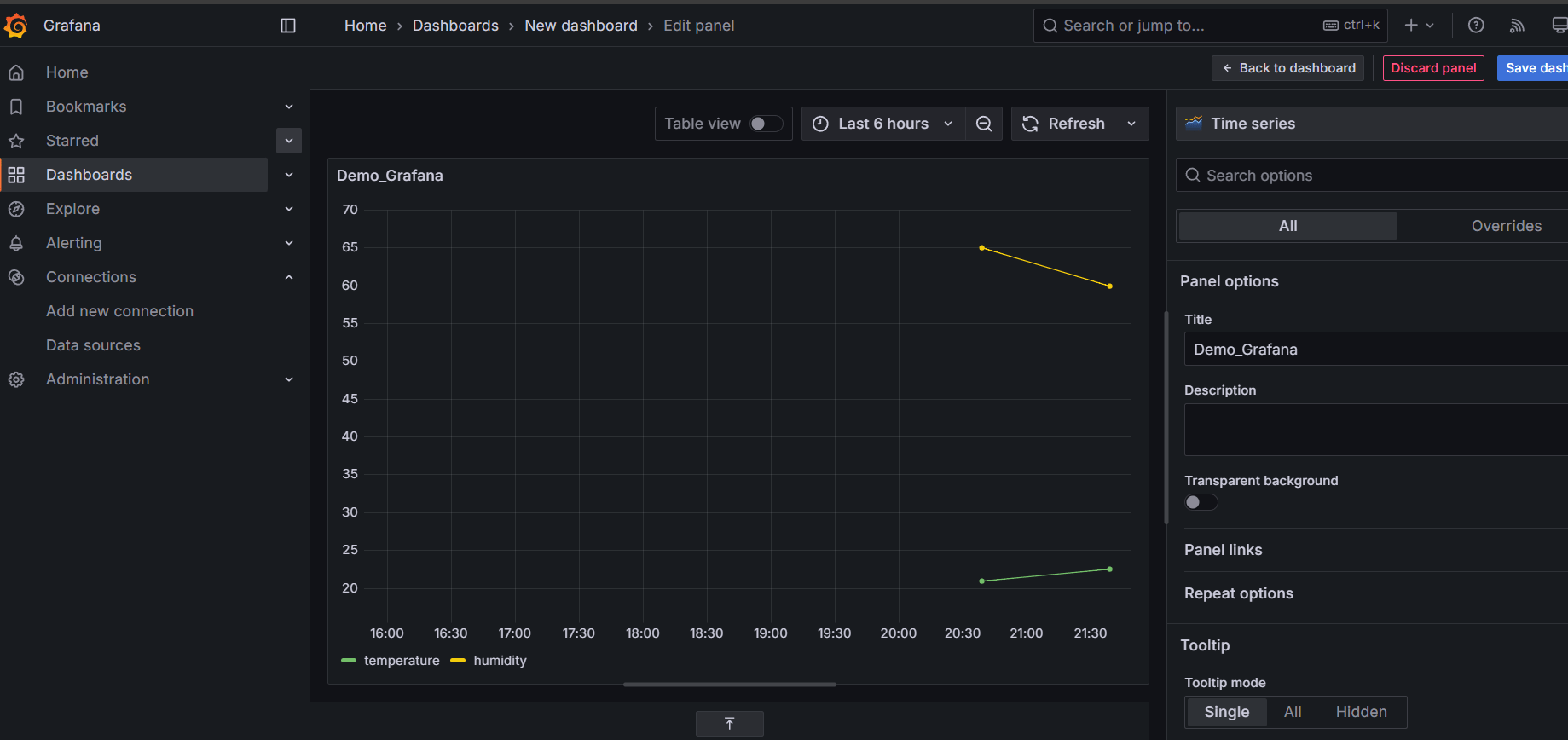

STEP 23: You will see the Dashboard.

Conclusion.

In conclusion, Docker offers a powerful and efficient way to build and scale real-time data pipelines. By leveraging its containerization capabilities, developers can create isolated, consistent environments for each component, making it easier to manage complex data workflows. Docker’s flexibility and scalability are key when handling large volumes of streaming data, ensuring high performance and reliability.

As we’ve seen, Docker simplifies the deployment of real-time data processing tools, streamlining integration and reducing potential issues related to compatibility and resource management. With the right strategies and best practices in place, Docker can significantly enhance the efficiency of your data pipeline, allowing you to process and analyze data faster than ever before.

Whether you’re building an analytics platform or working with continuous data streams, Docker can empower you to scale your operations smoothly. Embracing containerized environments is a smart step towards optimizing your real-time data pipelines, improving performance, and ensuring seamless scalability as your data needs grow.
